In the end, here is what I did, with the help of friends:

    # Importing all the necessary libraries
    from collections import Counter
    import matplotlib.pyplot as plt
    import numpy as np
    import string

    # Asking for the file and for what to count.
    # Note: raw_input is Python 2; on Python 3 the equivalent is input().
    filename=raw_input('Filename (e.g. yourfile.txt): ')
    cond=raw_input('What do you want to count? \n A) Words.\n B) Characters and Punctuation. \n Choice: ')

    # 'r' opens the file for reading; the with block closes it afterwards.
    with open(filename,'r') as f:
        # read() returns the entire text as one gigantic string.
        text=f.read()

    # Make the whole text lowercase, so that words capitalized only because
    # of sentence structure are counted together with their lowercase forms.
    text=text.lower()

    if cond in ['A','a','A)','a)']:
        # Characters to strip. Every entry has to be a single character,
        # because the filter below checks the text one character at a time.
        punctuation=['!', '#', '"', '%', '$', '&', ')', '(', '+', '*', ',', '/', '.', ';', ':', '=', '<', '?', '>', '@', '[', ']', '\\', '_', '^', '`', '{', '}', '|', '~']
        # The two-character marks ('--' as the dash, and '', presumably a
        # quotation mark) are replaced separately for the same reason.
        text=text.replace('--',' ').replace("''",' ')
        text="".join(l for l in text if l not in punctuation)
        # Hyphenated words are safe: a single '-' is not in the list, since
        # the text uses '--' as the dash.

        # Split the text into separate words, giving a list of strings.
        text=text.split()

        # Use Counter to calculate the frequency of each word in the text.
        count=Counter(text)
        # That is not enough, since count is a dict-like object keyed by word.
        # .most_common() returns a list of (word, frequency) tuples, sorted
        # from most to least frequent.
        count=count.most_common()

        # Create placeholder arrays and fill them with the frequency values.
        # Along with the experimental data, we will take the averaged
        # proportionality constant (K) and plot the curve y=K/x.
        y=np.arange(len(count))
        # 'Rank' always starts from 1, so x runs from 1 to len(count).
        x=np.arange(1,len(count)+1)
        yn=["" for m in range(len(count))]
        for i in range(len(count)):
            y[i]=count[i][1]
            yn[i]=count[i][0]

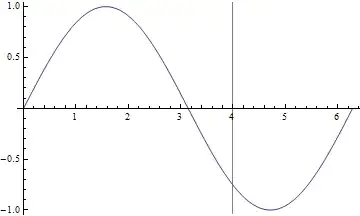

        # K is the average of rank*frequency, Ks its standard deviation.
        K,Ks=round(np.average(x*y),2),round(np.std(x*y),2)
        plt.plot(x,y,color='red',linewidth=3)
        plt.plot(x,K/x,color='green',linewidth=2)
        plt.xlabel('Rank')
        plt.ticklabel_format(style='sci', axis='x', scilimits=(0,0))
        plt.ticklabel_format(style='sci', axis='y', scilimits=(0,0))
        # Invisible point, plotted only so that the legend gets a third entry.
        plt.plot(0,0,'o',alpha=0)
        plt.ylabel('Frequency')
        plt.grid(True)
        plt.title("Testing Zipf's Law: the relationship between the frequency and rank of a word in a text")
        # Raw string, so that \delta reaches matplotlib's mathtext untouched.
        plt.legend(['Experimental data', r'y=K/x, K=%s, $\delta_{K}$ = %s'%(K,Ks), 'Most used word=%s, least used=%s'%(count[0],count[-1])], loc='best',numpoints=1)
        plt.show()

    elif cond in ['B','b','B)','b)']:
        # Remove all whitespace, so only characters and punctuation are counted.
        # This is the Python 2 form of str.translate; on Python 3,
        # "".join(text.split()) does the same job.
        text=text.translate( None, string.whitespace )
        count=Counter(text)
        # List of (character, frequency) tuples, most frequent first.
        count=count.most_common()
        y=np.arange(len(count))
        x=np.arange(1,len(count)+1)
        yn=["" for m in range(len(count))]
        for i in range(len(count)):
            y[i]=count[i][1]
            yn[i]=count[i][0]
        # K is the average of rank*frequency, Ks its standard deviation.
        K,Ks=round(np.average(x*y),2),round(np.std(x*y),2)
        plt.plot(x,y,color='red',linewidth=3)
        plt.plot(x,K/x,color='green',linewidth=2)
        plt.xlabel('Rank')
        plt.ticklabel_format(style='sci', axis='x', scilimits=(0,0))
        plt.ticklabel_format(style='sci', axis='y', scilimits=(0,0))
        # Invisible point, plotted only so that the legend gets a third entry.
        plt.plot(0,0,'o',alpha=0)
        plt.ylabel('Frequency')
        plt.grid(True)
        plt.title("Testing Zipf's Law: the relationship between the frequency and rank of a character/punctuation mark in a text")
        # Raw string, so that \delta reaches matplotlib's mathtext untouched.
        plt.legend(['Experimental data', r'y=K/x, K=%s, $\delta_{K}$ = %s'%(K,Ks), 'Most used character=%s, least used=%s'%(count[0],count[-1])], loc='best',numpoints=1)
        plt.show()
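
As a side note, the rank/frequency bookkeeping that both branches repeat can be pulled into one helper. This is only a minimal sketch and not part of the script itself. The name `zipf_constant` and the sample sentence are made up for illustration. It uses plain Python instead of NumPy, and computes the population standard deviation, which is what `np.std` returns by default.

```python
from collections import Counter
import math


def zipf_constant(tokens):
    """Return (ranked, K, K_std) for an iterable of tokens.

    ranked is a list of (rank, token, frequency) tuples, rank starting at 1.
    K is the mean of rank*frequency over all tokens, and K_std is the
    population standard deviation of those products.
    """
    counts = Counter(tokens).most_common()
    ranked = [(rank, tok, freq)
              for rank, (tok, freq) in enumerate(counts, start=1)]
    products = [rank * freq for rank, _, freq in ranked]
    if not products:
        raise ValueError("no tokens to count")
    K = sum(products) / float(len(products))
    K_std = math.sqrt(sum((p - K) ** 2 for p in products) / len(products))
    return ranked, K, K_std


# Hypothetical sample input, just to show the call.
sample = "the cat and the dog and the bird".split()
ranked, K, K_std = zipf_constant(sample)
print(ranked[0], K, K_std)
```

The same function would work for the character case by passing the whitespace-free string instead of a list of words, since a string is just an iterable of characters.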