I am trying to read a CSV file into a pandas DataFrame, specifying the column dtypes as follows:

    import pandas as pd

    dp = pd.read_csv('products.csv', header=0,
                     dtype={'name': str, 'review': str,
                            'rating': int, 'word_count': dict},
                     engine='c')
    print dp.shape
    for col in dp.columns:
        print 'column', col, ':', type(col[0])
    print type(dp['rating'][0])
    dp.head(3)

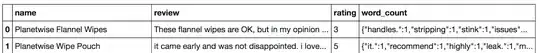

This is the output:

    (183531, 4)
    column name : <type 'str'>
    column review : <type 'str'>
    column rating : <type 'str'>
    column word_count : <type 'str'>
    <type 'numpy.int64'>

I can sort of understand that pandas might find it difficult to convert a string representation of a dictionary into a dictionary, given this and this. But how can the content of the "rating" column be both str and numpy.int64?
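One thing that may be worth checking here: in the loop above, `col` is the column label, so `type(col[0])` looks at the first character of the column name rather than at a cell of the column. Below is a minimal sketch comparing the two lookups. The in-memory CSV is a made-up two-row stand-in for products.csv, and the names `csv_text`, `label_types` and `value_types` are hypothetical. The `dict` entry is left out of `dtype` to keep the sketch small.

```python
import io
import pandas as pd

# Hypothetical two-row stand-in for products.csv (not the real data).
csv_text = u'''name,review,rating,word_count
Widget,Works well,5,"{'works': 1, 'well': 1}"
Gadget,Broke fast,1,"{'broke': 1, 'fast': 1}"
'''

dp = pd.read_csv(io.StringIO(csv_text), header=0,
                 dtype={'name': str, 'review': str, 'rating': int})

label_types = {}
value_types = {}
for col in dp.columns:
    # col is the column label (a string), so col[0] is its first character.
    label_types[col] = type(col[0])
    # dp[col].iloc[0] is the first cell actually stored in that column.
    value_types[col] = type(dp[col].iloc[0])
```

If this matches what is happening with the real file, `label_types` would report a string type for every column regardless of its contents, while `value_types` reflects the stored values.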

By the way, tweaks such as omitting the engine or header arguments do not change anything.
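A tweak that might be worth trying for the word_count column is the `converters` argument of `read_csv`, which applies a function to each cell of a column while parsing. The following is only a sketch, assuming each cell holds a Python-literal dictionary string such as `{'works': 1, 'well': 1}` (the real cell format is not confirmed here). The sample CSV and the name `first_counts` are hypothetical.

```python
import ast
import io
import pandas as pd

# Hypothetical one-row stand-in for products.csv (not the real data).
csv_text = u'''name,review,rating,word_count
Widget,Works well,5,"{'works': 1, 'well': 1}"
'''

dp = pd.read_csv(io.StringIO(csv_text), header=0,
                 dtype={'name': str, 'review': str, 'rating': int},
                 # Assumption: cells are Python-literal dicts, so
                 # ast.literal_eval can parse them safely.
                 converters={'word_count': ast.literal_eval})

# First parsed cell of the word_count column.
first_counts = dp['word_count'].iloc[0]
```

`ast.literal_eval` only evaluates literals, so it is a safer choice than `eval` for data read from a file. If the cells were JSON instead, `json.loads` would be the analogous converter.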

Thanks and regards