For debugging purposes, I'd like to see the approximate memory size of each live variable in my Python script, because either one variable is growing quickly or multiple variables are being created but never destroyed.
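To illustrate the kind of report I'm after, here is a minimal sketch using only the standard library. The helper name `report_variable_sizes` is hypothetical, and `sys.getsizeof` is shallow (it does not follow references inside containers), so the numbers are only a rough lower bound.

```python
import sys


def report_variable_sizes(namespace, top=10):
    """Return (name, shallow_size_in_bytes) pairs, largest first.

    `namespace` is any name -> object mapping, e.g. globals() or locals().
    Sizes come from sys.getsizeof, which does not count referenced objects.
    """
    sizes = [(name, sys.getsizeof(value)) for name, value in namespace.items()]
    sizes.sort(key=lambda pair: pair[1], reverse=True)
    return sizes[:top]


# Hypothetical usage with two example variables.
example = {"big": list(range(1000)), "small": 1}
for name, size in report_variable_sizes(example):
    print(name, size)
```

Calling it as `report_variable_sizes(globals())` at a few points in the script would show which names grow between calls.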

EDIT 1:

Here is the output of Guppy-PE (total variables size ~54MB) at the moment when used memory grows by 6GB.

    Partition of a set of 370715 objects. Total size = 54137008 bytes.
     Index  Count   %     Size   % Cumulative  % Kind (class / dict of class)
         0 151088  41 16953320  31  16953320  31 str
         1  91788  25  8121344  15  25074664  46 tuple
         2  16472   4  4979464   9  30054128  56 unicode
         3   4699   1  3118216   6  33172344  61 dict (no owner)
         4    895   0  3100648   6  36272992  67 dict of module
         5  23616   6  3022848   6  39295840  73 types.CodeType
         6  23707   6  2844840   5  42140680  78 function
         7   2412   1  2303520   4  44444200  82 dict of type
         8   2412   1  2178768   4  46622968  86 type
         9  12733   3  1805096   3  48428064  89 list
    <925 more rows. Type e.g. '_.more' to view.>

I suspect a library (Wand) that wraps an external library (libmagickwand-dev), but I don't know at what point in my code the growth happens.
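One way I could narrow this down is to wrap each suspicious step and compare what Python itself allocated with how much the whole process grew. The following is only a sketch under assumptions: it needs Python 3 (for `tracemalloc`) and a Unix system (for `resource`), and the helper names `peak_rss_bytes` and `measure` are made up for illustration. If the process growth is far larger than the Python-level peak, the memory was probably allocated by native code that heap profilers do not see.

```python
import resource
import sys
import tracemalloc


def peak_rss_bytes():
    """Peak resident set size of this process, in bytes (Unix only)."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in kilobytes on Linux and in bytes on macOS.
    return peak if sys.platform == "darwin" else peak * 1024


def measure(step):
    """Run step() and return (python_peak_bytes, peak_rss_growth_bytes).

    python_peak_bytes: peak memory allocated through Python's allocators
    while step() ran, as tracked by tracemalloc.
    peak_rss_growth_bytes: how much the process-wide peak RSS rose,
    which also includes allocations made by C libraries.
    """
    rss_before = peak_rss_bytes()
    tracemalloc.start()
    try:
        step()
        _current, python_peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return python_peak, peak_rss_bytes() - rss_before


# Hypothetical stand-in for one step of the real script.
python_peak, rss_growth = measure(lambda: [0] * 1000000)
print("python peak:", python_peak, "rss growth:", rss_growth)
```

Because `ru_maxrss` is a high-water mark, the growth figure only moves when a step pushes the process past its previous peak, so steps should be measured in the order they run.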

Is there a better way to debug this kind of problem?

EDIT 2

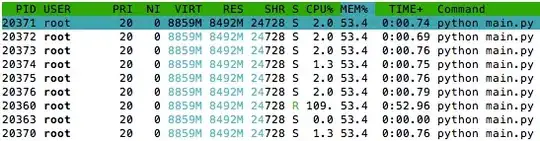

I can see those 6GB in htop but not in Guppy-PE: