I keep running into this problem in a couple of different scenarios, so I thought I would share it here and see whether there is an optimal way to solve it.

Suppose I have a big array X of arbitrary objects and another array y of the same size that holds the label each element of X belongs to, like the following:

    import numpy as np

    X = np.array(['object1', 'object2', 'object3', 'object4', 'object5'])
    y = np.array([0, 1, 1, 0, 2])

What I want is to build a dictionary (hash map) that uses the set of labels as keys and, for each label, the indexes of all the objects in X with that label as values. In this case the desired output would be:

{0: (array([0, 3]),), 1: (array([1, 2]),), 2: (array([4]),)}

Note that the contents of X do not actually matter; I included it for the sake of completeness.

My naive solution is to iterate over all the labels and use np.where(labels == label) to build the dictionary. In more detail, I use this function:

    def get_key_to_indexes_dic(labels):
        """
        Builds a dictionary whose keys are the labels and whose
        items are all the indexes that have that particular key
        """
        # Get the unique labels and initialize the dictionary
        label_set = set(labels)
        key_to_indexes = {}

        for label in label_set:
            key_to_indexes[label] = np.where(labels == label)

        return key_to_indexes
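
To make the behaviour concrete, here is a minimal, self-contained run of the function on the example labels. The variable name `result` is only illustrative. Note that each value is the 1-tuple returned by np.where, so the index array itself is at position 0 of that tuple.

```python
import numpy as np


def get_key_to_indexes_dic(labels):
    """Map each distinct label to the result of np.where(labels == label)."""
    key_to_indexes = {}
    for label in set(labels):
        key_to_indexes[label] = np.where(labels == label)
    return key_to_indexes


y = np.array([0, 1, 1, 0, 2])
result = get_key_to_indexes_dic(y)

# Each value is a 1-tuple; [0] extracts the array of positions.
for label in sorted(result):
    print(label, result[label][0])
# 0 [0 3]
# 1 [1 2]
# 2 [4]
```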

So now the core of my question: Is there a way to do better? Is there a natural way to solve this using NumPy functions? Is my approach misguided somehow?
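
One possible direction, offered as an unbenchmarked sketch and not a definitive answer: sort the labels once and split the sorted positions at the group boundaries, so the array is not scanned once per label. The helper name `group_indexes_by_label` is hypothetical; it uses np.argsort, np.unique with return_index=True, and np.split. Unlike the function above, the values here are plain index arrays, not the 1-tuples that np.where returns.

```python
import numpy as np


def group_indexes_by_label(labels):
    """Hypothetical helper: group positions by label using a single sort."""
    labels = np.asarray(labels)
    # Stable sort, so positions inside each group stay in ascending order.
    order = np.argsort(labels, kind="stable")
    # Distinct labels and the offset where each group starts in the sorted array.
    unique_labels, starts = np.unique(labels[order], return_index=True)
    # Cut the sorted positions at every group start except the first (offset 0).
    groups = np.split(order, starts[1:])
    return {label: idx for label, idx in zip(unique_labels.tolist(), groups)}


y = np.array([0, 1, 1, 0, 2])
grouped = group_indexes_by_label(y)
print(grouped)
```

Whether this is actually faster depends on the number of distinct labels and the size of the array, so it would need to be timed on real data.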

As a side question of lesser importance: what is the complexity of the function defined above? I believe it is the following:

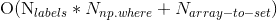

Or, in words: the number of labels times the complexity of running np.where over an array the size of y, plus the complexity of making a set out of an array. Is this correct?

P.S. I could not find a related post with this specific question. If you have suggestions for changing the title or anything else, I would be grateful.