I have been comparing the relative efficiency of numpy versus Python list comprehensions in multiplying together arrays of random numbers. (Python 3.4/Spyder, Windows and Ubuntu).

As one would expect, for all but the smallest arrays, numpy rapidly outperforms an list comprehension, and for increasing array length you get the expected sigmoid curve for performance. But the sigmoid is far from smooth, which I am puzzling to understand.

Obviously there is a certain amount of quantization noise for shorter array lengths, but I am getting unexpectedly noisy results, particularly under Windows. The figures are the mean of 100 runs of the various array lengths, so should have any transient effects smoothed out (so I would have thought).

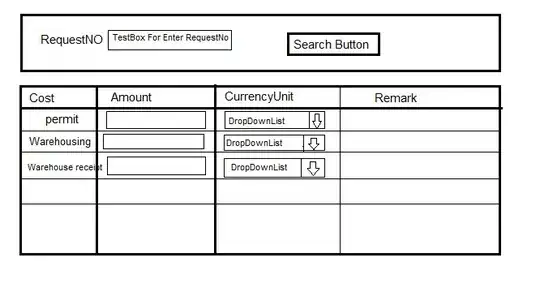

Numpy and Python list performance comparison

The figures below show the ratio of multiplying arrays of differing lengths using numpy against list comprehension.

Array Length Windows Ubuntu

1 0.2 0.4

2 2.0 0.6

5 1.0 0.5

10 3.0 1.0

20 0.3 0.8

50 3.5 1.9

100 3.5 1.9

200 10.0 3.0

500 4.6 6.0

1,000 13.6 6.9

2,000 9.2 8.2

5,000 14.6 10.4

10,000 12.1 11.1

20,000 12.9 11.6

50,000 13.4 11.4

100,000 13.4 12.0

200,000 12.8 12.4

500,000 13.0 12.3

1,000,000 13.3 12.4

2,000,000 13.6 12.0

5,000,000 13.6 11.9

So I guess my question is can anyone explain why the results, particularly under Windows are so noisy. I have run the tests multiple times but the results always seem to be exactly the same.

UPDATE. At Reblochon Masque's suggestion I have disabled grabage collection. Which smooths the Windows performance out somewhat, but the curves are still lumpy.

Numpy and Python list performance comparison

(Updated to remove garbage collection)

Array Length Windows Ubuntu

1 0.1 0.3

2 0.6 0.4

5 0.3 0.4

10 0.5 0.5

20 0.6 0.5

50 0.8 0.7

100 1.6 1.1

200 1.3 1.7

500 3.7 3.2

1,000 3.9 4.8

2,000 6.5 6.6

5,000 11.5 9.2

10,000 10.8 10.7

20,000 12.1 11.4

50,000 13.3 12.4

100,000 13.5 12.6

200,000 12.8 12.6

500,000 12.9 12.3

1,000,000 13.3 12.3

2,000,000 13.6 12.0

5,000,000 13.6 11.8

UPDATE

At @Sid's suggestion, I've restricted it to running on a single core on each machine. The curves are slightly smoother (particularly the Linux one), but still with the inflexions and some noise, particularly under Windows.

(It was actually the inflexions that I was originally going to post about, as they appear consistently in the same places.)

Numpy and Python list performance comparison

(Garbage collection disabled and running on 1 CPU)

Array Length Windows Ubuntu

1 0.3 0.3

2 0.0 0.4

5 0.5 0.4

10 0.6 0.5

20 0.3 0.5

50 0.9 0.7

100 1.0 1.1

200 2.8 1.7

500 3.7 3.3

1,000 3.3 4.7

2,000 6.5 6.7

5,000 11.0 9.6

10,000 11.0 11.1

20,000 12.7 11.8

50,000 12.9 12.8

100,000 14.3 13.0

200,000 12.6 13.1

500,000 12.6 12.6

1,000,000 13.0 12.6

2,000,000 13.4 12.4

5,000,000 13.6 12.2