I'm using scikit-learn's MDS to perform dimensionality reduction on some data. I would like to check the stress value to assess the quality of the reduction. I was expecting a value between 0 and 1, but I got values outside this range. Here's a minimal example:

    %matplotlib inline
    from sklearn.preprocessing import normalize
    from sklearn import manifold
    from matplotlib import pyplot as plt
    from matplotlib.lines import Line2D
    import numpy

    def similarity_measure(vec1, vec2):
        # Convert each (x, y) pair to polar form: angle and magnitude
        vec1_x = numpy.arctan2(vec1[1], vec1[0])
        vec2_x = numpy.arctan2(vec2[1], vec2[0])
        vec1_y = numpy.sqrt(numpy.sum(vec1[0] * vec1[0] + vec1[1] * vec1[1]))
        vec2_y = numpy.sqrt(numpy.sum(vec2[0] * vec2[0] + vec2[1] * vec2[1]))
        # Cosine similarity in the (angle, magnitude) representation
        dot = numpy.sum(vec1_x * vec2_x + vec1_y * vec2_y)
        mag1 = numpy.sqrt(numpy.sum(vec1_x * vec1_x + vec1_y * vec1_y))
        mag2 = numpy.sqrt(numpy.sum(vec2_x * vec2_x + vec2_y * vec2_y))
        return dot / (mag1 * mag2)

    plt.figure(figsize=(15, 15))
    delta = numpy.zeros((100, 100))
    data_x = numpy.random.randint(0, 100, (100, 100))
    data_y = numpy.random.randint(0, 100, (100, 100))

    # Fill the symmetric similarity matrix
    for j in range(100):
        for k in range(100):
            if j <= k:
                dist = similarity_measure((data_x[j].flatten(), data_y[j].flatten()), (data_x[k].flatten(), data_y[k].flatten()))
                delta[j, k] = delta[k, j] = dist

    # Map similarity in [-1, 1] to dissimilarity in [0, 1], then rescale by the maximum
    delta = 1-((delta+1)/2)
    delta /= numpy.max(delta)

    mds = manifold.MDS(n_components=2, max_iter=3000, eps=1e-9, random_state=0,
                       dissimilarity="precomputed", n_jobs=1)
    coords = mds.fit(delta).embedding_
    print(mds.stress_)

    plt.scatter(coords[:, 0], coords[:, 1], marker='x', s=50, edgecolor='None')
    plt.tight_layout()

Which, in my test, printed the following:

263.412196461

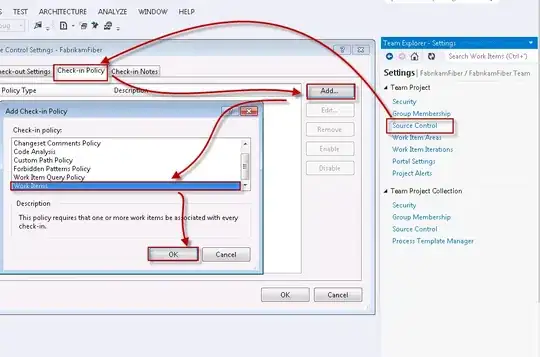

And produced this image:

How can I interpret this value without knowing its maximum? Or how can I normalize it so that it lies between 0 and 1?
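To make the question concrete, the kind of normalization I have in mind is something like Kruskal's Stress-1, which divides the sum of squared differences between embedded distances and input dissimilarities by the sum of squared embedded distances, then takes the square root. Below is a minimal sketch of that idea, assuming the embedding is Euclidean and the dissimilarity matrix is symmetric. `kruskal_stress1` is a hypothetical helper of my own, not part of scikit-learn, and I am not sure this is the right way to relate it to `stress_`.

```python
import numpy


def kruskal_stress1(coords, delta):
    """Kruskal's Stress-1 for an embedding `coords` of a dissimilarity matrix `delta`.

    Hypothetical helper (not a scikit-learn function).
    """
    coords = numpy.asarray(coords, dtype=float)
    delta = numpy.asarray(delta, dtype=float)
    # Pairwise Euclidean distances between the embedded points
    diff = coords[:, None, :] - coords[None, :, :]
    dist = numpy.sqrt((diff ** 2).sum(axis=-1))
    # Count each unordered pair once (strict upper triangle)
    iu = numpy.triu_indices_from(delta, k=1)
    # Raw stress: sum of squared differences between distances and dissimilarities
    raw = ((dist[iu] - delta[iu]) ** 2).sum()
    # Normalize by the sum of squared embedded distances
    return numpy.sqrt(raw / (dist[iu] ** 2).sum())
```

With the example above, this would be called as `kruskal_stress1(coords, delta)`. A perfect embedding gives 0, but I don't know whether this is the recommended way to judge the output of `MDS`.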

Thank you.