In a program I am working on, I need to multiply two matrices repeatedly. Because of the size of one of the matrices, this operation takes some time and I wanted to see which method would be the most efficient. The matrices have dimensions (m x n)*(n x p) where m = n = 3 and 10^5 < p < 10^6.

With the exception of NumPy, which I assume uses an optimized algorithm, every test is a naive triple-loop implementation of the matrix product, C[i,j] = sum over k of A[i,k]*B[k,j].

Below are my various implementations:

Python

    import numpy as np

    def dot_py(A, B):
        m, n = A.shape
        p = B.shape[1]

        C = np.zeros((m, p))

        for i in range(0, m):
            for j in range(0, p):
                for k in range(0, n):
                    C[i, j] += A[i, k] * B[k, j]
        return C

Numpy

    def dot_np(A, B):
        C = np.dot(A, B)
        return C

Numba

The code is the same as the Python one, but it is compiled just in time before being used:

    import numba as nb

    dot_nb = nb.jit(nb.float64[:,:](nb.float64[:,:], nb.float64[:,:]), nopython=True)(dot_py)

Each of the methods above is timed 10 times with the timeit module, and the best result is kept. The matrices are created using np.random.rand(n,m).

C++

    mat2 dot(const mat2& m1, const mat2& m2)
    {
        int m = m1.rows_;
        int n = m1.cols_;
        int p = m2.cols_;

        mat2 m3(m,p);

        for (int row = 0; row < m; row++) {
            for (int col = 0; col < p; col++) {
                for (int k = 0; k < n; k++) {
                    m3.data_[p*row + col] += m1.data_[n*row + k]*m2.data_[p*k + col];
                }
            }
        }

        return m3;
    }

Here, mat2 is a custom class that I defined, and dot(const mat2& m1, const mat2& m2) is a friend function of this class. It is timed using QueryPerformanceFrequency (QPF) and QueryPerformanceCounter (QPC) from Windows.h, and the program is compiled using MinGW with the g++ command. Again, the best time obtained from 10 executions is kept.
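For anyone who wants to reproduce the "best of 10" measurement without Windows.h, here is a minimal sketch of the same idea. It is not the timing code I actually used: `best_time_seconds` is a hypothetical helper name, and `std::chrono::steady_clock` is swapped in for the QPF/QPC calls.

```cpp
#include <cassert>
#include <chrono>
#include <limits>

// Hypothetical helper: call `work` `repeats` times and return the duration
// of the fastest call, in seconds. std::chrono::steady_clock stands in for
// the QueryPerformanceCounter calls used in the original measurements.
template <typename Work>
double best_time_seconds(Work&& work, int repeats = 10)
{
    assert(repeats > 0);
    double best = std::numeric_limits<double>::infinity();
    for (int r = 0; r < repeats; ++r) {
        const auto start = std::chrono::steady_clock::now();
        work();
        const auto stop = std::chrono::steady_clock::now();
        const std::chrono::duration<double> elapsed = stop - start;
        if (elapsed.count() < best) {
            best = elapsed.count();  // keep only the fastest run
        }
    }
    return best;
}
```

It would be called as `best_time_seconds([&] { C = dot(A, B); })`, where `A`, `B` and `C` are mat2 objects created beforehand. The result of the multiplication should be used afterwards (printed, for instance) so that an optimizing compiler cannot discard the work being timed.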

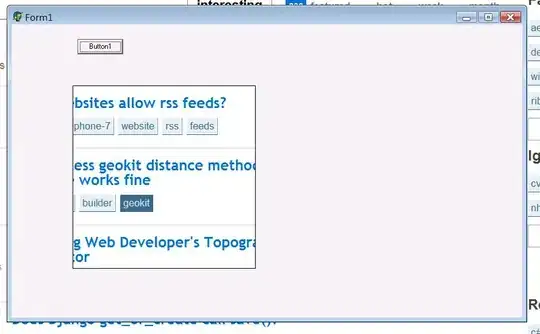

Results

As expected, the simple Python code is the slowest, but it still beats NumPy for very small matrices. Numba turns out to be about 30% faster than NumPy for the largest cases.

I am surprised by the C++ results, where the multiplication takes almost an order of magnitude more time than with Numba. I expected the two to take a similar amount of time.

This leads to my main question: Is this normal and, if not, why is C++ slower than Numba? I just started learning C++, so I might be doing something wrong. If so, what is my mistake, and what could I do to improve the efficiency of my code (other than choosing a better algorithm)?

EDIT 1

Here is the header of the mat2 class.

    #ifndef MAT2_H
    #define MAT2_H

    #include <iostream>

    class mat2
    {
    private:
        int rows_, cols_;
        float* data_;

    public:
        mat2() {}                                   // (default) constructor
        mat2(int rows, int cols, float value = 0);  // constructor
        mat2(const mat2& other);                    // copy constructor
        ~mat2();                                    // destructor

        // Operators
        mat2& operator=(mat2 other);                // assignment operator

        float operator()(int row, int col) const;
        float& operator() (int row, int col);

        mat2 operator*(const mat2& other);

        // Operations
        friend mat2 dot(const mat2& m1, const mat2& m2);

        // Other
        friend void swap(mat2& first, mat2& second);
        friend std::ostream& operator<<(std::ostream& os, const mat2& M);
    };

    #endif

EDIT 2

As many suggested, using the optimization flag was the missing element to match Numba. Below are the new curves compared to the previous ones. The curve tagged v2 was obtained by switching the two inner loops and shows another 30% to 50% improvement.
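To make the v2 change concrete, here is a rough sketch of dot() with the `col` and `k` loops interchanged. It is not the exact code behind the curve: `Mat` is a hypothetical stand-in for mat2 (row-major storage in a `std::vector<float>`) so that the snippet is self-contained, and `dot_v2` is just an illustrative name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for mat2: row-major storage, zero-initialised.
struct Mat {
    int rows, cols;
    std::vector<float> data;
    Mat(int r, int c)
        : rows(r), cols(c), data(static_cast<std::size_t>(r) * c, 0.0f) {}
};

// Same arithmetic as dot(), but the k and col loops are interchanged, so the
// innermost loop walks one row of m2 and one row of m3 contiguously in memory.
Mat dot_v2(const Mat& m1, const Mat& m2)
{
    assert(m1.cols == m2.rows);  // inner dimensions must agree
    const int m = m1.rows;
    const int n = m1.cols;
    const int p = m2.cols;

    Mat m3(m, p);

    for (int row = 0; row < m; ++row) {
        for (int k = 0; k < n; ++k) {
            const float a = m1.data[n * row + k];  // constant in the inner loop
            for (int col = 0; col < p; ++col) {
                m3.data[p * row + col] += a * m2.data[p * k + col];
            }
        }
    }

    return m3;
}
```

With the original loop order, the innermost loop reads m2 with a stride of p elements. With this order, every access in the innermost loop is to consecutive memory, which is usually friendlier to the cache and easier for the compiler to vectorize.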