I need to perform a million API calls in under an hour (the server can handle this much traffic). To do this, I'm using Node to run multiple requests in parallel, but when I try to run ~1000 concurrent requests I keep getting these errors:

EAI_AGAIN

    { [Error: getaddrinfo EAI_AGAIN google.com:80]
      code: 'EAI_AGAIN',
      errno: 'EAI_AGAIN',
      syscall: 'getaddrinfo',
      hostname: 'google.com',
      host: 'google.com',
      port: 80 }

ECONNRESET

    {
      [Error: read ECONNRESET] code: 'ECONNRESET',
      errno: 'ECONNRESET',
      syscall: 'read'
    }

How can I prevent this from happening without reducing the number of concurrent requests to 500?

Here's a code sample that works perfectly when running 500 requests at a time, but fails when the limit is over 1000. (Your limit may be different).

    "use strict";

    const http = require('http');
    const async = require('async');
    const request = require("request");

    // Infinity is already the default; set explicitly to rule it out.
    http.globalAgent.maxSockets = Infinity;

    let success = 0;
    let error = 0;

    function iterateAsync() {
        // One entry per request; raise 500 to 1000 to reproduce the errors.
        const rows = [];
        for (let i = 0; i < 500; i++) {
            rows.push(i);
        }

        console.time("Requests");

        // async.each starts every request at once (no concurrency limit).
        async.each(
            rows,
            (item, callback) => get(callback),
            (err) => {
                console.log("Failed: " + error);
                console.log("Success: " + success);
                console.timeEnd("Requests");
            });
    }

    function get(callback) {
        request("http://example.com", (err, response, body) => {
            if (err) {
                // Count the failure but never pass it on, so the batch always completes.
                console.log(err);
                error++;
                return callback();
            }
            success++;
            callback();
        });
    }

    iterateAsync();

I added http.globalAgent.maxSockets = Infinity; even though it is the default value.
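To see which of the two errors dominates at a given concurrency, the failures could be tallied by their `code` instead of only being counted. This is a minimal sketch: `tallyByCode` is a made-up helper name, and the sample objects are stand-ins shaped like the errors logged above, not real output.

```javascript
"use strict";

// Count errors by their `code` property (e.g. 'EAI_AGAIN', 'ECONNRESET').
// Errors without a code are grouped under 'UNKNOWN'.
function tallyByCode(errors) {
  const counts = {};
  for (const err of errors) {
    const code = (err && err.code) || "UNKNOWN";
    counts[code] = (counts[code] || 0) + 1;
  }
  return counts;
}

// Stand-in error objects (assumption: real ones would be collected in get()).
const sample = [
  { code: "EAI_AGAIN", syscall: "getaddrinfo" },
  { code: "ECONNRESET", syscall: "read" },
  { code: "EAI_AGAIN", syscall: "getaddrinfo" },
];

console.log(tallyByCode(sample));
```

In the sample above, this would mean pushing each `err` into an array inside `get` and calling the helper in the final callback of `async.each`.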

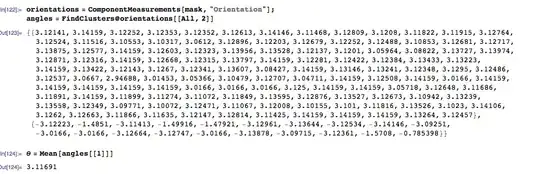

500 requests

1000 requests

Additional info:

I'm running the tests on Ubuntu 14.04 and 15.04, and I changed the default values of file-max, tcp_fin_timeout, tcp_tw_reuse, and ip_local_port_range in /etc/sysctl.conf as described in this post:

- file-max: 100000

- tcp_fin_timeout: 15

- tcp_tw_reuse: 1

- ip_local_port_range: 10000 65000

I also added the following lines to /etc/security/limits.conf:

    * soft nofile 100000
    * hard nofile 100000

With these values, I'm still getting the errors.
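To rule out the possibility that these settings are not actually in effect for the node process (limits.conf is applied at login, so a session opened before the change keeps its old limits), the values can be read back from /proc. This is a rough sketch that assumes a Linux /proc filesystem; `readProcFile` and `parseMaxOpenFiles` are names made up for illustration.

```javascript
"use strict";

const fs = require("fs");

// Read a /proc file and return its trimmed contents, or null if it
// cannot be read (for example on a non-Linux system).
function readProcFile(path) {
  try {
    return fs.readFileSync(path, "utf8").trim();
  } catch (e) {
    return null;
  }
}

// Extract the soft and hard "Max open files" limits from the text of
// /proc/<pid>/limits. Values stay strings because they may be "unlimited".
// Returns null if the line is not present.
function parseMaxOpenFiles(limitsText) {
  const match = /^Max open files\s+(\S+)\s+(\S+)/m.exec(limitsText || "");
  return match ? { soft: match[1], hard: match[2] } : null;
}

// Kernel settings changed in /etc/sysctl.conf, as seen by the running system.
const sysctlPaths = {
  "file-max": "/proc/sys/fs/file-max",
  tcp_fin_timeout: "/proc/sys/net/ipv4/tcp_fin_timeout",
  tcp_tw_reuse: "/proc/sys/net/ipv4/tcp_tw_reuse",
  ip_local_port_range: "/proc/sys/net/ipv4/ip_local_port_range",
};

for (const name of Object.keys(sysctlPaths)) {
  console.log(name + ": " + readProcFile(sysctlPaths[name]));
}

// nofile limits that apply to this node process.
console.log("nofile:", parseMaxOpenFiles(readProcFile("/proc/self/limits")));
```

If the printed nofile values differ from 100000, the limits.conf change has not reached the process running the test.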

I've read all other similar posts:

- httpd - Increase number of concurrent requests

- Thousands of concurrent http requests in node

- Increasing the maximum number of tcp/ip connections in linux

Is there a way I can know the exact number of concurrent requests that my system can manage?
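The only approach I can think of so far is empirical: run batches at increasing sizes and note the last size that finishes with zero failures. A minimal sketch of that idea follows, assuming a Node version with async/await; `failuresAt`, `findCleanLevel`, and the `task` argument are hypothetical names, and `task` is assumed to be any function that returns a Promise for one request.

```javascript
"use strict";

// Start `count` copies of `task` at once and resolve with the number that failed.
// `task` must return a Promise (assumption: e.g. one HTTP request).
function failuresAt(count, task) {
  const attempts = [];
  for (let i = 0; i < count; i++) {
    // Map success to 0 and failure to 1 so the results can be summed.
    attempts.push(task().then(() => 0, () => 1));
  }
  return Promise.all(attempts).then((results) =>
    results.reduce((sum, failed) => sum + failed, 0)
  );
}

// Try each batch size in order and resolve with the largest one that
// completed without a single failure (0 if even the first size failed).
async function findCleanLevel(levels, task) {
  let best = 0;
  for (const level of levels) {
    const failed = await failuresAt(level, task);
    if (failed > 0) {
      break;
    }
    best = level;
  }
  return best;
}
```

For example, `findCleanLevel([250, 500, 1000, 2000], oneRequest)`, where `oneRequest` would be a Promise-returning wrapper around the request call in my sample. This only gives a number for one machine, one target, and one moment, which is why I'm asking whether there is a more exact way.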