I am running a Python script that creates a list of commands to be executed by a compiled (proprietary) program.

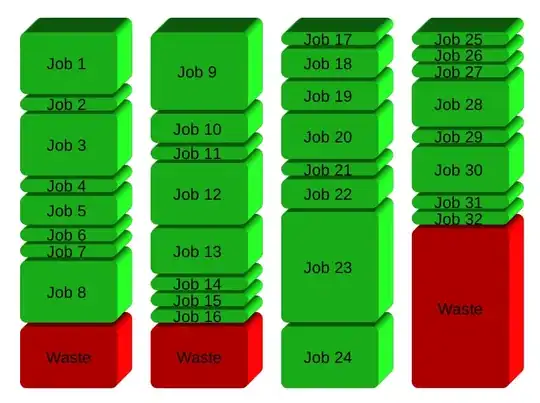

The program can split some of the calculations so that they run independently, and the data is then collected afterwards.

I would like to run these calculations in parallel, as each one is a very time-consuming, single-threaded task and I have 16 cores available.

I am using `subprocess` to execute the commands (inside a class method):

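```python
from subprocess import Popen, PIPE

def run_local(self):
    # text=True lets exec_string be written as a str (Python 3)
    p = Popen(["someExecutable"], stdout=PIPE, stdin=PIPE, text=True)
    p.stdin.write(self.exec_string)
    p.stdin.flush()
    # poll() returns None while the process is still running
    while p.poll() is None:
        line = p.stdout.readline()
        self.log(line)
```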

Here, `self.exec_string` is a single string containing all of the commands.

This string can be split into three parts: an initial part, the part I want to parallelise, and a finishing part.

How should I go about this?
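One possible approach, sketched under some assumptions: each of the three parts is already available as a separate string, and each part can run in its own instance of the executable (for example, because the program writes intermediate results to disk that the finishing part then reads). The names `run_part`, `run_pipeline` and `EXECUTABLE` are hypothetical. The initial part runs first, then up to 16 parallel chunks run at once via `concurrent.futures.ThreadPoolExecutor`, and finally the finishing part runs.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor

# Placeholder command for the proprietary program (hypothetical name).
EXECUTABLE = ["someExecutable"]


def run_part(commands, cmd=EXECUTABLE):
    """Run one block of commands in a fresh process and return its stdout.

    subprocess.run() writes `commands` to stdin, closes stdin so the
    program sees end-of-input, and waits for the process to exit.
    """
    result = subprocess.run(
        cmd, input=commands, capture_output=True, text=True, check=True
    )
    return result.stdout


def run_pipeline(initial, parallel_parts, final, cmd=EXECUTABLE, workers=16):
    """Run `initial`, then all `parallel_parts` concurrently, then `final`."""
    outputs = [run_part(initial, cmd)]
    # Each thread just waits on its own external process, so the GIL is not
    # a bottleneck; at most `workers` processes run at the same time.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in the same order as parallel_parts.
        outputs.extend(pool.map(lambda part: run_part(part, cmd), parallel_parts))
    outputs.append(run_part(final, cmd))
    return outputs
```

Threads (rather than `multiprocessing`) are enough here because the heavy work happens in the external processes. If the program waits for an explicit quit command instead of exiting at end of input, append that command (e.g. `"exit\n"`) to each part before passing it to `run_part`. If the parts share in-memory state inside a single process, they cannot be split this way.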

Also, it seems the executable will "hang" (waiting for a command, e.g. "exit", which releases the memory) if I naively copy-paste the current method for each part.
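A hang like this often means the program is still reading stdin, which is never closed, or is waiting for its quit command. A minimal sketch, assuming `exit` is the program's quit command (as mentioned above) and that it prints its output line by line; `run_streaming` is a hypothetical helper. It appends the quit command if missing, closes stdin after writing, and reads stdout until the process closes it. A separate writer thread avoids a deadlock if the program fills its output pipe before it has consumed all of its input.

```python
import subprocess
import threading


def run_streaming(commands, cmd, log=print, exit_command="exit"):
    """Feed `commands` to `cmd`, make sure it terminates, and log its output."""
    # Ensure the script ends with the quit command (assumed to be "exit"),
    # so the program releases its memory and exits instead of waiting.
    lines = commands.splitlines()
    if not lines or lines[-1].strip() != exit_command:
        lines.append(exit_command)
    script = "\n".join(lines) + "\n"

    with subprocess.Popen(
        cmd, stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True
    ) as p:
        def feed():
            try:
                p.stdin.write(script)
                # Closing stdin sends EOF, so the program cannot block on input.
                p.stdin.close()
            except BrokenPipeError:
                pass  # the program exited before reading everything

        writer = threading.Thread(target=feed)
        writer.start()
        # Iterating stdout reads until the process closes it, so lines printed
        # just before exit are not lost (unlike a poll()-based loop).
        for line in p.stdout:
            log(line.rstrip("\n"))
        writer.join()
    return p.returncode
```

The same helper could be called once per part, for instance from a thread pool, to run the parallel chunks concurrently.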

Bonus: the executable can also run a bash script of commands. Would it be easier (or even possible) to do the parallelisation in bash instead?