I am looking at an example from Agner Fog's manual Optimizing subroutines in assembly language. He tests this loop:

Example 12.6b. DAXPY algorithm, 32-bit mode

```asm
; Register roles as implied by the comments: esi -> X, edi -> Y,
; eax = byte offset, ecx = n*8, xmm2 = {DA, DA}
L1:
    movapd xmm1, [esi+eax]   ; X[i], X[i+1]
    mulpd  xmm1, xmm2        ; X[i] * DA, X[i+1] * DA
    movapd xmm0, [edi+eax]   ; Y[i], Y[i+1]
    subpd  xmm0, xmm1        ; Y[i]-X[i]*DA, Y[i+1]-X[i+1]*DA
    movapd [edi+eax], xmm0   ; Store result
    add    eax, 16           ; Add size of two elements to index
    cmp    eax, ecx          ; Compare with n*8
    jl     L1                ; Loop back
```
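For reference, here is what the loop computes, written as plain Python. It is only a functional model and says nothing about timing. The function name `daxpy_loop` is made up for illustration. It assumes n is even and at least 2, as the packed loop does.

```python
def daxpy_loop(x, y, da):
    """Plain-Python model of the loop: Y[i] = Y[i] - X[i]*DA, two elements per pass.

    Assumption: len(x) == len(y) == n, with n even and n >= 2, matching a
    packed movapd loop that handles one pair per iteration and (like the asm)
    always executes the body at least once.
    Returns the number of loop iterations, which should be n / 2.
    """
    n = len(x)
    if n != len(y) or n < 2 or n % 2:
        raise ValueError("expected two equal-length lists with even length >= 2")
    end = n * 8          # ecx: n*8, the array size in bytes (8 bytes per double)
    offset = 0           # eax: byte offset shared by X and Y
    iterations = 0
    while True:
        i = offset // 8  # element index addressed by [esi+eax] / [edi+eax]
        # mulpd + subpd: both lanes of the pair, product first, then subtraction
        y[i] = y[i] - x[i] * da
        y[i + 1] = y[i + 1] - x[i + 1] * da
        offset += 16     # add eax, 16: two doubles
        iterations += 1
        if offset >= end:  # cmp eax, ecx / jl L1 no longer taken
            break
    return iterations


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 10.0, 10.0, 10.0]
print(daxpy_loop(x, y, 2.0), y)  # 2 [8.0, 6.0, 4.0, 2.0]
```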

He estimates about 6 clock cycles per iteration on a Pentium M. I ran the same loop on my CPU, an Ivy Bridge, and measured 3 cycles per iteration. My calculations on paper, however, say that 2 cycles should be possible. I don't know whether I made a mistake in the theoretical calculation or whether the loop can actually be improved.

What I did:

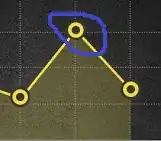

From http://www.realworldtech.com/sandy-bridge/5/ I know that my CPU can retire 4 micro-ops per cycle. Counting fused-domain uops, that gives:

8 fused uops per iteration / 4 uops per cycle = 2 cycles per iteration
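The same arithmetic as a tiny helper. The hypothetical `issue_bound` divides the fused-domain uop count per iteration by the 4-uops-per-cycle width quoted above. It is only a lower bound: execution ports, latencies and the memory subsystem can all make the real loop slower.

```python
from fractions import Fraction


def issue_bound(fused_uops_per_iter, uops_per_cycle=4):
    """Lower bound on cycles per iteration from pipeline width alone.

    Ignores execution ports, latency and memory; it only divides the
    fused-domain uop count by the number of uops handled per cycle.
    """
    return Fraction(fused_uops_per_iter, uops_per_cycle)


print(issue_bound(8))      # 2
print(issue_bound(8 + 1))  # 9/4, i.e. 2.25 with one extra nop
```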

So 2 cycles per iteration is the bottleneck so far. Let's check the other possibilities:

Each of the 8 instructions decodes to a single uop, so the uop pattern is 1-1-1-1-1-1-1-1. According to Agner Fog, my CPU's decoders accept the pattern 1-1-1-1 (among others). So the loop can be decoded in 2 cycles per iteration, which is no worse than the limit above. Moreover, SnB-family CPUs have a decoded-uop cache, so neither fetching nor decoding should be the bottleneck.
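The decode argument can be checked with a small, deliberately simplified model. `decode_cycles` is a hypothetical helper, and the default pattern (1, 1, 1, 1) is the one quoted from Agner Fog above. The model ignores other front-end constraints, such as 16-byte fetch blocks. It also does not model the uop cache, which bypasses the legacy decoders when it hits.

```python
def decode_cycles(uops_per_insn, pattern=(1, 1, 1, 1)):
    """Cycles to decode a sequence of instructions under a simple slot model.

    Simplifying assumption: in each cycle, instructions go to decoder slots
    in program order, and slot j accepts an instruction only if it produces
    at most pattern[j] uops. Fetch-window and length-decode limits are ignored.
    """
    cycles = 0
    slot = len(pattern)  # no open decode group yet
    for uops in uops_per_insn:
        if slot == len(pattern) or uops > pattern[slot]:
            # Start a new decode cycle at the first slot.
            cycles += 1
            slot = 0
            if uops > pattern[0]:
                raise ValueError("instruction too large for this decoder pattern")
        slot += 1
    return cycles


# One uop each, as counted in the question: movapd, mulpd, movapd, subpd,
# movapd, add, cmp, jl
loop = [1] * 8
print(decode_cycles(loop))  # 2
```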

The loop's machine code is 32 bytes, so it fits into one 32-byte uop-cache window.
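Whether 32 bytes of code really sit in a single uop-cache window depends on the loop's start address, because the windows are aligned 32-byte regions. The hypothetical `uop_cache_windows` helper counts how many aligned windows a code range touches. The addresses below are made up for illustration.

```python
def uop_cache_windows(start_addr, size, window=32):
    """Number of aligned `window`-byte code regions touched by [start, start+size)."""
    if size <= 0:
        raise ValueError("size must be positive")
    first = start_addr // window
    last = (start_addr + size - 1) // window
    return last - first + 1


print(uop_cache_windows(0x1000, 32))  # 1: loop starts on a 32-byte boundary
print(uop_cache_windows(0x1010, 32))  # 2: same loop straddles two windows
```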

In my experiments, adding a nop instruction to the loop increases the time per iteration by about 0.5 cycles.

So, the question is:

Where is that ONE cycle? :D