I am training DLIB's shape_predictor for 194 face landmarks using helen dataset which is used to detect face landmarks through face_landmark_detection_ex.cpp of dlib library.

Now it gave me an sp.dat binary file of around 45 MB which is less compared to file given (http://sourceforge.net/projects/dclib/files/dlib/v18.10/shape_predictor_68_face_landmarks.dat.bz2) for 68 face landmarks. In training

- Mean training error : 0.0203811

- Mean testing error : 0.0204511

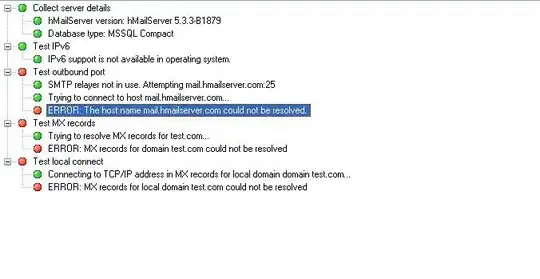

and when I used trained data to get face landmarks position, IN result I got..

which are very deviated from the result got from 68 landmarks

68 landmark image:

Why?