The redefinition(s) of this question makes it hard to deal with; particularly so as some (now removed) criteria would render some solutions to the current problem impractical.

I remember when your data came from multiple CSV files; some containing temperatures and some containing pressures. In fact there was so much data that it could conceivably 'spill' over to another worksheet. This fact alone renders individual worksheet value comparisons impractical. Even if it all fit on a single worksheet, comparing a million datetimes with a second set of a million datetimes and removing entries that do not fit both categories is going to be an arduous and time-consuming task.

Arduous and time-consuming tasks are best processed 'in-memory'. Repeatedly returning to the worksheet(s) to compare values is going to bog down processing and should be avoided unless absolutely necessary.

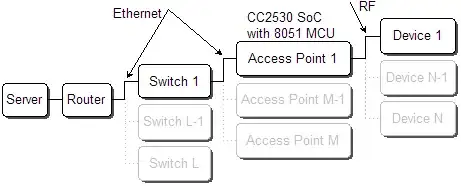

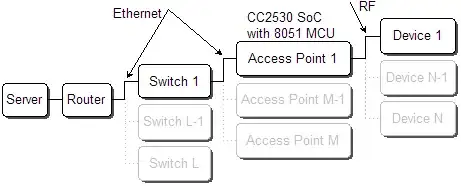

This seems like it should be an SQL question where two different sets of CSVs are loaded into two temporary but consolidated database tables and indexed on their respective datetimes. An INNER JOIN could then be performed to build a third table of matching records. Easy-peasy.

But this is an excel / vba question and should be answered in kind.

A VBA Scripting.Dictionary object is like an in-memory database table and comes with a unique primary 'index' called the key. It also has a single additional 'field' of the variant type which can receive any style of value or values that a variant can. Loading two dictionaries with the respective values (one for temperatures and another for the pressures) using the datetimes as the keys would seem to be the most efficient method of combining the two.

Sample data

I started with several CSVs similar to the following.

Temperaturen-25.csv SPS-25.csv

Three temperature CSVs and three pressure CSVs totalled ~300K records (~150K each) with periods of intentionally missing datetimes from each.

Module2 (Code)

Option Explicit

'public constant dictating the maximum number of entries per worksheet (never set higher than Rows.Count-3)

Public Const iMAXROWS As Long = 50000

Sub main()

Dim fp As String, fn As String, tmp As Variant

Dim dt As Variant, tdic As Object, pdic As Object

Dim tpwb As Workbook, a As Long, d As Long, w As Long

'apptggl btggl:=false 'uncomment this when you have finished debugging

'create 2 dictionary objects to receive ALL of the data

Set tdic = CreateObject("Scripting.Dictionary")

Set pdic = CreateObject("Scripting.Dictionary")

tdic.CompareMode = vbBinaryCompare

pdic.CompareMode = vbBinaryCompare

'load the dictionaries using the overwrite method

fp = Environ("TMP") & Chr(92) & "TempPress"

fn = Dir(fp & Chr(92) & "*.csv", vbNormal)

Do While CBool(Len(fn))

Select Case True

Case LCase(fn) Like "*temperaturen*"

'debug.Print "found " & fn

loadTPDictionary CStr(fp & Chr(92) & fn), tdic, 3

Case LCase(fn) Like "*sps*"

'debug.Print "found " & fn

loadTPDictionary CStr(fp & Chr(92) & fn), pdic, 2

Case Else

'do nothing; not temperature or pressure

End Select

'debug.Print tdic.Count & ":" & pdic.Count

fn = Dir

Loop

'debug.Print tdic.Count

'debug.Print pdic.Count

'At this point you have two dictionary object; one for temps and one for pressures

'They have a unique indexed key on their datetime values

'Time to merge the two

'First load all matching pressures into the temperatures

For Each dt In tdic

If pdic.Exists(dt) Then

tdic.Item(dt) = Array(tdic.Item(dt)(0), tdic.Item(dt)(1), tdic.Item(dt)(2), _

pdic.Item(dt)(1), pdic.Item(dt)(0))

End If

Next dt

'Second, get rid of temps that had no matching pressure

For Each dt In tdic

If UBound(tdic.Item(dt)) < 4 Then

tdic.Remove dt

End If

Next dt

'debug.Print tdic.Count

'debug.Print pdic.Count

'At this point the temp dictionary object contains a merged set of matching temps and pressures

'Time to put the values into one or more worksheets

'create a new target workbook and set up the first target worksheet

Set tpwb = Workbooks.Add

With tpwb

For w = 1 To Int(tdic.Count / iMAXROWS) + 1

a = 1: d = 1

'first load an array with the dictionary's values

ReDim tmp(1 To iMAXROWS, 1 To 5)

For Each dt In tdic

If d > (w * iMAXROWS) Then

Exit For

ElseIf d > ((w - 1) * iMAXROWS) Then

tmp(a, 1) = tdic.Item(dt)(0)

tmp(a, 2) = tdic.Item(dt)(1)

tmp(a, 3) = tdic.Item(dt)(2)

tmp(a, 4) = tdic.Item(dt)(3)

tmp(a, 5) = tdic.Item(dt)(4)

a = a + 1

End If

d = d + 1

Next dt

On Error GoTo bm_Need_Worksheet

With .Worksheets(w + 1) '<~~ ignore the original blank worksheet from the new workbook

'dump the values back into the worksheet

.Cells(2, 1).Resize(UBound(tmp, 1), UBound(tmp, 2)) = tmp

'format the datetimes

.Range("A2:A" & UBound(tmp, 1) + 1 & ",E2:E" & UBound(tmp, 1) + 1).NumberFormat = _

"[Color10]mm/dd/yyyy hh:mm:ss"

.Columns("A:E").AutoFit

End With

'clear the variant array

Erase tmp

Next w

'get rid of the original unprepped worksheet created with the new workbook

.Worksheets(1).Delete

'save as a binary workbook due to size considerations

.SaveAs Filename:=fp & Chr(92) & Format(Date, "\T\P\_yyyymmdd\_") & CLng(Timer), _

FileFormat:=xlExcel12, AddToMru:=True

'close savechanges:=false 'uncomment this after debugging

End With

'we got safely here; skip over worksheet creation

GoTo bm_Safe_Exit

bm_Need_Worksheet:

On Error GoTo 0

With tpwb.Worksheets.Add(After:=Sheets(Sheets.Count))

On Error GoTo bm_Need_Worksheet

.Range("A1:E1") = Array("Date and Time", "Temperature 1", "Temperature 2", _

"Pressure", "Date and Time (p)")

.Name = "Temperaturen & Pressure " & w

With .Parent.Windows(1)

.SplitColumn = 0: .SplitRow = 1

.FreezePanes = True

.Zoom = 75

End With

End With

Resume

bm_Safe_Exit:

'discard the dictionary objects

tdic.RemoveAll: Set tdic = Nothing

pdic.RemoveAll: Set pdic = Nothing

'restore the application environment

appTGGL

End Sub

Sub loadTPDictionary(fpn As String, ByRef dict As Object, flds As Long)

Dim f As Long, v As Long, vVALs As Variant, wb As Workbook

Workbooks.OpenText Filename:=fpn, StartRow:=1, DataType:=xlDelimited, _

ConsecutiveDelimiter:=False, _

Comma:=True, Tab:=False, Semicolon:=False, Space:=False, Other:=False, _

FieldInfo:=IIf(flds = 3, Array(Array(1, 3), Array(2, 1), Array(3, 1)), _

Array(Array(1, 3), Array(2, 1)))

With ActiveWorkbook

With Worksheets(1)

'Debug.Print .Cells(1, 1).Value

vVALs = .Range(.Cells(2, 1), .Cells(Rows.Count, flds).End(xlUp)).Value2

End With

.Close SaveChanges:=False

End With

If flds = 3 Then

For v = LBound(vVALs, 1) To UBound(vVALs, 1)

'fastest load method but overwrites duplicate datetime values with the last temp1, temp2

dict.Item(vVALs(v, 1)) = Array(vVALs(v, 1), vVALs(v, 2), vVALs(v, 3))

Next v

Else

For v = LBound(vVALs, 1) To UBound(vVALs, 1)

'fastest load method but overwrites duplicate datetime values with the last pressure

dict.Item(vVALs(v, 1)) = Array(vVALs(v, 1), vVALs(v, 2))

Next v

End If

Erase vVALs

End Sub

Public Sub appTGGL(Optional bTGGL As Boolean = True)

With Application

.ScreenUpdating = bTGGL

.EnableEvents = bTGGL

.DisplayAlerts = bTGGL

.AutoRecover.Enabled = bTGGL 'no interruptions with an auto-save

.Calculation = IIf(bTGGL, xlCalculationAutomatic, xlCalculationManual)

.CutCopyMode = False

.StatusBar = vbNullString

End With

Debug.Print Timer

End Sub

Refer to the in-code comments to follow the program flow. I seriously advise you to start with a smaller sample data set and work through the code using F8 and ctrl+F8. Set some watches on the vars. I've left many Debug.Print statements in that can be uncommented and their information observed through the VBE's Immediate window.

btw, my default workbooks are created with a single worksheet, not three like the default. You might want to adjust the code to remove everything but a single blank Worksheet Object immediately after the creation of a new target Workbook Object if you still open a new workbook with three blank worksheets. New worksheets are created to receive the data and are formatted on creation appropriately.

Results

While the results were produced quickly enough, I thought ~150K records (~135K after processing) were sufficient for testing. These results were split into multiple worksheets because of the iMAXROWS constant I set at 50K per worksheet.

TP_20160501_65489.xlsb

150K+150K processed into ~140K consolidated records in ~29 seconds.

You might also wish to seriously consider moving to a database solution.

See Highlight Duplicates and Filter by color alternative for pointers on dealing with large worksheets.