I have this code:

    static int test = 100;

    static int Test
    {
        get
        {
            return (int)(test * 0.01f);
        }
    }

Output: 0

But this code returns a different result:

    static int test = 100;

    static int Test
    {
        get
        {
            var y = (test * 0.01f);
            return (int)y;
        }
    }

Output: 1

I also have this code:

    static int test = 100;

    static int Test
    {
        get
        {
            return (int)(100 * 0.01f);
        }
    }

Output: 1

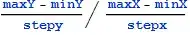

I looked at the IL output, and I don't understand why the C# compiler performs this calculation at compile time and produces a different result.

What is the difference between the first two versions? Why does the result change when I store the product in a variable first?