This seems to be because multiplication of small numbers is optimized in CPython 3.5 in a way that left shifts by small numbers are not. A positive left shift always allocates a larger integer object to hold the result as part of the calculation, while for multiplications of the sort you used in your test, a special optimization avoids this and creates an integer object of the correct size directly. This can be seen in the source code of Python's integer implementation.

Because integers in Python are arbitrary-precision, they are stored as arrays of integer "digits", with a limit on the number of bits per digit. So in the general case, operations involving integers are not single operations, but instead need to handle multiple "digits". In pyport.h, this bit limit is defined as 30 bits on 64-bit platforms, or 15 bits otherwise. (I'll just call this 30 from here on to keep the explanation simple. But note that if you were using Python compiled for 32-bit, your benchmark's result would depend on whether x was less than 32,768.)
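You can check the digit width of your own build from Python. The following is a minimal sketch that assumes CPython: `sys.int_info` and the exact `sys.getsizeof()` behaviour are implementation details, and `digits_needed()` is a hypothetical helper written for this illustration, not something CPython exposes.

```python
import sys

# CPython-specific: sys.int_info describes the internal digit layout.
BITS = sys.int_info.bits_per_digit        # 30 or 15, depending on the build
DIGIT_BYTES = sys.int_info.sizeof_digit   # bytes used to store one digit


def digits_needed(n):
    """Digits needed to hold abs(n) in this model (at least 1, even for 0)."""
    return max(1, -(-abs(n).bit_length() // BITS))


largest_single = (1 << BITS) - 1   # largest value that fits in one digit
smallest_double = 1 << BITS        # smallest value that needs two digits

print("bits per digit:", BITS, "bytes per digit:", DIGIT_BYTES)
print("digits:", digits_needed(largest_single), digits_needed(smallest_double))
# On CPython the object grows by one digit when crossing the boundary.
print("extra bytes:", sys.getsizeof(smallest_double) - sys.getsizeof(largest_single))
```

The printed values depend on the build, which is why no expected output is shown here.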

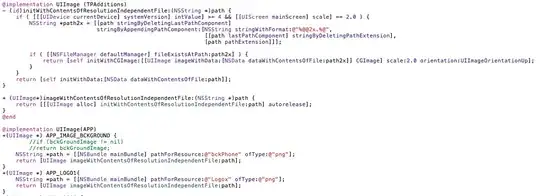

When an operation's inputs and outputs stay within this 30-bit limit, the operation can be handled in an optimized way instead of the general way. The beginning of the integer multiplication implementation is as follows:

    static PyObject *
    long_mul(PyLongObject *a, PyLongObject *b)
    {
        PyLongObject *z;

        CHECK_BINOP(a, b);

        /* fast path for single-digit multiplication */
        if (Py_ABS(Py_SIZE(a)) <= 1 && Py_ABS(Py_SIZE(b)) <= 1) {
            stwodigits v = (stwodigits)(MEDIUM_VALUE(a)) * MEDIUM_VALUE(b);
    #ifdef HAVE_LONG_LONG
            return PyLong_FromLongLong((PY_LONG_LONG)v);
    #else
            /* if we don't have long long then we're almost certainly
               using 15-bit digits, so v will fit in a long.  In the
               unlikely event that we're using 30-bit digits on a platform
               without long long, a large v will just cause us to fall
               through to the general multiplication code below. */
            if (v >= LONG_MIN && v <= LONG_MAX)
                return PyLong_FromLong((long)v);
    #endif
        }

So when multiplying two integers where each fits in a 30-bit digit, this is done as a direct multiplication by the CPython interpreter, instead of working with the integers as arrays. (MEDIUM_VALUE() called on a positive integer object simply gets its first 30-bit digit.) If the result fits in a single 30-bit digit, PyLong_FromLongLong() will notice this in a relatively small number of operations, and create a single-digit integer object to store it.
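As a rough illustration of that size check, here is a small Python model. It only mirrors the condition `Py_ABS(Py_SIZE(a)) <= 1 && Py_ABS(Py_SIZE(b)) <= 1`; the function names are made up for this sketch, and it assumes a CPython build where `sys.int_info` is available.

```python
import sys

BITS = sys.int_info.bits_per_digit  # CPython-specific digit width


def fits_in_one_digit(n):
    """True if abs(n) < 2**BITS, i.e. the int occupies at most one digit."""
    return abs(n).bit_length() <= BITS


def mul_takes_fast_path(a, b):
    """Model of the check at the top of long_mul(): both operands single-digit."""
    return fits_in_one_digit(a) and fits_in_one_digit(b)


def product_fits_in_one_digit(a, b):
    """Whether the result of the multiplication also fits in one digit."""
    return fits_in_one_digit(a * b)


# Both operands are small, so the fast path applies and the result is small too.
print(mul_takes_fast_path(3, 2), product_fits_in_one_digit(3, 2))
# An operand that needs two digits falls through to the general code.
print(mul_takes_fast_path(1 << BITS, 2))
```

Note that the fast path depends only on the operands: multiplying the largest single-digit value by 2 still takes it, even though the product then needs two digits.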

In contrast, left shifts are not optimized this way, and every left shift deals with the integer being shifted as an array. In particular, if you look at the source code for long_lshift(), in the case of a small but positive left shift, a 2-digit integer object is always created, if only to have its length truncated to 1 later: (my comments in /*** ***/)

    static PyObject *
    long_lshift(PyObject *v, PyObject *w)
    {
        /*** ... ***/

        wordshift = shiftby / PyLong_SHIFT;   /*** zero for small w ***/
        remshift  = shiftby - wordshift * PyLong_SHIFT;   /*** w for small w ***/

        oldsize = Py_ABS(Py_SIZE(a));   /*** 1 for small v > 0 ***/
        newsize = oldsize + wordshift;
        if (remshift)
            ++newsize;   /*** here newsize becomes at least 2 for w > 0, v > 0 ***/
        z = _PyLong_New(newsize);

        /*** ... ***/
    }
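To make the size arithmetic concrete, here is a Python model of just those few lines. It is a sketch for positive `v` and non-negative `w`, not a reimplementation of long_lshift(); `digit_count()` and `lshift_sizes()` are hypothetical helpers, and `sys.int_info` is a CPython detail.

```python
import sys

BITS = sys.int_info.bits_per_digit  # plays the role of PyLong_SHIFT


def digit_count(n):
    """Number of digits abs(n) occupies (0 for n == 0)."""
    return -(-abs(n).bit_length() // BITS)


def lshift_sizes(v, w):
    """Return (digits allocated, digits actually needed) for v << w.

    Follows the newsize computation in the excerpt; assumes v > 0, w >= 0.
    """
    wordshift = w // BITS
    remshift = w - wordshift * BITS
    oldsize = digit_count(v)
    newsize = oldsize + wordshift
    if remshift:
        newsize += 1
    return newsize, digit_count(v << w)


print(lshift_sizes(1, 1))     # (2, 1): two digits allocated, one needed
print(lshift_sizes(1, BITS))  # (2, 2): a whole-digit shift allocates exactly enough
```

For a small shift of a small value, the first number is 2 and the second is 1, which is the extra allocation described above.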

Integer division

You didn't ask about the worse performance of integer floor division compared to right shifts, because that fit your (and my) expectations. But dividing a small positive number by another small positive number is not as optimized as small multiplications, either. Every // computes both the quotient and the remainder using the function long_divrem(). This remainder is computed for a small divisor with a multiplication, and is stored in a newly-allocated integer object, which in this situation is immediately discarded.

Or at least, that was the case when this question was originally asked. In CPython 3.6, a fast path for small int // was added, so // now beats >> for small ints too.
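If you want to see which of these cases applies to your interpreter, a small `timeit` comparison along the following lines should do. This is only a sketch: the operand `x = 10`, the repeat counts, and the helper names are arbitrary choices for illustration, and the relative ordering you get will depend on your CPython version and build.

```python
import timeit


def time_expr(expr, x=10, number=200_000, repeat=5):
    """Best-of-`repeat` time in seconds for `number` evaluations of `expr`."""
    # x is passed as a global so the expression is not constant-folded.
    timer = timeit.Timer(expr, globals={"x": x})
    return min(timer.repeat(repeat=repeat, number=number))


def compare(x=10):
    """Time the four small-int operations discussed above."""
    exprs = ["x * 2", "x << 1", "x // 2", "x >> 1"]
    return {expr: time_expr(expr, x) for expr in exprs}


if __name__ == "__main__":
    for expr, seconds in compare().items():
        print(f"{expr:8s} {seconds:.4f} s")
```

Taking the minimum over several repeats reduces noise from other processes; the absolute numbers are not meaningful, only the comparison between the four expressions.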