I have the following PHP script, which executes a MySQL-Query.

$sSql = "SELECT DISTINCT t1.* "

. "FROM import_data t1 "

. "INNER JOIN import_profiles imp on t1.profile_id = imp.id "

. "WHERE imp.creditornr = " . $vendor . " "

. "AND t1.vendor = '" . $k . "' "

. "AND t1.importRun = (SELECT MAX(importRun) AS importRun

FROM import_data

WHERE sku=t1.sku

AND profile_id = t1.profile_id)";

In native SQL, a query looks like this:

SELECT DISTINCT t1.*

FROM import_data t1

INNER JOIN import_profiles imp on t1.profile_id = imp.id

WHERE imp.creditornr = 73329

AND t1.vendor = 'rackmountit'

AND t1.importRun = (SELECT MAX(importRun) AS importRun

FROM import_data

WHERE sku=t1.sku

AND profile_id = t1.profile_id)

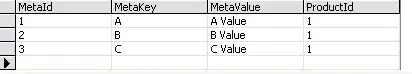

This is the explain of one of those queries: (I run > 10 of those) and the DB currently has ~100.000 entries (rising).

Also, those are the tables which are used in this statements:

I have no idea how, since I'm not that good in mysql, but are there any ideas how to increase the performance of those (sub)queries? Currently they are running > 1:00 and I need to optimize it.

Thanks and let me know, if you need further information.

EDIT 1 Added the CREATE TABLE statements

SET NAMES utf8;

SET time_zone = '+00:00';

CREATE TABLE `import_data` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`profile_id` int(11) NOT NULL,

`sku` varchar(255) NOT NULL,

`vendor` varchar(255) NOT NULL,

`evp` double NOT NULL,

`ek` double NOT NULL,

`articletext1` text NOT NULL,

`articletext2` text NOT NULL,

`ean` text NOT NULL,

`stock` int(11) NOT NULL,

`zolltarif` int(11) NOT NULL,

`tstamp` date NOT NULL,

`importRun` int(11) NOT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=latin1;

CREATE TABLE `import_profiles` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`name` varchar(255) NOT NULL,

`creditornr` int(6) NOT NULL,

`structure` text NOT NULL,

`updatetime` datetime NOT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=latin1 COMMENT='Import-Profile für Vorlieferanten';