I am learning and experimenting with neural networks and would like the opinion of someone more experienced on the following issue:

When I train an autoencoder in Keras ('mean_squared_error' loss function and SGD optimizer), the validation loss gradually goes down and the validation accuracy goes up. So far so good.

However, after a while, the loss keeps decreasing but the accuracy suddenly falls back to a much lower level.

- Is it 'normal' or expected behavior that the accuracy rises very fast, stays high for a while, and then suddenly falls back?

- Should I stop training at the maximum accuracy even if the validation loss is still decreasing? In other words, should I monitor val_acc or val_loss for early stopping?

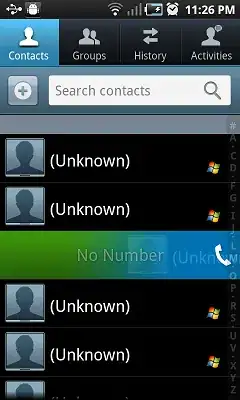

See images:

Loss: (green = val, blue = train)

Accuracy: (green = val, blue = train)

UPDATE: The comments below pointed me in the right direction and I think I understand it better now. It would be nice if someone could confirm that the following is correct:

The accuracy metric measures the percentage of predictions for which y_pred == y_true, and thus only makes sense for classification.
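To convince myself of this, here is a minimal sketch in plain Python. The helper names `exact_match_accuracy` and `mean_squared_error` and the toy values are my own, and the exact-match rule is an assumption for illustration, not necessarily what Keras computes internally for its 'accuracy' metric. It shows that a reconstruction can be very close in the MSE sense and still score poorly on an equality-based accuracy.

```python
def exact_match_accuracy(y_true, y_pred):
    """Fraction of positions where the prediction equals the target exactly."""
    matches = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return matches / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average squared difference between target and prediction."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical real-valued targets and a reconstruction that is close to them.
y_true = [0.25, 0.5, 0.75, 1.0]
y_pred = [0.26, 0.49, 0.76, 1.0]

acc = exact_match_accuracy(y_true, y_pred)  # only the last value matches exactly
mse = mean_squared_error(y_true, y_pred)    # tiny, because every value is close

print("accuracy:", acc)
print("mse:", mse)
```

For real-valued targets, an equality-based metric says almost nothing about how good the reconstruction is, whereas the loss does.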

My data is a combination of real-valued and binary features. The accuracy graph rises very steeply and then falls back, while the loss continues to decrease, because around epoch 5000 the network probably predicted roughly 50% of the binary features correctly. As training continues, around epoch 12000, the prediction of the real-valued and binary features together has improved, hence the decreasing loss, but the prediction of the binary features alone is a little less correct. Therefore the accuracy falls while the loss decreases.
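A toy illustration of this effect, again in plain Python. Everything here is hypothetical: the target vector, the two "snapshots" of predictions, and the rule of thresholding the binary features at 0.5 are my own assumptions, not values from my actual model or the way Keras necessarily computes accuracy. It only shows that MSE over all features can drop while the accuracy on the binary features alone drops too.

```python
def mean_squared_error(y_true, y_pred):
    """Average squared difference over all features (real-valued and binary)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of binary features on the correct side of the threshold."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if (p >= threshold) == (t == 1))
    return hits / len(y_true)


# One sample: the first two features are real-valued, the last four are binary.
target = [2.0, -1.0, 1, 0, 1, 0]
N_REAL = 2  # number of leading real-valued features (assumption of this sketch)

# Early snapshot: real features are far off, binary ones barely on the right side.
early = [1.0, 0.0, 0.6, 0.4, 0.6, 0.4]
# Later snapshot: real features are much better, two binary ones slipped across 0.5.
late = [1.9, -0.9, 0.9, 0.1, 0.45, 0.55]

mse_early = mean_squared_error(target, early)
mse_late = mean_squared_error(target, late)
acc_early = binary_accuracy(target[N_REAL:], early[N_REAL:])
acc_late = binary_accuracy(target[N_REAL:], late[N_REAL:])

print("early: mse =", mse_early, "binary accuracy =", acc_early)
print("late:  mse =", mse_late, "binary accuracy =", acc_late)
```

In this sketch the later snapshot has the lower loss but also the lower accuracy, which matches the pattern in my graphs.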