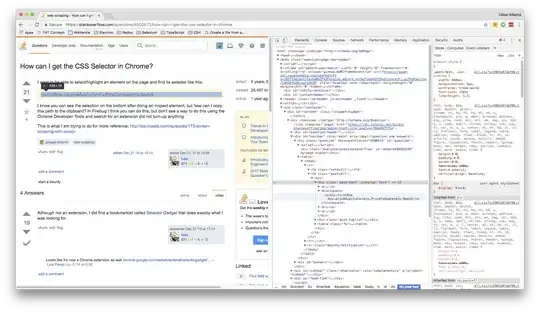

I'd like to create two images from a source RAW image, a Canon CR2 in this case. I've got the RAW conversion sorted and some of the processing. My final images need to be a PNG with an alpha mask and a 95% quality JPG with the alpha area instead filled with black. I've got a test image set here showing how far I've got with detecting the subject:
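For the final step, here is a minimal sketch of producing both outputs at once. It assumes the converted RAW data is an 8-bit RGB NumPy array and the finished mask is a boolean array of the same height and width (`encode_outputs` is a hypothetical helper name). It uses Pillow and encodes both files into in-memory buffers, so nothing touches the disk until the very end:

```python
import io

import numpy as np
from PIL import Image


def encode_outputs(rgb, mask, jpeg_quality=95):
    """Encode an RGBA PNG and a black-filled JPEG from one image and one mask.

    rgb  : (H, W, 3) uint8 array (e.g. the converted RAW data)
    mask : (H, W) bool array, True where the subject is
    Returns (png_bytes, jpeg_bytes); nothing is written to disk here.
    """
    if rgb.dtype != np.uint8:
        raise ValueError("expected 8-bit RGB data; scale 16-bit RAW output first")
    mask = np.asarray(mask, dtype=bool)

    # PNG: append the mask as an alpha channel (255 = opaque subject, 0 = transparent)
    alpha = np.where(mask, 255, 0).astype(np.uint8)
    png_buf = io.BytesIO()
    Image.fromarray(np.dstack([rgb, alpha])).save(png_buf, format="PNG")

    # JPEG: no alpha channel, so the masked-out area is filled with black instead
    black = rgb.copy()
    black[~mask] = 0
    jpg_buf = io.BytesIO()
    Image.fromarray(black).save(jpg_buf, format="JPEG", quality=jpeg_quality)

    return png_buf.getvalue(), jpg_buf.getvalue()
```

Writing the two returned byte strings with `open(path, "wb")` is then the only disk access. As far as I know Pillow has no 16-bit-per-channel RGB mode, which is why the sketch insists on `uint8` input.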

https://i.stack.imgur.com/zaAx4.jpg

So basically, as you can see, I want to isolate the subjects from the grey background. I also want to mask out any shadows cast on the grey background as much as possible, ideally entirely. I'm using a Python 2 script I've written, mostly with scikit-image so far. I would swap to another Python-compatible image processing library if required. Also, I need to do all steps in memory so that I only save once, at the end of all image processing, with the PNG and then the JPG. So no subprocess.Popen etc.

You'll see from the sample images that, I think at least, I've already got some way towards a solution. I've used scikit-image and its Canny edge detector for the images in my examples.
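For reference, a sketch of the Canny step in scikit-image. `skimage.feature.canny` works on a 2-D image, so the RGB data is converted to grey first. `sigma` and the two hysteresis thresholds are placeholder values to tune, not values taken from the question:

```python
import numpy as np
from skimage import color, feature


def canny_edges(rgb, sigma=2.0, low_threshold=None, high_threshold=None):
    """Boolean Canny edge map of an RGB image (sketch; parameters need tuning)."""
    gray = color.rgb2gray(rgb)  # float grey image in [0, 1]
    # Larger sigma = more smoothing: fewer edges from background texture and
    # sensor noise, but softer, less precise subject outlines.
    return feature.canny(gray, sigma=sigma,
                         low_threshold=low_threshold,
                         high_threshold=high_threshold)
```

With `None` thresholds, scikit-image picks its own defaults; passing `use_quantiles=True` to `canny` is another option if the exposure varies a lot between shots.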

What I need to do now is figure out how to fill the subject in the Canny images with white so that I get a proper solid white mask. In most of my example images, with the Canny filter applied, the subjects themselves appear to have good edge detection, usually with one major unbroken border. But I'm guessing I might get some images in the future where this does not happen and there are small breaks in the major border. I need to handle that if it looks like it will be an issue for later processing steps.
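One common way to get from an outline to a solid mask, sketched with `scipy.ndimage` and assuming the edge map is a boolean array: dilate the edges so small breaks in the border join up, fill every enclosed region with `binary_fill_holes`, then erode by the same amount to shrink the outline back. `gap_radius` is a hypothetical tuning parameter; breaks up to roughly twice that width should get bridged:

```python
import numpy as np
from scipy import ndimage as ndi


def edges_to_filled_mask(edges, gap_radius=3):
    """Turn a boolean edge map into a solid mask (sketch).

    edges      : (H, W) bool array, e.g. from skimage.feature.canny
    gap_radius : breaks in the outline up to ~2 * gap_radius px get bridged
    """
    # Disk-shaped structuring element of the given radius
    y, x = np.ogrid[-gap_radius:gap_radius + 1, -gap_radius:gap_radius + 1]
    disk = x * x + y * y <= gap_radius * gap_radius

    thick = ndi.binary_dilation(edges, structure=disk)  # join nearby fragments
    filled = ndi.binary_fill_holes(thick)               # fill enclosed regions
    return ndi.binary_erosion(filled, structure=disk)   # undo the thickening
```

A plain `binary_closing` looks like the obvious tool, but on a 1-pixel-wide Canny line a disk can poke into a small gap from both sides and leave it open, which is why the fill happens between the dilation and the erosion. The erosion treats pixels outside the frame as background, so a subject touching the frame loses a few pixels along that side, which fits the "mask too much rather than too little" rule.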

Also, I'm wondering whether I need to pad the image by one pixel on every side, set that border to the same color as my (0, 0) pixel (i.e. the top-left pixel, which is background), run my Canny filter, and then crop the 1 px border off again. Would this allow the bottom edge to be detected, and handle subjects that break the top or sides of the frame?
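A sketch of the padding idea. One detail, as far as I can tell from scikit-image's implementation: `feature.canny` always leaves the outermost row and column of its output empty, so an outline where the subject meets the frame can never close by itself. Because Canny also smooths with a Gaussian, this sketch pads by a few `sigma` rather than exactly 1 px, and after cropping it folds any edge pixels found in the padding back onto the frame row or column. It assumes pixel (0, 0) is background and that the grey background is fairly even (vignetting would make the pad seam show up as an edge):

```python
import numpy as np
from skimage import feature


def canny_with_padded_border(gray, sigma=2.0, pad=None):
    """Canny on a 2-D grey image padded with its own background value (sketch).

    Assumes gray[0, 0] is background. A subject that touches the frame gets
    an edge along the frame instead of an outline left open there.
    """
    if pad is None:
        # A few sigma, so the subject's edge stays well away from the
        # padded image's own border
        pad = int(np.ceil(3 * sigma)) + 1
    pad = max(1, pad)
    background = gray[0, 0]
    padded = np.pad(gray, pad, mode="constant", constant_values=background)
    edges = feature.canny(padded, sigma=sigma)

    # Crop back to the original size...
    out = edges[pad:-pad, pad:-pad].copy()
    # ...then fold edge pixels that landed in the padding onto the nearest
    # frame row/column, so outlines that run off the frame stay closed.
    out[0, :] |= edges[:pad, pad:-pad].any(axis=0)
    out[-1, :] |= edges[-pad:, pad:-pad].any(axis=0)
    out[:, 0] |= edges[pad:-pad, :pad].any(axis=1)
    out[:, -1] |= edges[pad:-pad, -pad:].any(axis=1)
    return out
```

Padding with `mode="edge"` would not work here: it replicates the subject outward, so no edge forms at the frame.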

So really I'm just looking for advice on where to go next to get a nice solid mask. It needs to stay binary (i.e. everything outside the main subject must be masked to 0). This means that at some point, probably as the last step, I'll need to find isolated islands of pixels below a certain size (e.g. 50 px or so) and add them to the mask.
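For the island clean-up, a minimal sketch with `scipy.ndimage.label`, which gives each 8-connected blob its own integer label so blob sizes can be counted with `np.bincount`. `drop_small_islands` is a hypothetical name, and 50 px is the figure from the question:

```python
import numpy as np
from scipy import ndimage as ndi


def drop_small_islands(mask, min_pixels=50):
    """Set connected foreground blobs smaller than min_pixels to 0 (sketch)."""
    mask = np.asarray(mask, dtype=bool)
    structure = np.ones((3, 3), dtype=bool)  # 8-connectivity
    labels, n = ndi.label(mask, structure=structure)
    if n == 0:
        return mask.copy()
    sizes = np.bincount(labels.ravel())      # sizes[0] counts the background
    keep = sizes >= min_pixels
    keep[0] = False                          # background stays masked
    return keep[labels]                      # look up each pixel's blob
```

scikit-image also offers `skimage.morphology.remove_small_objects` for the same job. If there is only ever one subject, replacing the `keep = ...` line with `keep = sizes == sizes[1:].max()` keeps just the largest blob, which is stricter.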

Also, overall, the rule of thumb is that it's better for a little of the subject to be masked than for some of the background to be left unmasked (i.e. I want all, or as much as possible, of the background/shadow areas masked).
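Given that rule of thumb, one cheap way to bias the result towards masking background is to erode the final mask by a small margin, so the soft fringe and any shadow hugging the subject's edge get masked too. A sketch, with `margin` as an assumed tuning value; `border_value=1` stops pixels from being eaten away where the subject runs off the frame:

```python
import numpy as np
from scipy import ndimage as ndi


def shrink_mask(mask, margin=2):
    """Erode a boolean subject mask by `margin` pixels (sketch).

    border_value=1 treats everything outside the frame as subject, so a
    subject that runs off the edge is not eroded from the frame side.
    """
    mask = np.asarray(mask, dtype=bool)
    if margin <= 0:
        return mask.copy()
    y, x = np.ogrid[-margin:margin + 1, -margin:margin + 1]
    disk = x * x + y * y <= margin * margin
    return ndi.binary_erosion(mask, structure=disk, border_value=1)
```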

I've tried a few things, but I'm not quite getting there. I'm thinking something along the lines of find_contours in scikit-image might help, but I can't quite see from the scikit-image examples how I'd pick a detected contour and then turn it into a mask. I've spent quite a bit of time experimenting without success today, so I thought I'd ask here and see if anyone has any better ideas.
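On the find_contours question: `skimage.measure.find_contours` returns a list of (N, 2) arrays of (row, column) coordinates, and `skimage.draw.polygon` can rasterise one of them into a mask. The sketch below picks the longest contour, which is only a heuristic for "the subject outline". Run on a closed Canny outline (as floats, level 0.5), the longest contour should be the outside of that outline, so filling it gives the solid subject. If the outline is broken, the contour just traces around the thin line and the fill is useless, so small breaks still need bridging first; contours that hit the image edge are also left open, so padding first helps:

```python
import numpy as np
from skimage import draw, measure


def largest_contour_mask(image, level=0.5):
    """Fill the longest iso-contour of `image` into a boolean mask (sketch)."""
    contours = measure.find_contours(np.asarray(image, dtype=float), level)
    mask = np.zeros(np.shape(image), dtype=bool)
    if not contours:
        return mask
    contour = max(contours, key=len)  # heuristic: longest outline = subject
    rr, cc = draw.polygon(contour[:, 0], contour[:, 1], shape=mask.shape)
    mask[rr, cc] = True
    return mask
```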

This is an OpenCV-based method that looks promising:

I'd like to stick with scikit-image or some other NumPy-compatible image library for Python if possible. However, if it's easier and faster with OpenCV or another library, I'm open to ideas so long as I can stick with Python.

Also worth bearing in mind: for my application I will always have an image of the background without a subject, so maybe I should be pursuing that route. The problem is that I don't think a simple difference approach deals very well with shadows. It seems to me that some sort of edge detection is required at some point for a better masking approach.
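Since a clean background plate is always available, here is a sketch of background differencing with a simple shadow test, loosely following the brightness/colour-distortion idea used in shadow-aware background subtraction: a shadow on the grey card mostly scales the background's brightness down without changing its colour, whereas a subject usually changes the colour as well. All three thresholds are assumptions to tune, and both images are assumed to be aligned float RGB arrays in [0, 1]:

```python
import numpy as np


def background_subtract(img, bg, diff_thresh=0.08, shadow_min=0.4,
                        chroma_thresh=0.04):
    """Foreground mask from an image and a clean background plate (sketch)."""
    img = np.asarray(img, dtype=float)
    bg = np.asarray(bg, dtype=float)
    eps = 1e-6
    # Brightness distortion: the factor that best scales bg onto img per pixel
    alpha = (img * bg).sum(axis=2) / ((bg * bg).sum(axis=2) + eps)
    # Colour distortion: what is left after that brightness scaling
    chroma = np.linalg.norm(img - alpha[..., None] * bg, axis=2)

    changed = np.linalg.norm(img - bg, axis=2) > diff_thresh
    # Shadow: darker than the plate, but not too dark, and the same colour
    shadow = (alpha >= shadow_min) & (alpha < 1.0) & (chroma < chroma_thresh)
    return changed & ~shadow
```

The obvious weakness is a neutral grey subject, which looks exactly like a shadow to this test, so it may work best combined with an edge-based mask rather than instead of one.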

"Source 1"

"Source 2"

"Source 3"

"Result 1"

"Result 2"

"Result 3"