I have a MySQL database and I want to insert about 40,000 rows into it from PHP code, but my code takes more than 15 minutes to insert the rows. Is there any way to optimize it? Where is the problem: the PHP code or the database design?

Here are the details:

- The row data is stored in a UTF-8 text file. The values are separated by a tab character ("\t") and every row is on its own line of the file, like this:

string view:

"value1\tvalue2\tvalue3\tvalue4\tvalue5\r\nvalue1\tvalue2\tvalue3\tvalue4\tvalue5\r\nvalue1\tvalue2\tvalue3\tvalue4\tvalue5\r\nvalue1\tvalue2\tvalue3\tvalue4\tvalue5\r\n"

text reader view:

value1 value2 value3 value4 value5

value1 value2 value3 value4 value5

value1 value2 value3 value4 value5

value1 value2 value3 value4 value5
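To make the file layout concrete, here is a minimal sketch of splitting one such line into its five values. The function name `parseImportLine` is hypothetical, and the check for exactly five fields is an assumption based on the sample above.

```php
<?php
// Hypothetical helper: split one tab-separated line into its five values.
// Returns null for blank or malformed lines (assumption: 5 fields per row).
function parseImportLine(string $line): ?array
{
    // Strip the trailing "\r\n" so the last value does not carry it.
    $line = rtrim($line, "\r\n");
    if ($line === '') {
        return null; // blank line, e.g. after the final "\r\n" in the file
    }
    $fields = explode("\t", $line);
    return count($fields) === 5 ? $fields : null;
}
```

Without the `rtrim`, the last value of each row would still contain the "\r\n" line ending when it reaches the query.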

- The database has 3 tables:

table1 countries, 1 field: (NAME varchar, primary key)

table2 products, 2 fields: (HS varchar, primary key; NAME varchar)

table3 imports, 6 fields: (product_hs varchar, foreign key -> products(HS); counteryname varchar, foreign key -> countries(NAME); year year; units int; weight int; value int)

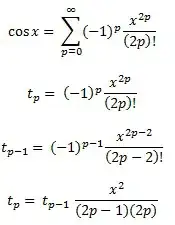

- The PHP code looks like this:

    $conn = new mysqli($hn, $un, $pw, $db);
    if ($conn->connect_error) { die($conn->connect_error); }

    $x = 0; // row counter
    ini_set('max_execution_time', 3000);

    while (!feof($filehandle)) {
        $x++;
        echo $x . ": ";
        $fileline = fgets($filehandle);
        $fields = explode("\t", $fileline);
        $query = "INSERT INTO imports(product_hs,counteryname,year,units,weight,value) VALUES(" . "'" . $fields[0] . "','" . $fields[1] . "','2014','" . $fields[2] . "','" . $fields[3] . "','" . $fields[4] . "');";
        $result = $conn->query($query);
        if (!$result) {
            echo $conn->error . "</br>";
        } else {
            echo $result . "</br>";
        }
    }

At first I thought it was an index problem slowing down the insertion, so I removed all the indexes from the "imports" table, but it didn't get any faster. Is the problem in the database design or in my PHP code?
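One thing that might be worth testing on the PHP side is sending many rows per statement instead of one query per row. The sketch below only builds the statement text and its parameter list. `buildImportInsert` is a hypothetical name, and whether per-row queries are really the bottleneck here is an assumption, not something established above.

```php
<?php
// Hypothetical helper: build one parameterised INSERT covering many rows.
// Each element of $rows is [product_hs, counteryname, units, weight, value].
// Returns [sql string, flat list of parameters].
function buildImportInsert(array $rows, string $year = '2014'): array
{
    $placeholders = [];
    $params = [];
    foreach ($rows as $row) {
        $placeholders[] = '(?,?,?,?,?,?)';
        // The year is a fixed value in the original query, so it is added here.
        array_push($params, $row[0], $row[1], $year, $row[2], $row[3], $row[4]);
    }
    $sql = 'INSERT INTO imports(product_hs,counteryname,year,units,weight,value) VALUES '
         . implode(',', $placeholders);
    return [$sql, $params];
}
```

The result could then be passed to `$conn->prepare($sql)` and bound with `$stmt->bind_param(str_repeat('s', count($params)), ...$params)`, in chunks of a few hundred rows (for example via `array_chunk`), possibly inside `$conn->begin_transaction()` and `$conn->commit()`. The chunk size is a guess and would need measuring.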

Also note that the browser shows "waiting for response from the server" for the first 5 minutes, and then for most of the remaining time it shows "transferring data from server". Is this because the response HTML has more than 40,000 lines from the row counter (1: 1</br> 2: 1</br> ..., echoed in the PHP code)?

Please bear in mind that I'm a complete beginner. Thanks.
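As a rough check on the size of that response, the sketch below adds up the bytes echoed by the loop, assuming every insert succeeds and therefore echoes "1" after the counter. `responseSizeBytes` is a hypothetical name used only for this estimate.

```php
<?php
// Hypothetical estimate: total bytes echoed by the loop for $rows rows,
// assuming each row prints "<counter>: 1</br>" (i.e. every insert succeeds).
function responseSizeBytes(int $rows): int
{
    $total = 0;
    for ($x = 1; $x <= $rows; $x++) {
        $total += strlen($x . ": " . "1" . "</br>");
    }
    return $total;
}
```

For 40,000 rows this comes to about 0.5 MB, so the amount of HTML by itself looks modest. Error messages would make individual lines longer.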