Goal: Implement the diagram shown below in OpenCL. The main thing I need from the OpenCL kernel is to multiply the coefficient array by the temp array, element by element, and then accumulate all of those products into a single value. (That is probably the most time-intensive operation, so parallelism would be really helpful here.)

I am using a helper function, called from the kernel, that does the multiplication and addition (I am hoping this function will run in parallel as well).

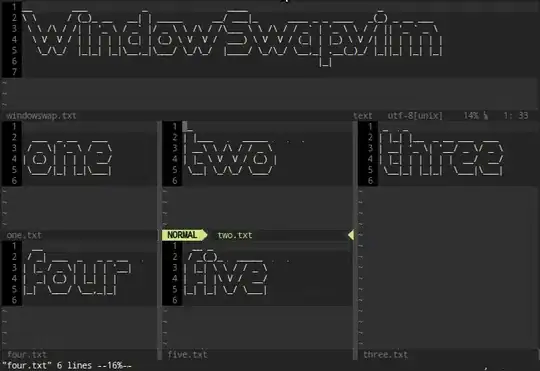

Description of the picture:

The input values are passed one at a time into an array (the temp array) that is the same size as the coefficient array. Every time a new value is passed into this array, the temp array is multiplied element by element with the coefficient array in parallel, and the products at each index are then summed into one single output element. This continues until the input array reaches its final element.
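To pin down what the diagram computes, here is a minimal sequential sketch in plain C (not OpenCL). The names `fir_step` and `fir_filter_sequential` and the parameter `num_taps` are hypothetical and do not appear in my kernel; the sketch assumes the temp array starts out as all zeros and that the newest sample always sits at index 0.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Push one sample into the delay line (the "temp array") and return
 * the sum of coefficient * sample products. Hypothetical helper. */
float fir_step(float *delay_line, const float *coefficients,
               size_t num_taps, float input_value)
{
    assert(num_taps > 0);

    /* Shift right by one: element i-1 moves to i. memmove is safe
     * for overlapping source and destination ranges. */
    memmove(delay_line + 1, delay_line, (num_taps - 1) * sizeof(float));
    delay_line[0] = input_value;

    /* Multiply element by element and accumulate into one value. */
    float output = 0.0f;
    for (size_t i = 0; i < num_taps; i++) {
        output += coefficients[i] * delay_line[i];
    }
    return output;
}

/* Feed the whole input through the delay line, one sample at a time. */
void fir_filter_sequential(const float *input, float *output,
                           size_t num_samples, const float *coefficients,
                           float *delay_line, size_t num_taps)
{
    memset(delay_line, 0, num_taps * sizeof(float));
    for (size_t n = 0; n < num_samples; n++) {
        output[n] = fir_step(delay_line, coefficients, num_taps, input[n]);
    }
}
```

Feeding a single 1 followed by zeros through this sketch returns the coefficients themselves, one per output, which is a quick way to check any faster version against it.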

What happens with my code?

For 60 elements from the input, it takes over 8000 ms, and I have a total of 1.2 million inputs that still have to be passed in. I am sure there is a much better way to do what I am attempting. Here is my code below.

Here are some things that I know for sure are wrong with the code. When I try to multiply the coefficient values with the temp array, it crashes. I believe this is because of the global_id. All I want this line to do is multiply the two arrays in parallel.

I tried to figure out why the FIFO function was taking so long, so I started commenting lines out. I began by commenting out everything except the first for loop of the FIFO function, and that version took 50 ms. When I uncommented the next loop, it jumped to 8000 ms. So the delay seems to come from copying the data back into the temp array.

Is there a register shift that I could use in OpenCL? Perhaps some logical shifting method for integer arrays? (I know there is a '>>' operator.)
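As far as I understand it, `>>` shifts the bits inside a single integer and does not move elements along an array, so it would not act as a FIFO by itself. One alternative I have seen for avoiding the element-by-element copy is a circular (ring) buffer, where only an index moves. Below is a minimal sketch in plain C under that assumption; the `ring_fifo` struct and `ring_fifo_step` function are hypothetical names, and the caller is assumed to zero the storage first.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical ring-buffer state standing in for the shifted temp array. */
typedef struct {
    float *samples;   /* storage for num_taps samples, zeroed by the caller */
    size_t num_taps;  /* same length as the coefficient array */
    size_t head;      /* index of the newest sample */
} ring_fifo;

/* Insert one sample without moving any existing data, then return
 * the sum of coefficients[k] * (sample that is k steps old). */
float ring_fifo_step(ring_fifo *fifo, const float *coefficients,
                     float input_value)
{
    assert(fifo->num_taps > 0);

    /* Step the head back one slot with wraparound; that slot holds
     * the oldest sample, which is overwritten by the new one. */
    fifo->head = (fifo->head + fifo->num_taps - 1) % fifo->num_taps;
    fifo->samples[fifo->head] = input_value;

    float output = 0.0f;
    size_t index = fifo->head;
    for (size_t k = 0; k < fifo->num_taps; k++) {
        output += coefficients[k] * fifo->samples[index];
        index = (index + 1) % fifo->num_taps;  /* walk towards older samples */
    }
    return output;
}
```

This replaces the two 58-element copy loops with a single index update per input value, at the cost of a modulo in the accumulation loop.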

float constant temp[58];

float constant tempArrayForShift[58];

float constant multipliedResult[58];

float fifo(float inputValue, float *coefficients, int sizeOfCoeff) {

//take array of 58 elements (or same size as number of coefficients)

//shift all elements to the right one

//bring next element into index 0 from input

//multiply the coefficient array with the array that's the same size as the coefficients and accumulate

//store into one output value of the output array

//repeat till input array has reached the end

int globalId = get_global_id(0);

float output = 0.0f;

//Shift everything down from 1 to 57

//takes about 50ms here

for(int i=1; i<58; i++){

tempArrayForShift[i] = temp[i - 1];

}

//Input the new value passed from main kernel. Rest of values were shifted over so element is written at index 0.

tempArrayForShift[0] = inputValue;

//Takes about 8000ms with this loop included

//Write values back into temp array

for(int i=0; i<58; i++){

temp[i] = tempArrayForShift[i];

}

//all 58 elements of the coefficient array and temp array are multiplied at the same time and stored in a new array

//I am 100% sure this line is crashing the program.

//multipliedResult[globalId] = coefficients[globalId] * temp[globalId];

//Sum the temp array with each other. Temp array consists of coefficients*fifo buffer

for (int i = 0; i < 58; i ++) {

// output = multipliedResult[i] + output;

}

//Returned summed value of temp array

return output;

}

__kernel void lowpass(__global float *Array, __global float *coefficients, __global float *Output) {

//Initialize the temporary array values to 0

for (int i = 0; i < 58; i ++) {

temp[i] = 0;

tempArrayForShift[i] = 0;

multipliedResult[i] = 0;

}

//fifo adds one element in and calls the fifo function. ALL I NEED TO DO IS SEND ONE VALUE AT A TIME HERE.

for (int i = 0; i < 60; i ++) {

Output[i] = fifo(Array[i], coefficients, 58);

}

}

I have had this problem with OpenCL for a long time. I am not sure how to combine parallel and sequential instructions.
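One way I could imagine removing the sequential part altogether: each output only depends on the current input and the previous ones, so output `n` can be written directly as the sum over `k` of `coefficients[k] * input[n - k]`, with no shared FIFO at all. The sketch below is plain C, not a tested OpenCL kernel; `fir_output_at` and `fir_filter_independent` are hypothetical names, and inputs before index 0 are assumed to be zero. In a kernel, `n` would presumably come from `get_global_id(0)` and the outer loop would be replaced by the global work size.

```c
#include <assert.h>
#include <stddef.h>

/* The work a single work-item would do: compute output sample n
 * from the read-only input, touching no state shared with other n. */
float fir_output_at(const float *input, const float *coefficients,
                    size_t num_taps, size_t n)
{
    assert(num_taps > 0);

    float sum = 0.0f;
    /* k <= n skips samples before the start of the input,
     * which are treated as zero (same as a zeroed temp array). */
    for (size_t k = 0; k < num_taps && k <= n; k++) {
        sum += coefficients[k] * input[n - k];
    }
    return sum;
}

/* Host-side stand-in for launching one work-item per output sample. */
void fir_filter_independent(const float *input, float *output,
                            size_t num_samples, const float *coefficients,
                            size_t num_taps)
{
    for (size_t n = 0; n < num_samples; n++) {
        output[n] = fir_output_at(input, coefficients, num_taps, n);
    }
}
```

Because every iteration of the outer loop is independent, the 1.2 million outputs could in principle be computed in any order, which is the property a parallel launch needs.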

Another alternative I was thinking about

In the main .cpp file, I was thinking of implementing the FIFO buffer there and having the kernel do only the multiplication and addition. But this would mean I would have to call the kernel 1000+ times in a loop. Would this be the better solution, or would it just be completely inefficient?