I'm trying to implement image morphology operators in C++ using OpenMP and OpenCV. The algorithm works fine, but when I look at the profiling results from VTune, I see that the parallelized method takes more time than the sequential one, and that most of the time is spent in OpenCV's .at() function. Why? How can I solve it?

Here's my code:

    Mat Morph_op_manager::compute_morph_base_op(Mat image, bool parallel, int type) {
        // strel attributes
        int strel_rows = 5;
        int strel_cols = 5;

        // strel center coordinates
        int cr = 2;
        int cc = 2;

        // number of rows and columns after the strel center
        int nrac = strel_rows - cr;
        int ncac = strel_cols - cc;

        // strel init
        Mat strel(strel_rows, strel_cols, CV_8UC1, Scalar(0));
        Mat op_result = image.clone();

        if (parallel == false)
            omp_set_num_threads(1); // use 1 thread for all subsequent parallel regions

        // parallelized nested loop
        #pragma omp parallel for collapse(4)
        for (int i = cr; i < image.rows - nrac; i++)
            for (int j = cc; j < image.cols - ncac; j++) {
                for (int m = 0; m < strel_rows; m++)
                    for (int n = 0; n < strel_cols; n++) {
                        // type == 0 -> erode
                        if (type == 0) {
                            if (image.at<uchar>(i - (strel_rows - m), j - (strel_cols - n)) != strel.at<uchar>(m, n)) {
                                op_result.at<uchar>(i, j) = 255;
                            }
                        }
                        // type == 1 -> dilate
                        if (type == 1) {
                            if (image.at<uchar>(i - (strel_rows - m), j - (strel_cols - n)) == strel.at<uchar>(m, n)) {
                                op_result.at<uchar>(i, j) = 0;
                            }
                        }
                    }
            }

        return op_result;
    }

Here's the profiling result:

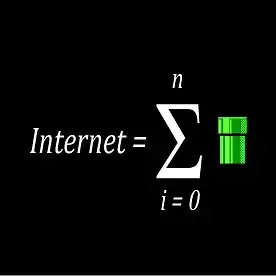
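
For comparison, here is a minimal sketch of row-level parallelism with raw row pointers, written without OpenCV so that it stands alone. `Gray`, `row` and `erode_rows_parallel` are hypothetical names, and the sketch assumes a square all-ones window centred on each pixel with 255 as foreground, which may differ from the convention in the code above. The `collapse(4)` directive above flattens all four loops into one iteration space, so the runtime has to recover `i`, `j`, `m` and `n` for every tiny iteration, which can add overhead. In the sketch only the row loop is split, so each thread owns whole output rows.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a single-channel 8-bit image (row-major storage).
struct Gray {
    int rows, cols;
    std::vector<std::uint8_t> px;
    const std::uint8_t* row(int r) const { return px.data() + static_cast<std::size_t>(r) * cols; }
    std::uint8_t* row(int r) { return px.data() + static_cast<std::size_t>(r) * cols; }
};

// Binary erosion with a k x k all-ones window centred on (i, j).
// Assumption: 255 is foreground; a pixel stays 255 only if the whole window is 255.
// Border pixels (closer than k/2 to an edge) are left at 0.
Gray erode_rows_parallel(const Gray& in, int k) {
    const int c = k / 2;
    Gray out{in.rows, in.cols, std::vector<std::uint8_t>(in.px.size(), 0)};

    // Only the outer row loop is shared between threads.
    // Without OpenMP enabled the pragma is ignored and the loop runs serially.
    #pragma omp parallel for schedule(static)
    for (int i = c; i < in.rows - c; ++i) {
        std::uint8_t* dst = out.row(i);          // one pointer lookup per output row
        for (int j = c; j < in.cols - c; ++j) {
            bool fits = true;
            for (int m = -c; m <= c && fits; ++m) {
                const std::uint8_t* src = in.row(i + m);
                for (int n = -c; n <= c; ++n) {
                    if (src[j + n] != 255) { fits = false; break; }
                }
            }
            dst[j] = fits ? 255 : 0;
        }
    }
    return out;
}
```

The image is taken by `const` reference and every output element is written by exactly one thread, so no synchronisation is needed inside the loop.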

SPEED-UP:

Instead of using the **.at()** method, I now access the pixel matrix through row pointers, and I changed my OpenMP directive as shown in the code below.

One problem persists: in my profiling log, Mat::release() takes a lot of time. Why, and how can I solve it?

Speed-up code:

    omp_set_num_threads(4);
    double start_time = omp_get_wtime();

    #pragma omp parallel for shared(strel,image,op_result,strel_el_count) private(i,j) schedule(dynamic) if(parallel == true)
    for (i = cr; i < image.rows - nrac; i++)
    {
        op_result.addref();
        uchar* opresult_ptr = op_result.ptr<uchar>(i);
        for (j = cc; j < image.cols - ncac; j++)
        {
            // type == 0 --> erode
            if (type == 0) {
                if (is_fullfit(image, i, j, strel, strel_el_count, parallel)) {
                    opresult_ptr[j] = 0;
                }
                else
                    opresult_ptr[j] = 255;
            }
        }
    }

Here's the is_fullfit function:

    bool Morph_op_manager::is_fullfit(Mat image, int i, int j, Mat strel, int strel_counter, bool parallel) {
        int mask_counter = 0;
        int ii = 0;
        int jj = 0;

        for (ii = 0; ii < strel.rows; ii++) {
            uchar* strel_ptr = strel.ptr<uchar>(ii);
            uchar* image_ptr = image.ptr<uchar>(i - (strel.rows - ii));
            for (jj = 0; jj < strel.cols; ++jj) {
                mask_counter += (int) image_ptr[j - (strel.cols - jj)];
            }
        }

        return mask_counter == strel_counter;
    }
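
One possible source of the time in Mat::release(), offered as an assumption rather than something the profile proves: is_fullfit receives `image` and `strel` by value, so each call copies two Mat headers. Copying a cv::Mat does not copy the pixels, but it increments a shared reference counter, and destroying the copy calls release() to decrement it again. The sketch below imitates that behaviour with `std::shared_ptr` instead of OpenCV. `SharedImage`, `owners_by_value` and `owners_by_ref` are hypothetical names used only for illustration.

```cpp
#include <cstdint>
#include <memory>
#include <vector>

// Hypothetical stand-in for a reference-counted image header (not cv::Mat itself).
struct SharedImage {
    int rows, cols;
    std::shared_ptr<std::vector<std::uint8_t>> data;  // shared pixel buffer
};

// By value: the header is copied, so the shared count goes up on entry
// and comes back down when the copy is destroyed on return.
long owners_by_value(SharedImage img) {
    return img.data.use_count();
}

// By const reference: no copy is made, so the count is left untouched.
long owners_by_ref(const SharedImage& img) {
    return img.data.use_count();
}
```

With one owner in the caller, `owners_by_ref` reports 1 and `owners_by_value` reports 2 for the duration of the call. If the same thing is happening in is_fullfit, which is called once per pixel from several threads, declaring the parameters as `const Mat&` would avoid those counter updates.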

CPU data: CPU name: 4th generation Intel(R) Core(TM) Processor family; frequency: 2.4 GHz; logical CPU count: 4

Here's my log: