There are some fundamental differences between Mystical's answer and your code.

Difference #1

Your code creates a chunk of work for each CPU, and lets it run to completion. This means that once a thread has finished, there will be a sharp drop in the CPU usage since a CPU will be idle while the other threads run to completion. This happens because scheduling is not always fair. One thread may progress, and finish, much faster than the others.

The OpenMP solution solves this by declaring schedule(dynamic) which tells OpenMP to, internally, create a work queue that all the threads will consume work from. When a chunk of work is finished, the thread that would have then exited in your code consumes another chunk of work and gets busy with it.

Eventually, this becomes a balancing act of picking adequately sized chunks. Too large, and the CPUs may not be maxed out toward the end of the task. Too small, and there can be significant overhead.

Difference #2

You are writing to a variable, primes that is shared between all of the threads.

This has 2 consequences:

- It requires synchronization to keep prevent a data race.

- It makes the cache on a modern CPU very unhappy since a cache flush is required before writes on one thread are visible to another thread.

The OpenMP solution solves this by reducing, via operator+(), the result of the individual values of primes each thread held into the final result. This is what reduction(+ : primes) does.

With this knowledge of how OpenMP is splitting up, scheduling the work, and combining the results, we can modify your code to behave similarly.

#include <iostream>

#include <thread>

#include <vector>

#include <utility>

#include <algorithm>

#include <functional>

#include <mutex>

#include <future>

using namespace std;

int prime(int a, int b)

{

int primes = 0;

for (a; a <= b; a++) {

int i = 2;

while (i <= a) {

if (a % i == 0)

break;

i++;

}

if (i == a) {

primes++;

}

}

return primes;

}

int workConsumingPrime(vector<pair<int, int>>& workQueue, mutex& workMutex)

{

int primes = 0;

unique_lock<mutex> workLock(workMutex);

while (!workQueue.empty()) {

pair<int, int> work = workQueue.back();

workQueue.pop_back();

workLock.unlock(); //< Don't hold the mutex while we do our work.

primes += prime(work.first, work.second);

workLock.lock();

}

return primes;

}

int main()

{

int nthreads = thread::hardware_concurrency();

int limit = 1000000;

// A place to put work to be consumed, and a synchronisation object to protect it.

vector<pair<int, int>> workQueue;

mutex workMutex;

// Put all of the ranges into a queue for the threads to consume.

int chunkSize = max(limit / (nthreads*16), 10); //< Handwaving came picking 16 and a good factor.

for (int i = 0; i < limit; i += chunkSize) {

workQueue.push_back(make_pair(i, min(limit, i + chunkSize)));

}

// Start the threads.

vector<future<int>> futures;

for (int i = 0; i < nthreads; ++i) {

packaged_task<int()> task(bind(workConsumingPrime, ref(workQueue), ref(workMutex)));

futures.push_back(task.get_future());

thread(move(task)).detach();

}

cout << "Number of logical cores: " << nthreads << "\n";

cout << "Calculating number of primes less than " << limit << "... \n";

// Sum up all the results.

int primes = 0;

for (future<int>& f : futures) {

primes += f.get();

}

cout << "There are " << primes << " prime numbers less than " << limit << ".\n";

}

This is still not a perfect reproduction of how the OpenMP example behaves. For example, this is closer to OpenMP's static schedule since chunks of work are a fixed size. Also, OpenMP does not use a work queue at all. So I may have lied a little bit -- call it a white lie since I wanted to be more explicit about showing the work being split up. What it is likely doing behind the scenes is storing the iteration that the next thread should start at when it comes available and a heuristic for the next chunk size.

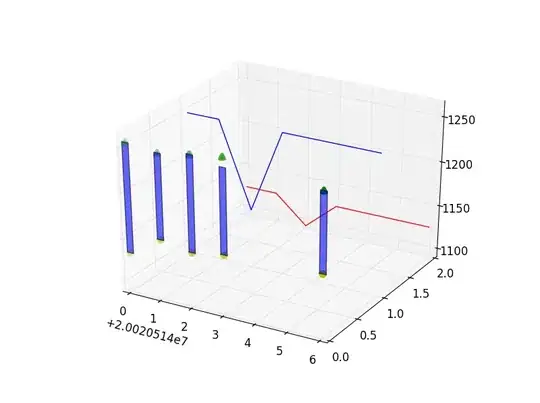

Even with these differences, I'm able to max out all my CPUs for an extended period of time.

Looking to the future...

You probably noticed that the OpenMP version is a lot more readable. This is because it's meant to solve problems just like this. So, when we try to solve them without a library or compiler extension, we end up reinventing the wheel. Luckily, there is a lot of work being done to bring this sort of functionality directly into C++. Specifically, the Parallelism TS can help us out if we could represent this as a standard C++ algorithm. Then we could tell the library to distribute the algorithm across all CPUs as it sees fit so it does all the heavy lifting for us.

In C++11, with a little bit of help from Boost, this algorithm could be written as:

#include <iostream>

#include <iterator>

#include <algorithm>

#include <boost/range/irange.hpp>

using namespace std;

bool isPrime(int n)

{

if (n < 2)

return false;

for (int i = 2; i < n; ++i) {

if (n % i == 0)

return false;

}

return true;

}

int main()

{

auto range = boost::irange(0, 1000001);

auto numPrimes = count_if(begin(range), end(range), isPrime);

cout << "There are " << numPrimes << " prime numbers less than " << range.back() << ".\n";

}

And to parallelise the algorithm, you just need to #include <execution_policy> and pass std::par as the first parameter to count_if.

auto numPrimes = count_if(par, begin(range), end(range), isPrime);

And that's the kind of code that makes me happy to read.

Note: Absolutely no time was spent optimising this algorithm at all. If we were to do any optimisation, I'd look into something like the the Sieve of Eratosthenes which uses previous prime computations to help with future ones.