The problem

I have a RabbitMQ server that serves as a queue hub for one of my systems. For the last week or so, its producers have been coming to a complete halt every few hours.

What I have tried

Brute force

- Stopping the consumers releases the lock for a few minutes, but then the blocking returns.

- Restarting RabbitMQ solves the problem for a few hours.

- I have an automated script that does the ugly restarts, but it's obviously far from a proper solution.

Allocating more memory

Following cantSleepNow's answer, I have increased the memory allocated to RabbitMQ to 90%. The server has a whopping 16GB of memory and the message count is not very high (millions per day), so that does not seem to be the problem.

From the command line:

    sudo rabbitmqctl set_vm_memory_high_watermark 0.9

And with /etc/rabbitmq/rabbitmq.config:

    [
      {rabbit,
        [
          {loopback_users, []},
          {vm_memory_high_watermark, 0.9}
        ]
      }
    ].
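To put the 0.9 setting in concrete terms, here is a rough sketch of the arithmetic. It assumes that "16GB" means 16 GiB visible to RabbitMQ and that the watermark is a plain fraction of that total; the function names are made up for illustration. RabbitMQ blocks publishing connections while memory use is above this threshold, so the number is what the node's reported memory use would need to be compared against.

```python
GIB = 1024 ** 3  # bytes in one GiB


def memory_alarm_threshold(total_ram_bytes, watermark):
    """Bytes of memory use at which a relative watermark would be crossed."""
    return int(total_ram_bytes * watermark)


def publishers_blocked(mem_used_bytes, total_ram_bytes, watermark):
    """True if memory use is above the watermark threshold (hypothetical helper)."""
    return mem_used_bytes > memory_alarm_threshold(total_ram_bytes, watermark)


# Assumption: the server's 16GB is 16 GiB, all of it visible to RabbitMQ.
threshold = memory_alarm_threshold(16 * GIB, 0.9)
print(round(threshold / GIB, 1))  # 14.4
```

With these assumptions the alarm would only fire above roughly 14.4 GiB of use, which is consistent with memory not looking like the culprit at this message volume.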

Code & Design

I use Python for all consumers and producers.

Producers

The producers are API servers that serve calls. Whenever a call arrives, a connection is opened, a message is sent, and the connection is closed.

    from kombu import Connection

    def send_message_to_queue(host, port, queue_name, message):
        """Sends a single message to the queue."""
        with Connection('amqp://guest:guest@%s:%s//' % (host, port)) as conn:
            simple_queue = conn.SimpleQueue(name=queue_name, no_ack=True)
            simple_queue.put(message)
            simple_queue.close()

Consumers

The consumers differ slightly from each other, but they generally use the following pattern: open a connection and wait on it until a message arrives. The connection can stay open for long periods of time (say, days).

    with Connection('amqp://whatever:whatever@whatever:whatever//') as conn:
        while True:
            queue = conn.SimpleQueue(queue_name)
            message = queue.get(block=True)
            message.ack()
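One detail in the pattern above is that a new `SimpleQueue` object is constructed on every loop iteration and never closed. Whether that has anything to do with the blocking is not established here. For comparison only, and not the code that is deployed, a variant that creates the queue once and closes it on exit could look like the sketch below. The `consume` function, its `handle` callback and the `max_messages` parameter are hypothetical names added so the loop can be exercised without a broker; `conn` is expected to be a kombu `Connection`.

```python
def consume(conn, queue_name, handle, max_messages=None):
    """Read messages from one SimpleQueue that is created once and reused.

    `handle` and `max_messages` are illustrative additions, not part of the
    original consumers; `max_messages=None` loops forever like the original.
    """
    queue = conn.SimpleQueue(queue_name)  # created once, outside the loop
    handled = 0
    try:
        while max_messages is None or handled < max_messages:
            message = queue.get(block=True)  # wait until a message arrives
            handle(message.payload)
            message.ack()
            handled += 1
    finally:
        queue.close()  # release the consumer when the loop ends
    return handled
```

It would be called as `consume(conn, queue_name, process)` inside the same `with Connection(...) as conn:` block, where `process` stands for whatever each consumer does with the message body.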

Design reasoning

- Consumers always need to keep an open connection with the queue server

- The Producer session should only live during the lifespan of the API call

This design caused no problems until about a week ago.

Web view dashboard

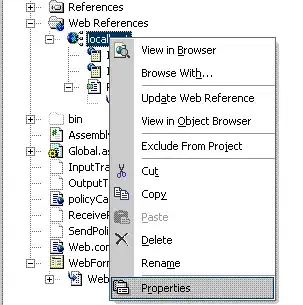

The web console shows that the consumers in 127.0.0.1 and 172.31.38.50 block the consumers from 172.31.38.50, 172.31.39.120, 172.31.41.38 and 172.31.41.38.
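The same information can be pulled out of the management plugin's HTTP API rather than read off the screenshot. The sketch below groups connections by their reported state. It assumes the list comes from the `/api/connections` endpoint and that each entry carries `state` and `peer_host` fields; the sample entries use documentation-range addresses and are invented for illustration, they are not the hosts from this server.

```python
from collections import defaultdict


def hosts_by_state(connections):
    """Group peer hosts by connection state (e.g. running, blocking, blocked)."""
    grouped = defaultdict(set)
    for conn in connections:
        state = conn.get("state", "unknown")
        grouped[state].add(conn.get("peer_host", "unknown"))
    return {state: sorted(hosts) for state, hosts in grouped.items()}


# Made-up sample shaped like a parsed /api/connections response.
sample = [
    {"peer_host": "192.0.2.10", "state": "running"},
    {"peer_host": "192.0.2.11", "state": "blocked"},
    {"peer_host": "192.0.2.12", "state": "blocked"},
    {"peer_host": "192.0.2.11", "state": "blocked"},
]
print(hosts_by_state(sample))
```

Running this periodically and logging the result would show which hosts are in a blocked or blocking state at the moment the producers stall.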

System metrics

Just to be on the safe side, I checked the server load. As expected, the load average and CPU utilization metrics are low.

Why does RabbitMQ reach such a deadlock?