It seems like every single time I go to write any code that deals with fetch, push, read, or write operations, the entire swath of code is ad hoc, ugly, and completely unusable outside the context of that exact application. Worse yet, it feels like I have to reinvent the wheel every time I design these things. It seems to me that the nature of I/O operations is very linear, and not well suited to modular or object-oriented patterns.

I'm really hoping somebody can tell me I'm wrong here. Are there techniques/patterns for object-oriented or modular file and data I/O? Is there some convention I can follow to add some code re-usability? I know that various tools exist to make reading individual files easier, like XML parsers and the like, but I'm referring to the larger designs which use those tools.

The problem isn't limited to a single language; I hit the same wall in Java, C, Matlab, Python, and others.

A sub-topic of this is addressed by this question about what object should invoke saving. That question seems to refer to a factory pattern, where a file's contents are built up, then finally written to disk. My question is about the overall architecture, including factories for write operations, but also (Insert Pattern Here) for reading/fetching operations.

The best thing I've been able to come up with is a facade pattern... but holy smokes is the code in those facades ugly.

Somebody please tell me a pattern exists wherein I could either re-use some of my code, or at least follow a template for future read-writes.

Somebody asked about Modular Design here, but the answers are specific to that asker's problem, and aren't altogether useful.

Example

This is only an example, and is based on a project I did last year. Feel free to provide a different example.

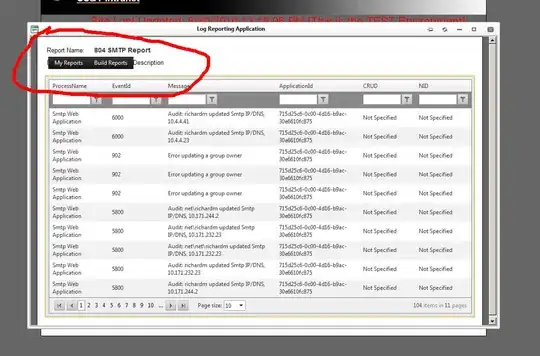

Our program is a physics sandbox. We want to load XML data which describes physical attributes of objects in that sandbox. We also need to load .3DS files which contain 3D rendering information. Finally, we need to query an SQL database to find out who owns what objects.

We also need to be able to support new 3D model formats as they come out. We don't know what those files will look like yet, but we want to set up the code framework in advance. That way, once we get the new data schema, the loading routine can be implemented quickly.

Data from all 3 sources would be used to create instances of objects in our software.

Later, we need to save physics information, like position and velocity, to a database, and also save custom texture information to local files. We don't know what type of file the texture will be, so we just want to lay out the code structure so we can drop in the saving code later.
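As a sketch of what "lay out the code structure so we can drop in the saving code later" might look like, assuming each destination sits behind one small interface: the names below (`Saver`, `InMemoryPhysicsSaver`, `TextureSaver`, `save_all`) are made up for illustration, and the in-memory class merely stands in for the real database writer.

```python
from typing import Dict, Protocol


class Saver(Protocol):
    """Anything that can persist one kind of data for one object."""
    def save(self, object_id: str, data: dict) -> None: ...


class InMemoryPhysicsSaver:
    """Stand-in for the database writer; keeps rows in a dict."""
    def __init__(self) -> None:
        self.rows: Dict[str, dict] = {}

    def save(self, object_id: str, data: dict) -> None:
        self.rows[object_id] = dict(data)


class TextureSaver:
    """Stub: the texture file format is not known yet."""
    def save(self, object_id: str, data: dict) -> None:
        raise NotImplementedError("texture format not decided yet")


def save_all(savers: Dict[str, Saver], object_id: str,
             state: Dict[str, dict]) -> None:
    """Send each kind of data to the saver registered for that kind."""
    for kind, data in state.items():
        savers[kind].save(object_id, data)
```

The idea would be that the objects only know about `save_all` and a dictionary of savers, and the texture stub gets filled in once the format is decided.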

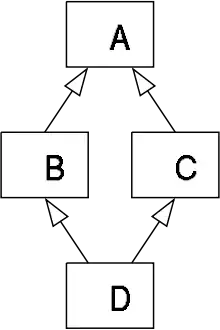

Without some sort of design pattern, even a small number of objects quickly leads to a tightly coupled network.

A facade can decouple the objects/data from the corresponding files, but all that does is centralize the problem within the input and output facades, which can turn into a nightmarish mess in a hurry. Moreover, the objects/data are now tightly coupled to the facade, so no modularity is really gained.

Edited from 3 weeks ago...

Previously, I had provided a bunch of pseudocode for the issue I was facing when I first asked this question, but I have since decided it obfuscated my main question. Suffice it to say: I had to use about 2000 lines of really wonky code for that particular set of read operations, it did remarkably little in terms of processing and organization, and I'll never be able to use any of it on another project again.

I would like to avoid writing such code in the future.