I am clustering time series data using distance measures and clustering algorithms that are appropriate for longitudinal data. My goal is to validate the optimal number of clusters for this dataset using cluster validation statistics. I have read a number of articles and posts on Stack Overflow on this subject, particularly "Determining the Optimal Number of Clusters". Visual inspection is only possible on a subset of my data; since I am dealing with big data, I cannot rely on that subset being representative of the whole dataset.

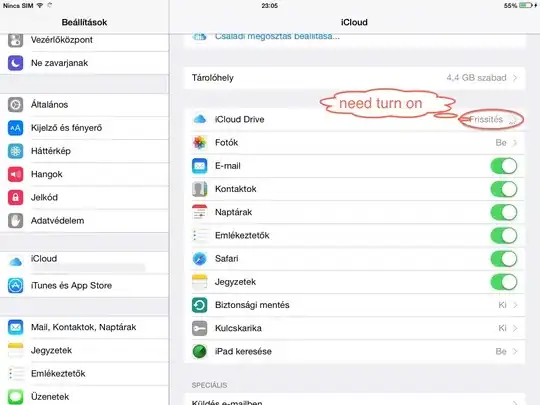

My approach is the following:

1. I cluster several times using different numbers of clusters and calculate the cluster statistics for each of these options.
2. I calculate the cluster statistics using the cluster.stats function from the fpc R package (cluster.stats in the fpc CRAN package). I plot these and decide, for each metric, which number of clusters is best (see my code below).
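For reference, here is a minimal sketch of how a data frame like my `cs_metrics` can be built. It is not my actual pipeline: the simulated `series` matrix, the Euclidean `dist()` call, and the average-linkage `hclust()` are placeholders I am assuming for illustration, standing in for the real time series, the time series distance measure, and the clustering algorithm. Only `cluster.stats()` and the names of the statistics it returns come from fpc.

```r
library(fpc)  # provides cluster.stats()

set.seed(1)
# Hypothetical toy data: 60 short series in three shifted groups.
series <- rbind(
  matrix(rnorm(20 * 10, mean = 0), nrow = 20),
  matrix(rnorm(20 * 10, mean = 3), nrow = 20),
  matrix(rnorm(20 * 10, mean = 6), nrow = 20)
)

# Placeholder dissimilarity; a time series distance would be used here instead.
d <- dist(series)
tree <- hclust(d, method = "average")

# Candidate numbers of clusters and the statistics to keep from cluster.stats().
ks <- 2:8
metric_names <- c("cluster.number", "average.within", "average.between",
                  "avg.silwidth", "ch", "dunn", "dunn2", "entropy",
                  "pearsongamma", "within.cluster.ss")

# One row of statistics per candidate number of clusters.
cs_metrics <- do.call(rbind, lapply(ks, function(k) {
  stats <- cluster.stats(d, cutree(tree, k = k))
  as.data.frame(stats[metric_names])
}))

print(cs_metrics)
```

Each row of the result corresponds to one value of `k`, so the columns can be plotted against `cluster.number` or passed to `which.min` / `which.max`.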

My problem is that each of these metrics evaluates a different aspect of the clustering "goodness", and the best number of clusters according to one metric may not coincide with the best number according to another. For example, Dunn's index may point towards using 3 clusters, while the within-cluster sum of squares may indicate that 75 clusters is a better choice.

I understand the basics: distances between points within a cluster should be small, the within-cluster sum of squares should be minimized, and clusters should be well separated, meaning that observations in different clusters should have a large dissimilarity. However, I do not know which of these metrics is most important to consider when evaluating cluster quality.

How do I approach this problem, keeping in mind the nature of my data (time series) and the goal of grouping together identical series, or series with strongly similar pattern regions?

Am I approaching the clustering problem the right way, or am I missing a crucial step? Or am I misunderstanding how to use these statistics?

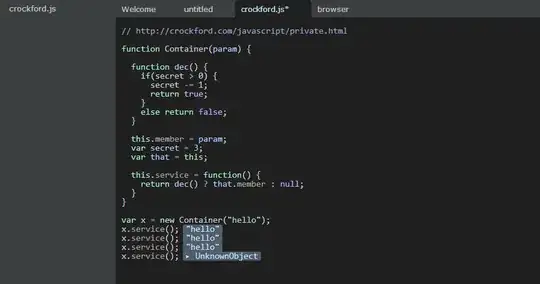

Here is how I decide the best number of clusters from the statistics; cs_metrics is the data frame that contains the statistics.

    Average.within.best  <- cs_metrics$cluster.number[which.min(cs_metrics$average.within)]
    Average.between.best <- cs_metrics$cluster.number[which.max(cs_metrics$average.between)]
    Avg.silwidth.best    <- cs_metrics$cluster.number[which.max(cs_metrics$avg.silwidth)]
    Calinsky.best        <- cs_metrics$cluster.number[which.max(cs_metrics$ch)]
    Dunn.best            <- cs_metrics$cluster.number[which.max(cs_metrics$dunn)]
    Dunn2.best           <- cs_metrics$cluster.number[which.max(cs_metrics$dunn2)]
    Entropy.best         <- cs_metrics$cluster.number[which.min(cs_metrics$entropy)]
    Pearsongamma.best    <- cs_metrics$cluster.number[which.max(cs_metrics$pearsongamma)]
    Within.SS.best       <- cs_metrics$cluster.number[which.min(cs_metrics$within.cluster.ss)]
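The same per-metric selection can also be written as one lookup, which makes it easier to see where the metrics disagree. This is only a sketch: the `cs_metrics` values below are made up for illustration (they are not my results), only four of the metrics are included, and the `directions` vector is a helper I am introducing here to record whether each metric is minimized or maximized, mirroring the `which.min` / `which.max` choices in my code.

```r
# Made-up values purely for illustration; not results from my data.
cs_metrics <- data.frame(
  cluster.number    = 2:5,
  average.within    = c(4.1, 3.2, 2.9, 2.7),
  avg.silwidth      = c(0.41, 0.55, 0.48, 0.39),
  dunn              = c(0.20, 0.35, 0.30, 0.18),
  within.cluster.ss = c(900, 610, 540, 500)
)

# Hypothetical helper: whether each metric is minimized or maximized.
directions <- c(
  average.within    = "min",
  avg.silwidth      = "max",
  dunn              = "max",
  within.cluster.ss = "min"
)

# For each metric, pick the number of clusters at its best value.
best_k <- sapply(names(directions), function(metric) {
  pick <- if (directions[[metric]] == "min") which.min else which.max
  cs_metrics$cluster.number[pick(cs_metrics[[metric]])]
})

print(best_k)         # best number of clusters per metric
print(table(best_k))  # how many metrics agree on each number
```

With these toy values, the two "minimize" metrics keep improving as the number of clusters grows and so select the largest candidate, while the silhouette width and Dunn's index select a smaller one. This is the same kind of disagreement I describe above.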

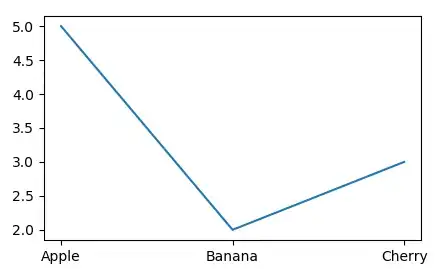

Here are the plots that compare the cluster statistics for the different numbers of clusters: