My app uses an external USB microphone clocked by a very accurate temperature-compensated crystal oscillator (TCXO). Its sample rate is 48 kHz, and I connect it to the iOS device through the Lightning camera connection kit adapter. I'm using the EZAudio library and everything works fine, except that iOS seems to keep its own internal clock source for audio sampling instead of using my accurate 48 kHz clock.

I have read through the Core Audio documentation, but I didn't find anything about clock source selection when using Lightning audio.

Is there any way to choose between the internal and the external clock source?

Thanks!

    var audioFormatIn = AudioStreamBasicDescription(
        mSampleRate: Float64(48000),
        mFormatID: AudioFormatID(kAudioFormatLinearPCM),
        mFormatFlags: AudioFormatFlags(kAudioFormatFlagIsSignedInteger | kAudioFormatFlagIsPacked),
        mBytesPerPacket: 2,
        mFramesPerPacket: 1,
        mBytesPerFrame: 2,
        mChannelsPerFrame: 1,
        mBitsPerChannel: 16,
        mReserved: 0)

    func initAudio() {
        let session: AVAudioSession = AVAudioSession.sharedInstance()
        do {
            try session.setCategory(AVAudioSessionCategoryPlayAndRecord)
            try session.setMode(AVAudioSessionModeMeasurement)
            try session.setActive(true)
        } catch {
            print("Error Audio")
        }
        self.microphone = EZMicrophone(microphoneDelegate: self, withAudioStreamBasicDescription: audioFormatIn)
    }

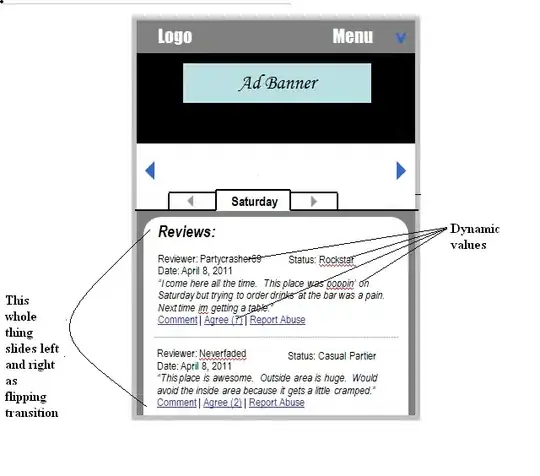

UPDATE: Thanks to @Rhythmic Fistman, setting the preferred sample rate partly solved the problem. iOS no longer resamples, and the TCXO remains the master clock source. However, the signal now quickly becomes corrupted by what look like empty samples in the buffers, and the corruption gets worse the longer the recording runs. Since the hardware occupies the Lightning port, I can't easily debug while it is plugged in.
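For reference, the change amounts to roughly the following. This is a minimal sketch rather than my exact code: it uses the current Swift spellings of the session constants (`.playAndRecord`, `.measurement`) instead of the older ones in my snippet above, and `configureSession` and `rateWasHonored` are hypothetical helper names of my own, not part of EZAudio or AVFoundation. The preferred sample rate is only a request, so the sketch reads back `sampleRate` after activation to see what the hardware actually runs at.

```swift
#if os(iOS)
import AVFoundation
#endif

/// Hypothetical helper: true when the rate the hardware actually runs at
/// matches the one that was requested, within a small tolerance in Hz.
func rateWasHonored(requested: Double, actual: Double, tolerance: Double = 0.5) -> Bool {
    return abs(requested - actual) <= tolerance
}

#if os(iOS)
/// Hypothetical helper: configure the shared session for the external
/// microphone and return the sample rate the session ends up with.
func configureSession(preferredRate: Double = 48_000) throws -> Double {
    let session = AVAudioSession.sharedInstance()
    try session.setCategory(.playAndRecord, mode: .measurement, options: [])
    // Ask for the microphone's native rate so iOS has no reason to resample.
    try session.setPreferredSampleRate(preferredRate)
    try session.setActive(true)
    // Read the rate back once the session is active; the request may not be honored.
    return session.sampleRate
}
#endif
```

I would call `configureSession()` before creating the `EZMicrophone`, then log `rateWasHonored(requested: 48_000, actual: rate)` to confirm the session really runs at 48 kHz.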

Screenshot of the waveform after 7 minutes:

Screenshot of the waveform after 15 minutes: