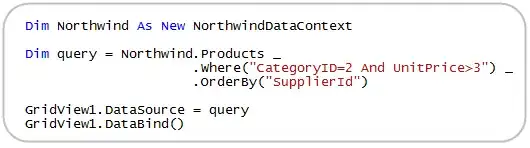

I am trying to blend two images together after warping them. The problem is that the images are not the same size. This is what I have tried to make the blend work:

    import cv2
    import numpy as np

    # warped_image and image_2 are parameters; warped_image is always larger
    output_image = np.copy(warped_image)

    topBottom = (warped_image.shape[0] - image_2.shape[0]) // 2
    leftRight = (warped_image.shape[1] - image_2.shape[1]) // 2

    if warped_image.shape[0] % 2 != 0:
        warped_image = warped_image[0: warped_image.shape[0] - 1, 0: warped_image.shape[1] - 1]

    image_2 = cv2.copyMakeBorder(image_2, topBottom, topBottom, leftRight, leftRight, cv2.BORDER_CONSTANT, value=[0, 0, 0])

    while image_2.shape[0] != warped_image.shape[0]:
        image_2 = cv2.copyMakeBorder(image_2, 1, 1, 1, 1, cv2.BORDER_CONSTANT,
                                     value=[0, 0, 0])

    cv2.addWeighted(warped_image, .5, image_2, .5, 0.0, output_image)
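One thing worth noting about the padding arithmetic above: using `diff // 2` on both sides comes up one pixel short whenever the size difference is odd. Here is a minimal sketch of splitting the padding asymmetrically instead. `split_padding` is a hypothetical helper name, not part of OpenCV; its results could presumably be passed as the top/bottom and left/right arguments of `cv2.copyMakeBorder`.

```python
def split_padding(target, current):
    """Return (before, after) so that current + before + after == target."""
    diff = target - current
    if diff < 0:
        raise ValueError("target must be at least as large as current")
    before = diff // 2
    after = diff - before  # absorbs the extra pixel when diff is odd
    return before, after


# Example with an odd height difference (4111 - 2368 = 1743).
top, bottom = split_padding(4111, 2368)
```

With this split the padded size matches the target exactly, so no follow-up loop or cropping should be needed to make the shapes agree.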

The problem is that the blend is inaccurate on the first attempt, and if I try to blend an already blended image with another one, the result becomes completely distorted.

Is there a better way to blend two images together at a specific point?
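To make "at a specific point" concrete, here is a rough sketch of the kind of operation being asked about, under several assumptions: the top-left offset `(x, y)` of the smaller image inside the larger one is already known, both images are `uint8` with the same number of channels, and `blend_at` is a hypothetical helper name. It uses plain NumPy arithmetic rather than `cv2.addWeighted`, and only blends the overlapping region instead of padding either image.

```python
import numpy as np


def blend_at(base, overlay, x, y, alpha=0.5):
    """Blend `overlay` onto a copy of `base`, top-left corner at (x, y).

    Only the overlapping region is blended; the rest of `base` is unchanged.
    Assumes uint8 images with the same number of channels.
    """
    out = base.copy()
    h, w = overlay.shape[:2]
    # Clip the overlay rectangle to the bounds of the base image.
    x0, y0 = max(x, 0), max(y, 0)
    x1, y1 = min(x + w, base.shape[1]), min(y + h, base.shape[0])
    if x0 >= x1 or y0 >= y1:
        return out  # the images do not overlap at this offset
    region = out[y0:y1, x0:x1].astype(np.float32)
    patch = overlay[y0 - y:y1 - y, x0 - x:x1 - x].astype(np.float32)
    blended = alpha * region + (1.0 - alpha) * patch
    out[y0:y1, x0:x1] = np.clip(np.rint(blended), 0, 255).astype(np.uint8)
    return out
```

Because the output always has the shape of `base`, repeated blends would not grow the canvas, which may help with the size blow-up described in the edit.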

Edit Results:

Two images:

Blending a third to the first two:

The second image is actually too large to load in here. Its final size is 62521 × 4111, which is incredibly large considering that the source images are all 3200 × 2368.