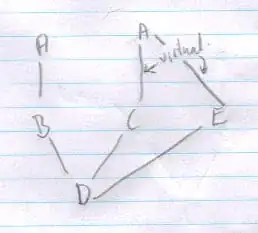

I am working on a reinforcement learning task and decided to use a Keras neural network model for Q-value approximation. The approach is the common one: after each action, the reward is stored in a replay memory array; then I take a random sample from it and fit the model on the new data, state-action => reward + predicted_Q (more details here). To do the training, the Q value has to be predicted for each item in the training set.
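A rough sketch of that training step, written with NumPy only so it runs without Keras. Everything named here is hypothetical: `predict_q` stands in for `model.predict`, `GAMMA` is an assumed discount factor, and each memory entry is assumed to hold the 8 state-action features, the reward, and the features of the next state-action pair.

```python
import random
import numpy as np

GAMMA = 0.9  # assumed discount factor, not taken from my actual code


def predict_q(features):
    """Stand-in for model.predict: returns one Q value per input row."""
    return np.zeros((features.shape[0], 1))


# Replay memory filled with dummy transitions:
# (state-action features, reward, next state-action features)
rng = np.random.default_rng(0)
memory = [
    (rng.normal(size=8), float(rng.normal()), rng.normal(size=8))
    for _ in range(100)
]

# Random sample from the replay memory
batch = random.sample(memory, 32)
x = np.stack([item[0] for item in batch])
rewards = np.array([item[1] for item in batch])
next_x = np.stack([item[2] for item in batch])

# Predict Q for the whole sample in one call, then build the targets
next_q = predict_q(next_x)[:, 0]
targets = rewards + GAMMA * next_q

# With a real Keras model, this is where model.fit(x, targets) would go.
```

The sketch predicts for all sampled items in a single call; calling the prediction once per item instead would repeat the per-call overhead for every row.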

The script runs very slowly, so I started investigating. Profiling shows that 56.87% of the cumulative time is spent in the _predict_loop method:

That looks strange, because prediction is just a single forward pass: a handful of multiplications and additions. The model I am using is very simple: 8 inputs, 5 nodes in the hidden layer, and 1 output.
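As a rough illustration of how little arithmetic is involved, here is a NumPy sketch of a forward pass through an 8-5-1 network. The weights are random and the `tanh` activation is an assumption; this is not the actual Keras model, only the same shape.

```python
import numpy as np

rng = np.random.default_rng(0)

# Randomly initialised weights for an 8 -> 5 -> 1 network
W1 = rng.normal(size=(8, 5))
b1 = np.zeros(5)
W2 = rng.normal(size=(5, 1))
b2 = np.zeros(1)


def forward(x):
    """Forward pass: x has shape (n_samples, 8), result has shape (n_samples, 1)."""
    hidden = np.tanh(x @ W1 + b1)  # assumed activation
    return hidden @ W2 + b2


batch = rng.normal(size=(32, 8))
q_values = forward(batch)
```

For a batch of 32 rows this amounts to two small matrix products, so the raw computation should be negligible compared with any fixed cost per prediction call.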

I have installed and configured CUDA and run a few example tests. They show that the GPU is being used, and I can see a heavy GPU load while they run. When I run my own code, I get the message "Using gpu device 0: GeForce GT 730", but the GPU load stays really low (about 10%).

Is it normal for the predict function to take so much time? Is there a way to use the GPU for this computation?