I need to make fairly sensitive color (brightness) measurements in webcam footage using OpenCV. The problem is that the ambient light fluctuates, which makes it hard to get accurate results. I'm looking for a way to correct each successive frame of the video so that the global lighting differences are smoothed out. The light changes I'm trying to filter out affect most or all of the image at once. I have tried calculating the difference between frames and subtracting it, but with little luck. Does anyone have advice on how to approach this problem?

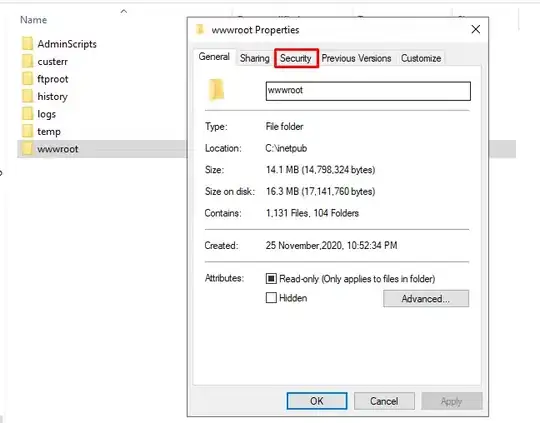

EDIT: The 2 images below are from the same video, with the color changes slightly magnified. If you alternate between them, you'll see that there are slight changes in lighting, probably due to clouds shifting outside. The problem is that these changes obscure any other color changes I might want to detect.

So I would like to filter out these particular changes. Since I only need part of each frame I capture, I figured it should be possible to measure the lighting changes in the rest of the footage, outside my area of interest, and use that to remove them from the part I care about.
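A minimal sketch of this idea, assuming the lighting change acts as a single multiplicative gain over the whole frame (an assumption, not something verified on this footage). The function names `reference_mean` and `compensate_lighting`, and the boolean mask `ref_mask` marking the pixels outside the area of interest, are hypothetical names introduced for illustration:

```python
import numpy as np

def reference_mean(frame, ref_mask):
    """Mean brightness of the reference region (pixels where ref_mask is True)."""
    return float(frame[ref_mask].mean())

def compensate_lighting(frame, ref_mask, baseline_mean):
    """Rescale a frame so its reference region matches a baseline brightness.

    Assumes the ambient change is one global multiplicative gain;
    an additive offset or spatially varying light would need a different model.
    """
    current = reference_mean(frame, ref_mask)
    gain = baseline_mean / max(current, 1e-6)  # guard against division by zero
    corrected = frame.astype(np.float32) * gain
    # Round before casting so values like 149.99999 do not truncate to 149.
    return np.rint(np.clip(corrected, 0, 255)).astype(np.uint8)
```

Frames returned by `cv2.VideoCapture.read()` are NumPy arrays, so they can be passed in directly: compute `baseline_mean` once from the first frame, then call `compensate_lighting` on every later frame before measuring the area of interest. The correction is only as good as the assumption that the reference region itself stays constant apart from the lighting.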

I have also tried to capture the dominant frequencies of the changes using `dft`, so that I could simply ignore the lighting changes, but I am not familiar enough with that function to get it working. I have only been using OpenCV for a week, so I am still learning.