Imagine you are reading millions of data rows from a CSV file. Each line shows the sensor-name, the current sensor-value and the timestamp when that value was observed.

key, value, timestamp

temp_x, 8°C, 10:52am

temp_x, 25°C, 11:02am

temp_x, 30°C, 11:12am

This relates to a signal like this:

So I wonder what's the best and most efficient way to store that into Apache Hadoop HDFS. First idea is using BigTable aka HBase. Here the signal name is the row-key while the value is a column-group that saves the values over time. One could add more column-groups (as for instance statistics) to that row-key.

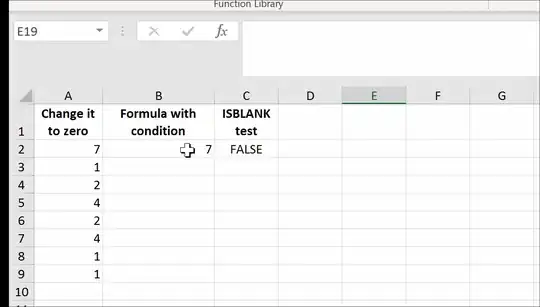

Another idea is using a tabular (or SQL like) structure. But then you replicate the key in each row. And you have to calculate statistics on demand and store them separately (here into a second table).

I wonder if there is any better idea. Once stored, I want to read that data in Python/PySpark and do data analytics and machine learning. Therefore the data should be easily accessible using a schema (Spark RDD).