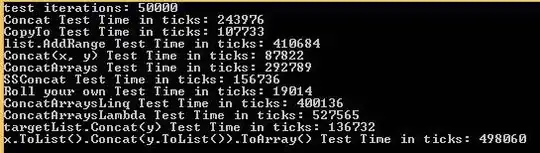

I decided to add another answer with timings for different methods and different dtypes - it would be too long for one answer...

Timings against 1M rows DF for the following dtypes: int32, int64, float64, object (string):

In [207]: result

Out[207]:

int32 int64 float string

method

df[~np.in1d(df.col, excl)] 249 271 307 2420

df[~df.col.isin(excl)] 414 210 514 189

df.ix[~df.col.isin(excl)] 399 212 553 189

df.query('@excl not in col') 415 228 563 206

In [208]: result.T

Out[208]:

method df[~np.in1d(df.col, excl)] df[~df.col.isin(excl)] df.ix[~df.col.isin(excl)] df.query('@excl not in col')

int32 249 414 399 415

int64 271 210 212 228

float 307 514 553 563

string 2420 189 189 206

Raw results:

int32:

In [159]: %timeit df[~np.in1d(df.int32, exclude_int32)]

1 loop, best of 3: 249 ms per loop

In [160]: %timeit df[~df.int32.isin(exclude_int32)]

1 loop, best of 3: 414 ms per loop

In [161]: %timeit df.ix[~df.int32.isin(exclude_int32)]

1 loop, best of 3: 399 ms per loop

In [162]: %timeit df.query('@exclude_int32 not in int32')

1 loop, best of 3: 415 ms per loop

int64:

In [163]: %timeit df[~np.in1d(df.int64, exclude_int64)]

1 loop, best of 3: 271 ms per loop

In [164]: %timeit df[~df.int64.isin(exclude_int64)]

1 loop, best of 3: 210 ms per loop

In [165]: %timeit df.ix[~df.int64.isin(exclude_int64)]

1 loop, best of 3: 212 ms per loop

In [166]: %timeit df.query('@exclude_int64 not in int64')

1 loop, best of 3: 228 ms per loop

float64:

In [167]: %timeit df[~np.in1d(df.float, exclude_float)]

1 loop, best of 3: 307 ms per loop

In [168]: %timeit df[~df.float.isin(exclude_float)]

1 loop, best of 3: 514 ms per loop

In [169]: %timeit df.ix[~df.float.isin(exclude_float)]

1 loop, best of 3: 553 ms per loop

In [170]: %timeit df.query('@exclude_float not in float')

1 loop, best of 3: 563 ms per loop

object / string:

In [171]: %timeit df[~np.in1d(df.string, exclude_str)]

1 loop, best of 3: 2.42 s per loop

In [172]: %timeit df[~df.string.isin(exclude_str)]

10 loops, best of 3: 189 ms per loop

In [173]: %timeit df.ix[~df.string.isin(exclude_str)]

10 loops, best of 3: 189 ms per loop

In [174]: %timeit df.query('@exclude_str not in string')

1 loop, best of 3: 206 ms per loop

Conclusion:

np.in1d() - wins for (int32 and float64) searches, but it's approx. 10 times slower (compared to others) when searching strings, so don't use it for object (strings) and for int64 dtypes!

Setup:

df = pd.DataFrame({

'int32': np.random.randint(0, 10**6, 10),

'int64': np.random.randint(10**7, 10**9, 10).astype(np.int64)*10,

'float': np.random.rand(10),

'string': np.random.choice([c*10 for c in string.ascii_uppercase], 10),

})

df = pd.concat([df] * 10**5, ignore_index=True)

exclude_str = np.random.choice([c*10 for c in string.ascii_uppercase], 100).tolist()

exclude_int32 = np.random.randint(0, 10**6, 100).tolist()

exclude_int64 = (np.random.randint(10**7, 10**9, 100).astype(np.int64)*10).tolist()

exclude_float = np.random.rand(100)

In [146]: df.shape

Out[146]: (1000000, 4)

In [147]: df.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1000000 entries, 0 to 999999

Data columns (total 4 columns):

float 1000000 non-null float64

int32 1000000 non-null int32

int64 1000000 non-null int64

string 1000000 non-null object

dtypes: float64(1), int32(1), int64(1), object(1)

memory usage: 26.7+ MB

In [148]: df.head()

Out[148]:

float int32 int64 string

0 0.221662 283447 6849265910 NNNNNNNNNN

1 0.276834 455464 8785039710 AAAAAAAAAA

2 0.517846 618887 8653293710 YYYYYYYYYY

3 0.318897 363191 2223601320 PPPPPPPPPP

4 0.323926 777875 5357201380 QQQQQQQQQQ