I'm reading a multiline-record file using SparkContext.newAPIHadoopFile with a custom delimiter. I have already prepared and reduced my data. Now I want to add the key back to every line (entry) and then write the result to an Apache Parquet file, which is stored in HDFS.

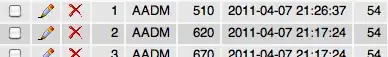

This figure should explain my problem. What I'm looking for is the red arrow, i.e. the last transformation before writing the file. Any ideas? I tried flatMap, but then the timestamp and the float value ended up in separate records.
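To make the intended transformation concrete, here is a minimal sketch of what I'm after. It assumes each reduced record has the shape `(key, [(timestamp, value), ...])`, which is a guess at the layout in the figure. The name `attach_key` and the sample data are hypothetical. The sketch runs in plain Python, so it only imitates what `flatMap` would do with the same function.

```python
from itertools import chain


def attach_key(record):
    """Turn (key, [(timestamp, value), ...]) into [(key, timestamp, value), ...]."""
    key, entries = record
    # Return a list of whole tuples. flatMap unpacks only this outer list,
    # so each (key, timestamp, value) tuple stays together as one record.
    return [(key, timestamp, value) for timestamp, value in entries]


# Hypothetical sample data standing in for the reduced RDD (assumed shape).
sample = [
    ("sensor_a", [("2016-01-01 00:00:00", 1.5), ("2016-01-01 00:01:00", 2.5)]),
    ("sensor_b", [("2016-01-01 00:00:00", 0.25)]),
]

# Plain-Python stand-in for rdd.flatMap(attach_key).collect()
rows = list(chain.from_iterable(attach_key(r) for r in sample))

for row in rows:
    print(row)
```

In Spark, the same function would presumably be passed as `rdd.flatMap(attach_key)`. If the function returns a single flat tuple instead of a list of tuples, `flatMap` unpacks the tuple itself, which may be why the timestamp and the float value were split apart in my attempt. The resulting RDD of 3-tuples could then be turned into a DataFrame with `createDataFrame(rows_rdd, ["key", "timestamp", "value"])` and written with `df.write.parquet(...)`. The column names here are also assumptions.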

The Python script can be downloaded here and the sample text file here. I'm running the Python code in a Jupyter Notebook.