I've had to deal with this sort of thing in the past. My solution has been to use a headless browser to programmatically navigate and manipulate the web pages that contained the resources I was interested in. I've even done fairly non-straightforward tasks like logging in and filling out and submitting forms using this method.

I can see that you're trying to use a pure R approach to download these files by reverse-engineering the GET/POST requests that are generated by the link. This could work, but it would leave your implementation highly vulnerable to any future changes in the site's design, such as changes in the JavaScript event handler, URL redirects, or header requirements.

By using a headless browser you can limit your exposure to the top-level URL and a few minimal XPath queries that allow navigation to the target link. Granted, this still ties your code to non-contractual and fairly internal details of the site's design, but it's certainly less of an exposure. This is the hazard of web scraping.

I've always used the Java HtmlUnit library for my headless browsing, which I've found to be quite excellent. Of course, to leverage a Java-based solution from Rland would require spawning a Java process, which would require (1) Java to be installed on the user's machine, (2) the $CLASSPATH to be properly set up to locate the HtmlUnit JARs as well as your custom file-downloading main class, and (3) proper invocation of the Java command with correct arguments using one of R's methods of shelling out to a system command. Needless to say, this is fairly involved and messy.

A pure R headless browsing solution would be nice, but unfortunately, it looks to me like R does not offer any native headless browsing solution. The closest is RSelenium, which appears to be just an R binding to the Java client library of the Selenium browser automation software. This means it will not operate independently of the user's GUI browser, and requires interaction with an external Java process anyway (although in this case the details of the interaction are conveniently encapsulated underneath the RSelenium API).

Using HtmlUnit, I've created a fairly generic Java main class that can be used to download a file by clicking on a link on a web page. The parameterization of the application is as follows:

- The URL of the page.

- An optional sequence of XPath expressions to allow descending into any number of nested frames starting from the top-level page. Note: I actually parse this out of the URL argument by splitting on

\s*>\s*, which I like as a concise syntax. I used the > character because it is not valid in URLs.

- A single XPath expression that specifies the anchor link to click.

- An optional file name under which to save the downloaded file. If omitted, it will be derived from either a

Content-Disposition header whose value matches the pattern filename="(.*)" (this was an unusual case I encountered when scraping icons a while back) or, failing that, the basename of the request URL that triggered the file stream response. The basename derivation method works for your target link.

Here's the code:

package com.bgoldst;

import java.util.List;

import java.util.ArrayList;

import java.io.File;

import java.io.FileOutputStream;

import java.io.InputStream;

import java.io.OutputStream;

import java.io.IOException;

import java.util.regex.Pattern;

import java.util.regex.Matcher;

import com.gargoylesoftware.htmlunit.WebClient;

import com.gargoylesoftware.htmlunit.BrowserVersion;

import com.gargoylesoftware.htmlunit.ConfirmHandler;

import com.gargoylesoftware.htmlunit.WebWindowListener;

import com.gargoylesoftware.htmlunit.WebWindowEvent;

import com.gargoylesoftware.htmlunit.WebResponse;

import com.gargoylesoftware.htmlunit.WebRequest;

import com.gargoylesoftware.htmlunit.util.NameValuePair;

import com.gargoylesoftware.htmlunit.Page;

import com.gargoylesoftware.htmlunit.html.HtmlPage;

import com.gargoylesoftware.htmlunit.html.HtmlAnchor;

import com.gargoylesoftware.htmlunit.html.BaseFrameElement;

public class DownloadFileByXPath {

public static ConfirmHandler s_downloadConfirmHandler = null;

public static WebWindowListener s_downloadWebWindowListener = null;

public static String s_saveFile = null;

public static void main(String[] args) throws Exception {

if (args.length < 2 || args.length > 3) {

System.err.println("usage: {url}[>{framexpath}*] {anchorxpath} [{filename}]");

System.exit(1);

} // end if

String url = args[0];

String anchorXPath = args[1];

s_saveFile = args.length >= 3 ? args[2] : null;

// parse the url argument into the actual URL and optional subsequent frame xpaths

String[] fields = Pattern.compile("\\s*>\\s*").split(url);

List<String> frameXPaths = new ArrayList<String>();

if (fields.length > 1) {

url = fields[0];

for (int i = 1; i < fields.length; ++i)

frameXPaths.add(fields[i]);

} // end if

// prepare web client to handle download dialog and stream event

s_downloadConfirmHandler = new ConfirmHandler() {

public boolean handleConfirm(Page page, String message) {

return true;

}

};

s_downloadWebWindowListener = new WebWindowListener() {

public void webWindowContentChanged(WebWindowEvent event) {

WebResponse response = event.getWebWindow().getEnclosedPage().getWebResponse();

//System.out.println(response.getLoadTime());

//System.out.println(response.getStatusCode());

//System.out.println(response.getContentType());

// filter for content type

// will apply simple rejection of spurious text/html responses; could enhance this with command-line option to whitelist

String contentType = response.getResponseHeaderValue("Content-Type");

if (contentType.contains("text/html")) return;

// determine file name to use; derive dynamically from request or response headers if not specified by user

// 1: user

String saveFile = s_saveFile;

// 2: response Content-Disposition

if (saveFile == null) {

Pattern p = Pattern.compile("filename=\"(.*)\"");

Matcher m;

List<NameValuePair> headers = response.getResponseHeaders();

for (NameValuePair header : headers) {

String name = header.getName();

String value = header.getValue();

//System.out.println(name+" : "+value);

if (name.equals("Content-Disposition")) {

m = p.matcher(value);

if (m.find())

saveFile = m.group(1);

} // end if

} // end for

if (saveFile != null) saveFile = sanitizeForFileName(saveFile);

// 3: request URL

if (saveFile == null) {

WebRequest request = response.getWebRequest();

File requestFile = new File(request.getUrl().getPath());

saveFile = requestFile.getName(); // just basename

} // end if

} // end if

getFileResponse(response,saveFile);

} // end webWindowContentChanged()

public void webWindowOpened(WebWindowEvent event) {}

public void webWindowClosed(WebWindowEvent event) {}

};

// initialize browser

WebClient webClient = new WebClient(BrowserVersion.FIREFOX_45);

webClient.getOptions().setCssEnabled(false);

webClient.getOptions().setJavaScriptEnabled(true); // required for JavaScript-powered links

webClient.getOptions().setThrowExceptionOnScriptError(false);

webClient.getOptions().setThrowExceptionOnFailingStatusCode(false);

// 1: get home page

HtmlPage page;

try { page = webClient.getPage(url); } catch (IOException e) { throw new Exception("error: could not get URL \""+url+"\".",e); }

//page.getEnclosingWindow().setName("main window");

// 2: navigate through frames as specified by the user

for (int i = 0; i < frameXPaths.size(); ++i) {

String frameXPath = frameXPaths.get(i);

List<?> elemList = page.getByXPath(frameXPath);

if (elemList.size() != 1) throw new Exception("error: frame "+(i+1)+" xpath \""+frameXPath+"\" returned "+elemList.size()+" elements on page \""+page.getTitleText()+"\" >>>\n"+page.asXml()+"\n<<<.");

if (!(elemList.get(0) instanceof BaseFrameElement)) throw new Exception("error: frame "+(i+1)+" xpath \""+frameXPath+"\" returned a non-frame element on page \""+page.getTitleText()+"\" >>>\n"+page.asXml()+"\n<<<.");

BaseFrameElement frame = (BaseFrameElement)elemList.get(0);

Page enclosedPage = frame.getEnclosedPage();

if (!(enclosedPage instanceof HtmlPage)) throw new Exception("error: frame "+(i+1)+" encloses a non-HTML page.");

page = (HtmlPage)enclosedPage;

} // end for

// 3: get the target anchor element by xpath

List<?> elemList = page.getByXPath(anchorXPath);

if (elemList.size() != 1) throw new Exception("error: anchor xpath \""+anchorXPath+"\" returned "+elemList.size()+" elements on page \""+page.getTitleText()+"\" >>>\n"+page.asXml()+"\n<<<.");

if (!(elemList.get(0) instanceof HtmlAnchor)) throw new Exception("error: anchor xpath \""+anchorXPath+"\" returned a non-anchor element on page \""+page.getTitleText()+"\" >>>\n"+page.asXml()+"\n<<<.");

HtmlAnchor anchor = (HtmlAnchor)elemList.get(0);

// 4: click the target anchor with the appropriate confirmation dialog handler and content handler

webClient.setConfirmHandler(s_downloadConfirmHandler);

webClient.addWebWindowListener(s_downloadWebWindowListener);

anchor.click();

webClient.setConfirmHandler(null);

webClient.removeWebWindowListener(s_downloadWebWindowListener);

System.exit(0);

} // end main()

public static void getFileResponse(WebResponse response, String fileName ) {

InputStream inputStream = null;

OutputStream outputStream = null;

// write the inputStream to a FileOutputStream

try {

System.out.print("streaming file to disk...");

inputStream = response.getContentAsStream();

// write the inputStream to a FileOutputStream

outputStream = new FileOutputStream(new File(fileName));

int read = 0;

byte[] bytes = new byte[1024];

while ((read = inputStream.read(bytes)) != -1)

outputStream.write(bytes, 0, read);

System.out.println("done");

} catch (IOException e) {

e.printStackTrace();

} finally {

if (inputStream != null) {

try {

inputStream.close();

} catch (IOException e) {

e.printStackTrace();

} // end try-catch

} // end if

if (outputStream != null) {

try {

//outputStream.flush();

outputStream.close();

} catch (IOException e) {

e.printStackTrace();

} // end try-catch

} // end if

} // end try-catch

} // end getFileResponse()

public static String sanitizeForFileName(String unsanitizedStr) {

return unsanitizedStr.replaceAll("[^\040-\176]","_").replaceAll("[/\\<>|:*?]","_");

} // end sanitizeForFileName()

} // end class DownloadFileByXPath

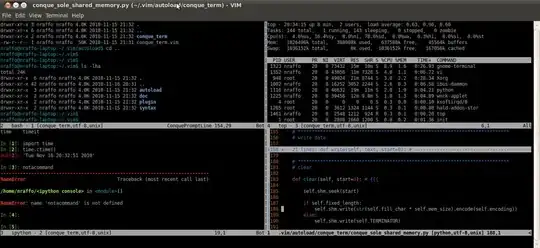

Below is a demo of me running the main class on my system. I've snipped out most of HtmlUnit's verbose output. I'll explain the command-line arguments afterward.

ls;

## bin/ src/

CLASSPATH="bin;C:/cygwin/usr/local/share/htmlunit-latest/*" java com.bgoldst.DownloadFileByXPath "http://www.worldvaluessurvey.org/WVSDocumentationWV4.jsp > //iframe[@id='frame1'] > //iframe[@id='frameDoc']" "//a[contains(text(),'WVS_2000_Questionnaire_Root')]";

## Jul 10, 2016 1:34:34 PM com.gargoylesoftware.htmlunit.IncorrectnessListenerImpl notify

## WARNING: Obsolete content type encountered: 'application/x-javascript'.

## Jul 10, 2016 1:34:34 PM com.gargoylesoftware.htmlunit.IncorrectnessListenerImpl notify

## WARNING: Obsolete content type encountered: 'application/x-javascript'.

##

## ... snip ...

##

## Jul 10, 2016 1:34:45 PM com.gargoylesoftware.htmlunit.IncorrectnessListenerImpl notify

## WARNING: Obsolete content type encountered: 'text/javascript'.

## streaming file to disk...done

##

ls;

## bin/ F00001316-WVS_2000_Questionnaire_Root.pdf* src/

CLASSPATH="bin;C:/cygwin/usr/local/share/htmlunit-latest/*" Here I set the $CLASSPATH for my system using a variable-assignment prefix (note: I was running in the Cygwin bash shell). The .class file I compiled into bin, and I've installed the HtmlUnit JARs into my Cygwin system directory structure, which is probably slightly unusual.java com.bgoldst.DownloadFileByXPath Obviously this is the command word and the name of the main class to execute."http://www.worldvaluessurvey.org/WVSDocumentationWV4.jsp > //iframe[@id='frame1'] > //iframe[@id='frameDoc']" This is the URL and frame XPath expressions. Your target link is nested under two iframes, thus requiring the two XPath expressions. You can find the id attributes in the source, either by viewing the raw HTML or using a web development tool (Firebug is my favorite)."//a[contains(text(),'WVS_2000_Questionnaire_Root')]" Finally, this is the actual XPath expression for the target link within the inner iframe.

I omitted the file name argument. As you can see, the code correctly derived the name of the file from the request URL.

I recognize that this is a lot of trouble to go through to download a file, but for web scraping in general, I really think the only robust and viable approach is to go the whole nine yards and use a full headless browser engine. It may be best to entirely separate the task of downloading these files from Rland, and instead implement the entire scraping system using a Java application, maybe supplemented with some shell scripts for a more flexible front end. Unless you're working with download URLs that were designed for no-frills one-shot HTTP requests by clients like curl, wget, and R, using R for web scraping is probably not a good idea. That's my two cents.