I'm building a client-side app that uses PDF.js to parse the contents of a selected PDF file, and I'm running into a strange issue.

Everything else seems to be working great. The code successfully loads the PDF.js document object, loops through the pages of the document, and gets the textContent for each page.

After the code below runs and I inspect the data in the browser tools, I notice that each page's textContent object contains the text of the entire document, not only the text from that page.

Has anybody experienced this before?

I pulled (and modified) most of the code I'm using from PDF.js posts here. It's pretty straightforward and seems to perform exactly as expected, aside from this issue:

    testLoop: function (event) {
        var file = event.target.files[0];
        var fileReader = new FileReader();

        fileReader.readAsArrayBuffer(file);

        fileReader.onload = function () {
            var typedArray = new Uint8Array(this.result);

            PDFJS.getDocument(typedArray).then(function (pdf) {
                for (var i = 1; i <= pdf.numPages; i++) {
                    pdf.getPage(i).then(function (page) {
                        page.getTextContent().then(function (textContent) {
                            console.log(textContent);
                        });
                    });
                }
            });
        };
    },

Additionally, the sizes of the returned textContent objects differ slightly from page to page, even though every object ends with the same item: the last bit of text in the whole document.

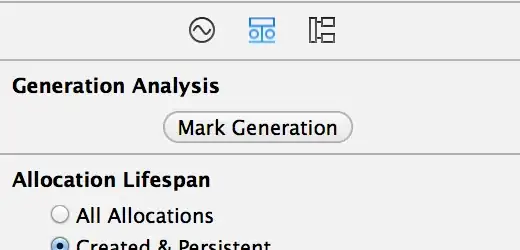

Here is an image of my inspector to illustrate that the objects are all very similarly sized.

From manually inspecting the objects in the inspector shown, I can see that the data for Page #1, for example, should really consist of only about 140 array items, so why does the object for that page contain roughly 700? And why the variation?
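
To make the per-page sizes easier to compare than by clicking through the inspector, here is a rough sketch of how the counts could be collected in page order. `collectPageItemCounts` is a hypothetical helper name, not part of PDF.js. It only relies on the `numPages`, `getPage` and `getTextContent` calls already used above, and it assumes the resolved textContent is either an array itself or an object with an `items` array, which may not hold for every PDF.js version.

```javascript
// Hypothetical helper (not part of PDF.js): resolves to one entry per page,
// in page order, with the number of text items reported for that page.
function collectPageItemCounts(pdf) {
    var pagePromises = [];

    for (var i = 1; i <= pdf.numPages; i++) {
        pagePromises.push(
            pdf.getPage(i).then(function (page) {
                return page.getTextContent();
            }).then(function (textContent) {
                // Assumption: textContent is either an array of text items
                // or an object exposing them as `items`.
                var items = Array.isArray(textContent) ? textContent : textContent.items;
                return items.length;
            })
        );
    }

    // Promise.all keeps the results in the order the promises were pushed,
    // so index 0 is page 1 regardless of which page finishes first.
    return Promise.all(pagePromises).then(function (counts) {
        return counts.map(function (count, index) {
            return { page: index + 1, itemCount: count };
        });
    });
}
```

Inside the `getDocument` callback this could be called as `collectPageItemCounts(pdf).then(function (rows) { console.table(rows); });`, which would show side by side whether Page #1 reports about 140 items or about 700.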