I want to get the most frequently occurring string in a column, within a given window, and have this value in a new column (I am using PySpark).

This is what my table looks like.

| window | label | value |
|--------|-------|-------|
| 123    | a     | 54    |
| 123    | a     | 45    |
| 123    | a     | 21    |
| 123    | b     | 99    |
| 123    | b     | 78    |

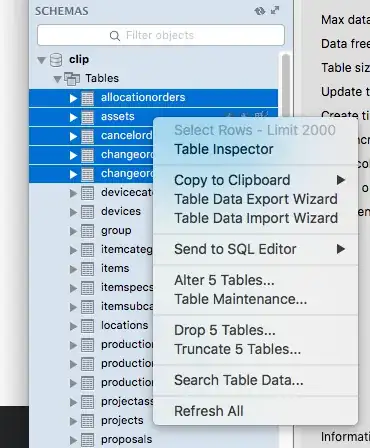

I'm doing some aggregation, and at the moment I'm grouping by both window and label.

    sqlContext.sql("SELECT avg(value) AS avgValue FROM table GROUP BY window, label")

This returns one average for window = 123 and label = a (40.0 for the rows above) and another for window = 123 and label = b (88.5).

What I am trying to do is order the labels by how frequently they occur, in descending order, so that in my SQL statement I can then do:

    SELECT first(label) AS majLabel, avg(value) AS avgValue FROM table GROUP BY window

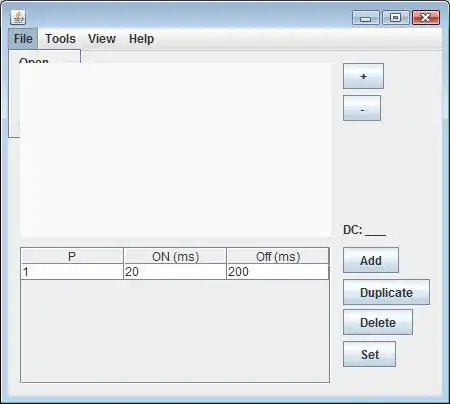
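For reference, the result that query is meant to produce can be pinned down in plain Python on the sample rows: the most frequent label per window plus the average over all of that window's rows. This is a minimal sketch, not Spark code; the helper name `majority_label_and_avg` is hypothetical, and breaking ties between equally frequent labels alphabetically is an assumption the question does not specify.

```python
from collections import Counter, defaultdict

# Sample rows from the table above: (window, label, value).
rows = [(123, "a", 54), (123, "a", 45), (123, "a", 21), (123, "b", 99), (123, "b", 78)]


def majority_label_and_avg(rows):
    """Hypothetical helper: return {window: (majLabel, avgValue)}.

    Ties between equally frequent labels go to the alphabetically smallest label.
    """
    label_counts = defaultdict(Counter)  # window -> Counter of labels
    values = defaultdict(list)           # window -> all values in that window
    for window, label, value in rows:
        label_counts[window][label] += 1
        values[window].append(value)
    result = {}
    for w, counts in label_counts.items():
        # Highest count first, then alphabetical label as the tie-break.
        maj = min(counts, key=lambda lbl: (-counts[lbl], lbl))
        result[w] = (maj, sum(values[w]) / len(values[w]))
    return result


print(majority_label_and_avg(rows))  # {123: ('a', 59.4)}
```

Label `a` occurs three times against twice for `b`, and the average runs over all five values (297 / 5).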

I'm trying to do this with a window function, but I can't quite get it to work.

    from pyspark.sql import Window

    group = ["window"]
    # Partition rows by the "window" column
    w = Window.partitionBy(*group)