I have a pandas DataFrame with roughly 50K rows and 9.5K columns. The data is binary: it contains only 1s and 0s, and most of the entries are 0.

Think of it as user-item purchase data, where a cell is 1 if the user purchased the item and 0 otherwise. Users are rows and items are columns.

353 0 0 0 0 0 0 0 0 0 0 ... 0 0 0 0 0 0 0 0 0 0

354 0 0 0 0 0 0 0 0 0 0 ... 0 0 0 0 0 0 0 0 0 0

355 0 0 0 0 0 0 0 0 0 0 ... 0 0 0 0 0 0 0 0 0 0

356 0 0 0 0 0 0 0 0 0 0 ... 0 0 0 0 0 0 0 0 0 0

357 0 0 0 0 0 0 0 0 0 0 ... 0 0 0 0 0 0 0 0 0 0

I want to split it into training, validation and test sets. However, it is not going to be a normal split by rows.

What I want is for each of the validation and test sets to keep between 2 and 4 columns from the original data, and only columns that are non-zero.

So if my original data has 9.5K columns for each user, I first keep only, say, 1500 or so columns. Then I split this sampled data into train and test by keeping about 1495-1498 columns in train and 2-5 columns in test/validation. The columns that go into test are ONLY ones that are non-zero. Training can have both zero and non-zero columns.

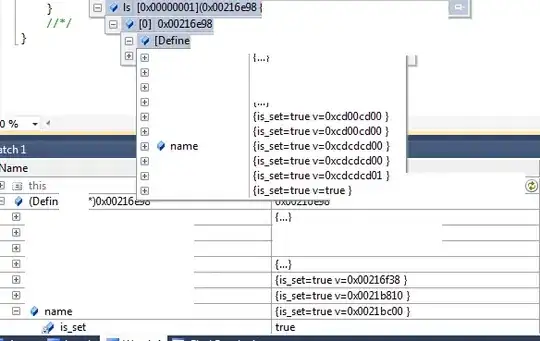

I also want to keep the item name/index of the columns that are retained in test/validation.

I don't want to run a loop that checks each cell value and copies it into the next table.
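To make the requirement concrete, here is a minimal sketch of the kind of split described above, run on a small toy frame. Everything named here is made up for illustration: the function `holdout_split`, its parameters `n_keep_cols`, `min_hold`, `max_hold` and `seed`, and the toy data. It assumes the hold-out count is drawn per user, and that a user with fewer non-zero items than the drawn count simply has all of them held out. It works on the coordinates of the non-zero cells rather than visiting every cell.

```python
import numpy as np
import pandas as pd


def holdout_split(df, n_keep_cols=1500, min_hold=2, max_hold=4, seed=0):
    """Hypothetical helper: sample item columns, then hold out a few
    non-zero cells per user. Returns (train frame, held-out pairs)."""
    rng = np.random.default_rng(seed)

    # 1. Keep a random subset of item columns (original column order preserved).
    n = min(n_keep_cols, df.shape[1])
    pos = np.sort(rng.choice(df.shape[1], size=n, replace=False))
    sub = df.iloc[:, pos]
    X = sub.to_numpy()

    # 2. Collect the coordinates of the non-zero cells and shuffle them.
    rows, cols = np.nonzero(X)
    order = rng.permutation(len(rows))
    nz = pd.DataFrame({"row": rows[order], "col": cols[order]})

    # 3. Number each user's non-zero cells in shuffled order (0, 1, 2, ...)
    #    and hold out the first k of them, with k drawn per user.
    rank = nz.groupby("row").cumcount().to_numpy()
    k = rng.integers(min_hold, max_hold + 1, size=X.shape[0])
    held = nz[rank < k[nz["row"].to_numpy()]]

    # 4. Train is the sampled frame with the held-out cells set to 0.
    train = X.copy()
    train[held["row"].to_numpy(), held["col"].to_numpy()] = 0
    train_df = pd.DataFrame(train, index=sub.index, columns=sub.columns)

    # 5. Test keeps the user and item labels of the held-out cells.
    test_df = pd.DataFrame({
        "user": sub.index[held["row"].to_numpy()],
        "item": sub.columns[held["col"].to_numpy()],
    }).reset_index(drop=True)
    return train_df, test_df


# Toy data: 6 users x 20 items, with a fixed pattern of 1s.
pattern = (np.arange(6)[:, None] + np.arange(20)) % 3 == 0
df = pd.DataFrame(
    pattern.astype(int),
    index=[f"u{i}" for i in range(6)],
    columns=[f"item{j}" for j in range(20)],
)
train, test = holdout_split(df, n_keep_cols=15, seed=1)
```

Under these assumptions `test` is a long table of (user, item) pairs, so the item names are kept, and a validation set could be carved out by calling the same function again on `train` with `n_keep_cols` set to the number of columns already kept. I have not checked how this behaves at the full 50K x 9.5K size.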

Any idea?

EDIT 1:

So this is what I am trying to achieve.