I am trying to grab a sample of data from every column in every table across about 5 schemas. Below is an example that gets this data from just 1 schema (to run it, replace "sde" with your own schema name). This piece runs fine:

select CASE when lead(ROWNUM) over(order by ROWNUM) is null then
'select '||''''||T.TABLE_NAME||''''||' as TABLE_NAME,'||''''||T.COLUMN_NAME||''''||' as COLUMN_NAME, cast('|| T.COLUMN_NAME ||' as VarChar2(50)) as SAMPLE_DATA from sde.'||T.TABLE_NAME ||' where '||T.COLUMN_NAME||' is not null and ROWNUM=1;'
else
'select '||''''||T.TABLE_NAME||''''||' as TABLE_NAME,'||''''||T.COLUMN_NAME||''''||' as COLUMN_NAME, cast('|| T.COLUMN_NAME ||' as VarChar2(50)) as SAMPLE_DATA from sde.'||T.TABLE_NAME ||' where '||T.COLUMN_NAME||' is not null and ROWNUM=1 union all'
end as qry_txt
from all_tab_columns t where T.OWNER='SDE' and T.DATA_TYPE != 'BLOB' and T.DATA_TYPE != 'LONG'
ORDER BY ROWNUM asc, qry_txt asc

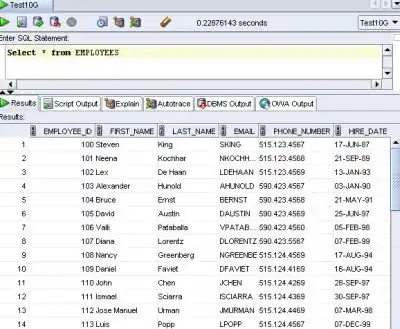

Running the query above generates one SELECT statement per column. Here is an example of 1 line of that output:

select 'HUD_TYPE' as TABLE_NAME,'HUD_TYPE_ID' as COLUMN_NAME, cast(HUD_TYPE_ID as VarChar2(50)) as SAMPLE_DATA from sde.HUD_TYPE where HUD_TYPE_ID is not null and ROWNUM=1 union all

The problem I'm facing is that when I run the complete set of unions, it never finishes. I've only been able to run a few hundred to a few thousand lines at a time, using:

select CASE when lead(ROWNUM) over(order by ROWNUM) is null then
'select '||''''||T.TABLE_NAME||''''||' as TABLE_NAME,'||''''||T.COLUMN_NAME||''''||' as COLUMN_NAME, cast('|| T.COLUMN_NAME ||' as VarChar2(50)) as SAMPLE_DATA from sde.'||T.TABLE_NAME ||' where '||T.COLUMN_NAME||' is not null and ROWNUM=1;'
else
'select '||''''||T.TABLE_NAME||''''||' as TABLE_NAME,'||''''||T.COLUMN_NAME||''''||' as COLUMN_NAME, cast('|| T.COLUMN_NAME ||' as VarChar2(50)) as SAMPLE_DATA from sde.'||T.TABLE_NAME ||' where '||T.COLUMN_NAME||' is not null and ROWNUM=1 union all'
end as qry_txt
from all_tab_columns t where T.OWNER='SDE' and T.DATA_TYPE != 'BLOB' and T.DATA_TYPE != 'LONG'
ORDER BY ROWNUM asc, qry_txt asc
OFFSET 4800 ROWS FETCH NEXT 400 ROWS ONLY; --Using this method to grab the last few hundred lines so my case statement remains valid for the demo

This particular schema is the smallest of the bunch, with only 5,000 rows to return. Is what I'm trying to do an impossible task for an on-the-fly query? Or is there a way to make it more efficient, or to break it up into loops that grab chunks? I'm trying to avoid involving our devs and having tables created, ETLs built, etc. I'm not a SQL expert, but if I'm pointed in the right direction, I can hack it out. :)
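For reference, the "break this up into loops" idea could look something like the untested sketch below. It is an anonymous PL/SQL block that runs one small sample query per column with EXECUTE IMMEDIATE instead of building one giant UNION ALL. It assumes SELECT access on the SDE tables and that the client shows DBMS_OUTPUT (for example, SET SERVEROUTPUT ON in SQL*Plus or SQL Developer). The variable name v_sample and the output format are made up for illustration, and whether this is actually faster than the union approach is an assumption, not something measured.

```sql
-- Sketch only: samples one non-null value per column, one query at a time.
-- Assumes SELECT access on the schema and SET SERVEROUTPUT ON in the client.
DECLARE
  v_sample VARCHAR2(200);  -- hypothetical holder for the sampled value
BEGIN
  FOR c IN (
    SELECT owner, table_name, column_name
    FROM   all_tab_columns
    WHERE  owner = 'SDE'                        -- replace with your schema
    AND    data_type NOT IN ('BLOB', 'LONG')
    ORDER  BY table_name, column_id
  ) LOOP
    BEGIN
      -- Same per-column query as the generated text above, with quoted identifiers
      EXECUTE IMMEDIATE
        'select cast("' || c.column_name || '" as VarChar2(50)) from "'
        || c.owner || '"."' || c.table_name
        || '" where "' || c.column_name || '" is not null and ROWNUM = 1'
        INTO v_sample;
      DBMS_OUTPUT.PUT_LINE(c.table_name || '.' || c.column_name || ' = ' || v_sample);
    EXCEPTION
      WHEN NO_DATA_FOUND THEN
        -- Table is empty or the column is null in every row
        DBMS_OUTPUT.PUT_LINE(c.table_name || '.' || c.column_name || ' = (no data)');
      WHEN OTHERS THEN
        -- e.g. a data type that cannot be cast to VARCHAR2; report it and keep going
        DBMS_OUTPUT.PUT_LINE(c.table_name || '.' || c.column_name || ' failed: ' || SQLERRM);
    END;
  END LOOP;
END;
/
```

Because each column gets its own small statement, one bad column only produces a "failed" line rather than breaking the whole run, and repeating this for the other schemas would just mean changing the owner filter.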

Thanks in advance.