I have two tables and I would like to append them so that only all the data in table A is retained and data from table B is only added if its key is unique (Key values are unique in table A and B however in some cases a Key will occur in both table A and B).

I think the way to do this will involve some sort of filtering join (anti-join) to get values in table B that do not occur in table A then append the two tables.

I am familiar with R and this is the code I would use to do this in R.

library("dplyr")

## Filtering join to remove values already in "TableA" from "TableB"

FilteredTableB <- anti_join(TableB,TableA, by = "Key")

## Append "FilteredTableB" to "TableA"

CombinedTable <- bind_rows(TableA,FilteredTableB)

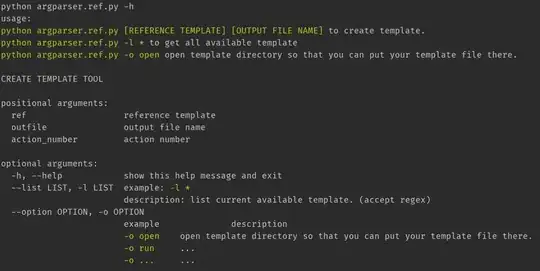

How would I achieve this in python?