From your question it is not clear whether you want to filter duplicates by row or duplicates by column. Let me describe both to make sure your question is addressed completely.

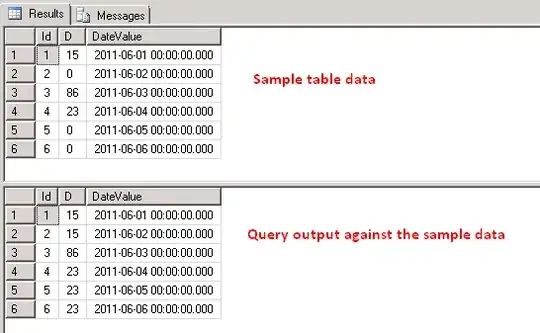

In Example 1, you can see that we have duplicate rows:

To filter them, just add the keyword DISTINCT to your query, as follows:

    SELECT DISTINCT * FROM myTable;

It filters the duplicate rows and returns:

Hence, you don't need a least or greatest function in this case.

In Example 2, you can see that we have duplicates in the columns:

Here, SELECT DISTINCT * FROM myTable will still return all 4 rows, because no two rows are identical in every column.
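To see why, here is a minimal sketch with made-up data (the table variable @t and its values are my own assumption, not the data from the example above; the multi-row VALUES syntax needs SQL Server 2008 or later):

```sql
-- Hypothetical sample data: values repeat within columns, but no two rows are fully identical.
DECLARE @t TABLE (Col1 nvarchar(10), Col2 int, Col3 int);

INSERT INTO @t (Col1, Col2, Col3)
VALUES (N'A', 1, 1), (N'A', 2, 1), (N'B', 3, 4), (N'B', 3, 5);

-- DISTINCT compares whole rows, so all 4 rows come back.
SELECT DISTINCT * FROM @t;

-- Restricting the select list to Col1 gives 2 rows (A and B), but Col2 and Col3 are lost.
SELECT DISTINCT Col1 FROM @t;
```

So DISTINCT alone can either keep every column or de-duplicate on one column, but not both at once.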

If only the first column should be considered for the filtering, that can be achieved with the following query:

    -- Note: TOP 1 without ORDER BY returns whichever matching row the engine finds first
    SELECT DISTINCT t.Col1,
        (SELECT TOP 1 Col2 FROM myTable ts WHERE t.Col1 = ts.Col1) Col2,
        (SELECT TOP 1 Col3 FROM myTable ts WHERE t.Col1 = ts.Col1) Col3
    FROM myTable t

It will pick the first matching value in each column, so the result of the query will be:

The difference from Example 1 is that this query eliminates only the duplicate occurrences of the values in Col1 of myTable and then returns the related values of the other columns - hence the results in Col1 and Col2 are different.

Note:

- In this case you can't just join the table myTable, because then you would be forced to list the columns in the SELECT DISTINCT, which would return more rows than you want. Unfortunately, T-SQL does not offer something like SELECT DISTINCT ON (fieldname), i.e. you can't directly specify a single field to be distinct.

- You might have thought "why not use GROUP BY?" The answer: with GROUP BY you are forced either to specify all columns, which is technically equivalent to DISTINCT, or to use aggregate functions like MIN or MAX, which don't return what you want either.
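The GROUP BY point can be illustrated with a small sketch. The table variable @t and its values are hypothetical, chosen only to make the effect visible; they are not the data from the examples above.

```sql
-- Hypothetical sample data: two rows share Col1 = 'A'.
DECLARE @t TABLE (Col1 nvarchar(10), Col2 int, Col3 int);

INSERT INTO @t (Col1, Col2, Col3)
VALUES (N'A', 1, 9), (N'A', 2, 3), (N'B', 5, 5);

-- Each aggregate is evaluated per column, independently of the other columns.
SELECT Col1, MIN(Col2) AS Col2, MIN(Col3) AS Col3
FROM @t
GROUP BY Col1;
-- For Col1 = 'A' this yields Col2 = 1 and Col3 = 3,
-- a combination that does not exist as a row in the source data.
```

In other words, MIN and MAX can stitch together values from different rows, which is usually not what you want when the goal is to keep one of the original rows per Col1 value.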

A more advanced query (you might have seen it before!) which has the same result is:

    SELECT Col1, Col2, Col3
    FROM (
        SELECT *, ROW_NUMBER() OVER (PARTITION BY Col1 ORDER BY Col1) AS RowNumber
        FROM myTable
    ) t
    WHERE RowNumber = 1

This statement numbers each occurrence of a value in Col1 in the subquery and then takes the first row of each group of duplicates - which is effectively a grouping by Col1 (but without the disadvantages of GROUP BY).
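One thing to be aware of: because the query above orders by Col1 inside a partition on Col1, all rows within a partition tie, so which of them receives RowNumber 1 is left to the engine. If you care which row survives, a possible variation is to order by the remaining columns. This is only a sketch, assuming you want the row with the smallest Col2 (and the smallest Col3 as tie-breaker):

```sql
SELECT Col1, Col2, Col3
FROM (
    SELECT Col1, Col2, Col3,
           -- Number the rows per Col1 value; lowest Col2, then lowest Col3, gets number 1.
           ROW_NUMBER() OVER (PARTITION BY Col1 ORDER BY Col2, Col3) AS RowNumber
    FROM myTable
) t
WHERE RowNumber = 1;
```

Unlike the MIN/MAX approach, Col2 and Col3 here always come from the same source row.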

N.B. In the examples above, I am assuming a table definition like:

    CREATE TABLE [dbo].[myTable](
        [Col1] [nvarchar](max) NULL,
        [Col2] [int] NULL,
        [Col3] [int] NULL
    ) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]

For the examples above, we don't need to declare a primary key column. But generally speaking, you'll need a primary key in database tables to be able to reference rows efficiently.

If you want to permanently delete the rows you don't need, you should introduce a primary key, because then you can easily delete the rows that are not displayed, as follows (i.e. it is the inverse filter of the advanced query mentioned above):

    DELETE FROM [dbo].[myTable]
    WHERE myPK NOT IN
        (SELECT myPK
         FROM (
             SELECT *, ROW_NUMBER() OVER (PARTITION BY Col1 ORDER BY Col1) AS RowNumber
             FROM [dbo].[myTable]
         ) t
         WHERE RowNumber = 1)  -- keep one row per Col1 value, delete all others

This assumes you have added an auto-incrementing integer primary key myPK (you can easily do that with the table designer in SQL Server Management Studio).

Or you can execute the following query to add it to the existing table:

    BEGIN TRANSACTION
    GO
    ALTER TABLE dbo.myTable ADD
        myPK int NOT NULL IDENTITY (1, 1)
    GO
    ALTER TABLE dbo.myTable ADD CONSTRAINT
        PK_myTable PRIMARY KEY CLUSTERED (myPK)
        WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF,
              ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON)
        ON [PRIMARY]
    GO
    ALTER TABLE dbo.myTable SET (LOCK_ESCALATION = TABLE)
    GO
    COMMIT
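Once myPK exists, the delete can also be written against a common table expression, which I find easier to check before committing. This is an alternative sketch, not the statement shown earlier: the CTE name numbered is made up, and ordering by myPK is my assumption that the row with the lowest key should be the one that is kept.

```sql
BEGIN TRANSACTION;

WITH numbered AS (
    SELECT myPK, Col1, Col2, Col3,
           -- Lowest myPK per Col1 value gets RowNumber 1 and is kept.
           ROW_NUMBER() OVER (PARTITION BY Col1 ORDER BY myPK) AS RowNumber
    FROM dbo.myTable
)
DELETE FROM numbered
WHERE RowNumber > 1;  -- removes the underlying rows from dbo.myTable

-- Inspect what is left before making the change permanent.
SELECT myPK, Col1, Col2, Col3
FROM dbo.myTable
ORDER BY Col1, myPK;

-- COMMIT;   -- uncomment to keep the change
ROLLBACK;    -- or undo it
```

Running it with ROLLBACK first lets you verify the remaining rows without touching the data; swap in COMMIT once the result looks right.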

You can find some more examples here at MSDN.