I have been having issues reading a file that contains a mix of Arabic and Western text. I read the file into a TextBox as follows:

```csharp
tbx1.Text = File.ReadAllText(fileName.Text, Encoding.UTF8);
```

No matter which value I tried in place of `Encoding.UTF8`, the Arabic was displayed as garbled characters. The Western text displayed fine.

I thought the problem might be the way the TextBox was defined, but on startup I write some mixed Western/Arabic text to the TextBox, and it displays fine:

```csharp
tbx1.Text = "Start السلا عليكم" + Environment.NewLine + "Here";
```

I then opened Notepad, pasted the same text into it, and saved the file; Notepad's Save dialog asked which encoding to use.

When I ran my code on the saved file, it displayed all of the content correctly.

I examined the file and found 3 extra bytes at the beginning (not visible in Notepad):

Through subsequent research I found that these 3 bytes are the byte order mark (BOM), and that their presence is what enables `File.ReadAllText(fileName.Text, Encoding.UTF8);` to read and display the data as desired.
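To check for the BOM in code rather than by inspecting the file by hand, here is a minimal sketch. The UTF-8 BOM is the three bytes EF BB BF, which is exactly what `Encoding.UTF8.GetPreamble()` returns. `HasUtf8Bom` and the temporary files are hypothetical, for illustration only: `new UTF8Encoding(true)` writes the BOM (as Notepad appears to have done), while `new UTF8Encoding(false)` omits it.

```csharp
using System;
using System.IO;
using System.Linq;
using System.Text;

// Hypothetical helper: true when the bytes start with the UTF-8 BOM (EF BB BF).
static bool HasUtf8Bom(byte[] bytes)
{
    byte[] bom = Encoding.UTF8.GetPreamble(); // EF BB BF
    return bytes.Length >= bom.Length && bytes.Take(bom.Length).SequenceEqual(bom);
}

string text = "Start السلا عليكم";
string withBomPath = Path.GetTempFileName();
string noBomPath = Path.GetTempFileName();

// UTF8Encoding(true) emits the BOM when writing; UTF8Encoding(false) does not.
File.WriteAllText(withBomPath, text, new UTF8Encoding(true));
File.WriteAllText(noBomPath, text, new UTF8Encoding(false));

bool bomWritten = HasUtf8Bom(File.ReadAllBytes(withBomPath));
bool bomOmitted = !HasUtf8Bom(File.ReadAllBytes(noBomPath));
Console.WriteLine($"{bomWritten} {bomOmitted}"); // True True

File.Delete(withBomPath);
File.Delete(noBomPath);
```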

What puzzles me is that specifying `Encoding.UTF8` should already take care of this, BOM or no BOM.

The only way I can think of is to code up a step that adds these bytes to a copy of the file and then process that copy. But this seems rather long-winded. Is there a better way to do this, or is there a reason why `Encoding.UTF8` is not yielding the desired result?
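For what it's worth, `Encoding.UTF8` decodes genuine UTF-8 correctly whether or not a BOM is present, so the BOM itself should not be required. The sketch below round-trips the test string through BOM-less UTF-8 bytes (held in memory rather than in a file, purely for brevity) and reads them back with a `StreamReader` using `Encoding.UTF8`. If a BOM-less file still comes out garbled, that suggests its bytes are in some other encoding, in which case prepending a UTF-8 BOM to a copy would not fix it either.

```csharp
using System;
using System.IO;
using System.Text;

string original = "Start السلا عليكم";

// Encode as UTF-8; GetBytes never adds a BOM (preamble).
byte[] utf8NoBom = Encoding.UTF8.GetBytes(original);

// Decode through a StreamReader with Encoding.UTF8, as in the question.
string roundTrip;
using (var reader = new StreamReader(new MemoryStream(utf8NoBom), Encoding.UTF8))
{
    roundTrip = reader.ReadToEnd();
}

Console.WriteLine(roundTrip == original); // True
```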

Edit:

Still no luck despite trying the suggestion in the answer.

I cut the test data down to just Arabic, as follows:

Code as follows:

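```csharp
// 'using' closes the reader and the stream even if an exception is thrown.
using (FileStream fs = new FileStream(fileName.Text, FileMode.Open, FileAccess.Read))
using (StreamReader sr = new StreamReader(fs, Encoding.UTF8, detectEncodingFromByteOrderMarks: false))
{
    tbx1.Text = sr.ReadToEnd();
}
```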

I tried both `true` and `false` for the `detectEncodingFromByteOrderMarks` argument of the `StreamReader` constructor, but both give the same result.

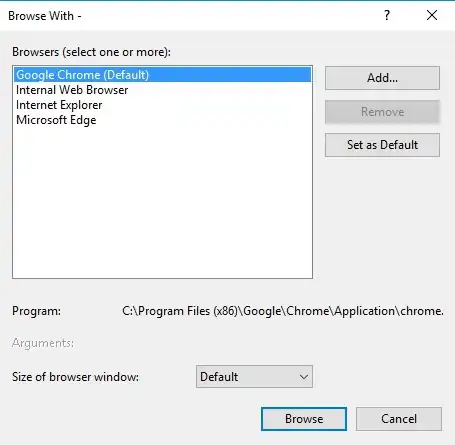
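A likely reason both settings behave the same: as far as I understand `StreamReader`, `detectEncodingFromByteOrderMarks` only matters when the stream actually starts with a BOM. If one is found it overrides the encoding passed in; otherwise the passed encoding is used. Assuming the test file has no BOM, the flag has nothing to act on. The sketch below (`ReadAsUtf8` is a hypothetical helper) shows this with UTF-16LE bytes in memory.

```csharp
using System;
using System.IO;
using System.Linq;
using System.Text;

// Hypothetical helper: read bytes via a StreamReader told to use UTF-8,
// with BOM detection switched on or off.
static string ReadAsUtf8(byte[] data, bool detectBom)
{
    using var reader = new StreamReader(new MemoryStream(data), Encoding.UTF8, detectBom);
    return reader.ReadToEnd();
}

// "Here" as UTF-16LE bytes, without and with the UTF-16LE BOM (FF FE).
byte[] noBom = Encoding.Unicode.GetBytes("Here");
byte[] withBom = Encoding.Unicode.GetPreamble().Concat(noBom).ToArray();

// With a BOM present, the flag decides whether the BOM overrides Encoding.UTF8.
string detected = ReadAsUtf8(withBom, detectBom: true);  // "Here"
string ignored = ReadAsUtf8(withBom, detectBom: false);  // U+FFFD chars plus embedded NULs
// Without a BOM there is nothing to detect, so both settings decode identically.
string noBomOn = ReadAsUtf8(noBom, detectBom: true);
string noBomOff = ReadAsUtf8(noBom, detectBom: false);

Console.WriteLine(detected == "Here");  // True
Console.WriteLine(ignored == "Here");   // False
Console.WriteLine(noBomOn == noBomOff); // True
```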

If I open the file in Notepad++ and select the Arabic ISO-8859-6 character set, it displays fine.
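If Notepad++ is right that the file is ISO-8859-6, then the bytes are not UTF-8 at all, and the reader needs that encoding instead. A minimal sketch, assuming .NET Core 3.0 or later (including .NET 5+), where legacy code pages must first be registered through `CodePagesEncodingProvider`; on .NET Framework they are built in and the registration line can be dropped. The temporary file is a hypothetical stand-in for the real one. If the file turns out to be Windows-1256 (the other common Arabic code page), `Encoding.GetEncoding(1256)` would be the one to try.

```csharp
using System;
using System.IO;
using System.Text;

// .NET Core 3.0+ / .NET 5+: register legacy code pages before requesting them.
Encoding.RegisterProvider(CodePagesEncodingProvider.Instance);
Encoding arabicIso = Encoding.GetEncoding(28596); // code page 28596 = ISO-8859-6 (Arabic)

// Hypothetical test file: the Arabic sample saved as ISO-8859-6, with no BOM.
string expected = "السلا عليكم";
string path = Path.GetTempFileName();
File.WriteAllBytes(path, arabicIso.GetBytes(expected));

// Decoding with the file's actual encoding gives the Arabic text back...
string asIso = File.ReadAllText(path, arabicIso);
// ...while decoding the same bytes as UTF-8 yields U+FFFD replacement characters.
string asUtf8 = File.ReadAllText(path, Encoding.UTF8);
File.Delete(path);

Console.WriteLine(asIso == expected);         // True
Console.WriteLine(asUtf8.Contains('\uFFFD')); // True
```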

Here is what it looks like in Notepad++ (and what I would like the TextBox to display):

I'm not sure whether the issue is in reading from the file or in writing to the TextBox.

I will try inspecting the data after the read to find out. For now, though, I'm puzzled.
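One way to do that inspection, sketched under the assumption that a console dump is acceptable: print the file's raw bytes as hex and the decoded string as code points. If the decoded string already contains U+FFFD (the Unicode replacement character), the damage happened while decoding, before the text reached the TextBox; correctly decoded Arabic letters would instead fall in the U+0600 to U+06FF range. `Hex` and `CodePoints` are hypothetical helper names, and the four sample bytes are arbitrary bytes that are not valid UTF-8, standing in for `File.ReadAllBytes(fileName.Text)`.

```csharp
using System;
using System.Linq;
using System.Text;

// Hypothetical helper: raw bytes as hex, e.g. "EF-BB-BF" for a UTF-8 BOM.
static string Hex(byte[] bytes) => BitConverter.ToString(bytes);

// Hypothetical helper: each UTF-16 char of a decoded string as U+XXXX.
static string CodePoints(string s) => string.Join(" ", s.Select(c => $"U+{(int)c:X4}"));

// Illustrative bytes standing in for File.ReadAllBytes(fileName.Text);
// they are not valid UTF-8.
byte[] raw = { 0xD3, 0xE4, 0xC7, 0xE5 };
string decoded = Encoding.UTF8.GetString(raw);

Console.WriteLine(Hex(raw));            // D3-E4-C7-E5
Console.WriteLine(CodePoints(decoded)); // shows U+FFFD where decoding failed
```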