Alternatively, we can plot the functions with apply() instead of a for loop. First, let's read in the data and define the range of x values to plot.

dt <- read.table(text="color a b

red 3 1.3

blue 9 1.8

purple 13 1.2

green 4 1.1

orange 7 0.9

yellow 6 2.1

brown 7 1.8", header=TRUE)

# vector for x values

x1 <- 0

x2 <- 1

n <- 100

x <- seq(x1, x2, by = ((x2-x1)/n))

Then we set up an empty plot into which the function curves will be drawn. Note that we calculate the maximum needed for the y axis in the max.y variable.

# height of the tallest curve at the right edge x2
# (assumes non-negative a and b, so every curve is increasing on [x1, x2])
max.y <- max(dt$a * (x2 ^ dt$b))

plot(x1, type="n", xlim=c(x1,x2), ylim=c(0, max.y), xlab="x", ylab="a*(x^b)")
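The y-axis limit above is evaluated at the right end of the range, which works here because every curve is increasing. As a more general sketch, one could evaluate every curve on the grid and take the overall range. The names y.mat and y.range are introduced here for illustration only and are not part of the original code.

```r
dt <- read.table(text = "color a b
red 3 1.3
blue 9 1.8
purple 13 1.2
green 4 1.1
orange 7 0.9
yellow 6 2.1
brown 7 1.8", header = TRUE)

x <- seq(0, 1, length.out = 101)

# one column per curve: y.mat[i, j] = a_j * x_i ^ b_j
y.mat <- mapply(function(a, b) a * x^b, dt$a, dt$b)

# overall y range across all curves (illustrative name)
y.range <- range(y.mat)

# empty plot sized to fit every curve
plot(NA, type = "n", xlim = range(x), ylim = y.range,
     xlab = "x", ylab = "a*(x^b)")
```

This costs one extra evaluation of each curve, but it does not rely on the curves being monotonic.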

Finally, we draw one line per row of the data, using that row's color, a and b.

apply(dt, 1, FUN=function(dt.row)

lines(x, as.numeric(dt.row[2]) * (x ^ as.numeric(dt.row[3])), col=dt.row[1]))

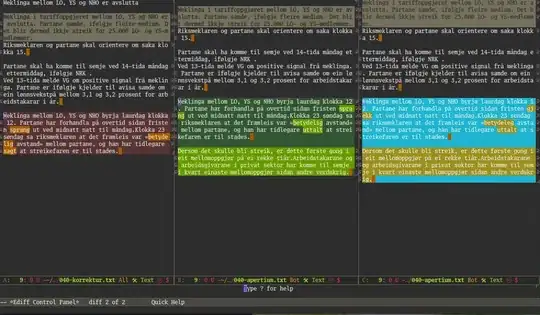
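Note that apply() converts the data frame to a character matrix before iterating, which is why the as.numeric() calls are needed. A sketch of one alternative, using base R's Map() to walk the three columns in parallel so that a and b stay numeric. The helper name draw.curve is hypothetical, and the y limit is simplified under the assumption that x2 = 1.

```r
dt <- read.table(text = "color a b
red 3 1.3
blue 9 1.8
purple 13 1.2
green 4 1.1
orange 7 0.9
yellow 6 2.1
brown 7 1.8", header = TRUE)

x <- seq(0, 1, length.out = 101)

# with x2 = 1 each curve tops out at its own a, so max(a) bounds the y axis
plot(NA, type = "n", xlim = c(0, 1), ylim = c(0, max(dt$a)),
     xlab = "x", ylab = "a*(x^b)")

# draw one curve and return its y values (illustrative helper)
draw.curve <- function(color, a, b) {
  y <- a * x^b
  lines(x, y, col = color)
  y
}

# Map() passes matching elements of each column to draw.curve;
# assigning the result also keeps the returned list from being printed
curves <- Map(draw.curve, as.character(dt$color), dt$a, dt$b)
```

The returned list is named by color, so curves[["purple"]] holds the y values of the purple line.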

Benchmarking

Following Roland's and shayaa's comments, here is a performance comparison. Roland's and shayaa's answers were each wrapped in full in a function of the same name (roland() and shayaa()), and the code from this answer, starting at the definition of x1, was wrapped in nya().
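For reference, a minimal sketch of what such a wrapper could look like. The exact function body used for the timings is not shown here, so the default arguments, the simplified y limit and the invisible() call are assumptions.

```r
dt <- read.table(text = "color a b
red 3 1.3
blue 9 1.8
purple 13 1.2
green 4 1.1
orange 7 0.9
yellow 6 2.1
brown 7 1.8", header = TRUE)

# assumed wrapper: everything from the x1 definition onwards
nya <- function(dt, x1 = 0, x2 = 1, n = 100) {
  x <- seq(x1, x2, by = (x2 - x1) / n)
  # simplified y limit; valid for non-negative a and b
  max.y <- max(dt$a * x2^dt$b)
  plot(x1, type = "n", xlim = c(x1, x2), ylim = c(0, max.y),
       xlab = "x", ylab = "a*(x^b)")
  # invisible() suppresses the NULL that apply() would otherwise print
  invisible(apply(dt, 1, function(dt.row)
    lines(x, as.numeric(dt.row[2]) * x^as.numeric(dt.row[3]),
          col = dt.row[1])))
}

# time the wrapper only if microbenchmark is installed
if (requireNamespace("microbenchmark", quietly = TRUE)) {
  print(microbenchmark::microbenchmark(nya(dt = dt), times = 100))
}
```

Timings depend on the machine and the graphics device, so the numbers will not match the ones below exactly.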

library(microbenchmark)

microbenchmark(roland(dt))

Unit: milliseconds

expr min lq mean median uq max neval

roland(dt) 18.09634 18.4124 19.2258 18.67313 19.17735 35.42943 100

microbenchmark(shayaa(dt=dt))

Unit: milliseconds

expr min lq mean median uq max neval

shayaa(dt = dt) 10.85788 11.04311 11.44358 11.22057 11.51216 18.19182 100

microbenchmark(nya(dt=dt))

Unit: milliseconds

expr min lq mean median uq max neval

nya(dt = dt) 18.26883 18.61892 19.02823 18.8229 19.18054 25.41353 100

I would conclude that performance is not a critical issue in this case: all three approaches take a median of roughly 11 to 19 ms per call. The user might choose an approach based on personal preference and extend it with dynamic options for the plot extent (x1, x2, max.y).