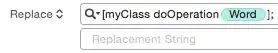

I am building a relatively simple Three.js application.

Outline: you move the camera along a path through a "world". The world is made up entirely of simple Sprites using SpriteMaterials with transparent textures. The textures are basically GIF images with alpha transparency.

The whole application works fine and performance is quite good. I reduced the camera's view depth (the far clipping distance) as much as possible, so only objects close to the camera are rendered.

My problem is that I have many different "objects" (all sprites) with many different textures. I reuse the same texture/material references for element types that appear multiple times in the scene (like trees and rocks).
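To illustrate the kind of reuse described above, here is a minimal sketch. The names `createMaterialCache` and `createMaterial` are hypothetical and not taken from the actual application; the factory is passed in so the sketch does not depend on how the materials are really built.

```javascript
// Hypothetical helper: hands out one material per texture URL, so every sprite
// of the same type (tree, rock, ...) shares a single material and texture.
// `createMaterial` is an injected factory. In a Three.js app it might be:
//   (url) => new THREE.SpriteMaterial({
//     map: new THREE.TextureLoader().load(url),
//     transparent: true,
//   })
function createMaterialCache(createMaterial) {
  const cache = new Map();
  return {
    // Returns the cached material for `url`, creating it on first use.
    get(url) {
      if (!cache.has(url)) {
        cache.set(url, createMaterial(url));
      }
      return cache.get(url);
    },
    // Number of distinct materials created so far.
    get size() {
      return cache.size;
    },
  };
}
```

With a cache like this, each sprite would be created as `new THREE.Sprite(materials.get(url))`, so the number of textures grows with the number of distinct images rather than with the number of sprites.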

Still, I'm getting to the point where memory usage climbs too high (above 2 GB) because of all the textures in use.

Now, when moving through the world, not all objects are visible from the beginning, even though I add all the sprites to the scene at the very start. Judging by the console, the objects that are not yet visible, and their textures, are only loaded once the camera moves further into the world and they actually enter the frustum. As that happens, memory usage gradually climbs.
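One way to watch this growth from the console is sketched below. `describeRendererMemory` is a hypothetical helper name; it only assumes that the object passed in exposes `info.memory.textures` and `info.memory.geometries`, as `THREE.WebGLRenderer` does.

```javascript
// Hypothetical helper: summarises what the renderer currently tracks.
// `renderer` is expected to look like a THREE.WebGLRenderer, whose
// `info.memory` object carries `textures` and `geometries` counters.
function describeRendererMemory(renderer) {
  const { textures, geometries } = renderer.info.memory;
  return `textures: ${textures}, geometries: ${geometries}`;
}
```

Logging `describeRendererMemory(renderer)` every second or so while moving along the path should show the texture count rising as new sprites enter the frustum.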

I cannot really use "object pooling" to build the world because of its layout.

To test, I added a function that removes objects from the scene and disposes their material.map as soon as the camera has passed them. Something like:

    this.env_sprites[i].material.map.dispose();
    this.env_sprites[i].material.dispose();
    this.env_sprites[i].geometry.dispose();
    this.scene.remove(this.env_sprites[i]);
    this.env_sprites.splice(i,1);
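A fuller sketch of such a cleanup pass, under a few assumptions: `removePassedSprites` and `hasPassed` are hypothetical names, `sprites` and `scene` stand in for `this.env_sprites` and `this.scene`, and shared materials and textures are only disposed once no remaining sprite uses them. As far as I know, Three.js sprites all share one internal geometry, so this version leaves the geometry alone.

```javascript
// Sketch of the cleanup pass. `hasPassed` is a hypothetical predicate that
// decides whether the camera has already moved past a sprite, e.g.
//   (sprite) => sprite.position.z > camera.position.z + margin
// (the axis and sign depend on the direction of travel).
function removePassedSprites(sprites, scene, hasPassed) {
  const candidates = new Set();
  let removed = 0;

  // Iterate backwards so splice() does not shift entries not yet visited.
  for (let i = sprites.length - 1; i >= 0; i--) {
    const sprite = sprites[i];
    if (!hasPassed(sprite)) continue;
    candidates.add(sprite.material);
    scene.remove(sprite);
    sprites.splice(i, 1);
    removed++;
  }

  // Materials and textures still referenced by remaining sprites must survive.
  const materialsInUse = new Set(sprites.map((s) => s.material));
  const texturesInUse = new Set(sprites.map((s) => s.material.map));
  const texturesToDispose = new Set();

  for (const material of candidates) {
    if (materialsInUse.has(material)) continue;
    if (material.map && !texturesInUse.has(material.map)) {
      texturesToDispose.add(material.map);
    }
    material.dispose();
  }
  for (const texture of texturesToDispose) {
    texture.dispose();
  }
  return removed;
}
```

The function returns the number of sprites removed, which makes it easy to log how much each pass actually frees.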

This works: garbage collection kicks in and memory is freed again. The problem is that when the camera moves backwards, the sprites have to be re-added to the scene and their materials/textures loaded again, which is quite heavy on performance and does not seem like the right approach to me.

Is there a known technique for handling such a setup with regard to memory management, that is, "removing" objects and textures and adding them again later (in the same place)?

I hope I have explained the issue well enough.

This is what the "world" looks like, to give you an impression: