I am new to Airflow. I am following a tutorial and have written the following code:

```python
from airflow import DAG
from airflow.operators.python_operator import PythonOperator
from datetime import datetime, timedelta

from models.correctness_prediction import CorrectnessPrediction

default_args = {
    'owner': 'abc',
    'depends_on_past': False,
    # Note: the Airflow docs recommend against a dynamic start_date such as datetime.now()
    'start_date': datetime.now(),
    'email': ['abc@xyz.com'],
    'email_on_failure': False,
    'email_on_retry': False,
    'retries': 1,
    'retry_delay': timedelta(minutes=5),
}


def correctness_prediction(**context):
    # With provide_context=True, the task context is passed in as keyword arguments
    CorrectnessPrediction.train()


dag = DAG('daily_processing', default_args=default_args)

task_1 = PythonOperator(
    task_id='print_the_context',
    provide_context=True,
    python_callable=correctness_prediction,
    dag=dag)
```
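A side note on the callable's signature: in Airflow 1.x, when `provide_context=True`, `PythonOperator` calls `python_callable` with the task context (`ds`, `ti`, and so on) as keyword arguments. A callable declared as `correctness_prediction(arg)` would therefore raise a `TypeError` only once the task actually runs, not when the script is executed. The sketch below simulates that calling convention with the standard library only; `run_like_python_operator` and the trimmed-down context are hypothetical stand-ins, not Airflow's API.

```python
from typing import Any, Callable, Dict


def run_like_python_operator(python_callable: Callable[..., Any],
                             context: Dict[str, Any]) -> Any:
    # Hypothetical stand-in for PythonOperator with provide_context=True:
    # the context dict is unpacked into keyword arguments.
    return python_callable(**context)


def callable_with_positional(arg):
    # Signature from the question: a single positional parameter.
    return "trained"


def callable_with_kwargs(**context):
    # Accepts any context keys it is given (ds, ti, ...).
    return "trained on " + context["ds"]


# Made-up, minimal context; the real Airflow context has many more keys.
fake_context = {"ds": "2018-01-01", "ti": None}

try:
    run_like_python_operator(callable_with_positional, fake_context)
except TypeError as exc:
    print("TypeError:", exc)

print(run_like_python_operator(callable_with_kwargs, fake_context))
```

Accepting `**context` (or `**kwargs`) keeps the callable compatible with whatever keys the context contains.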

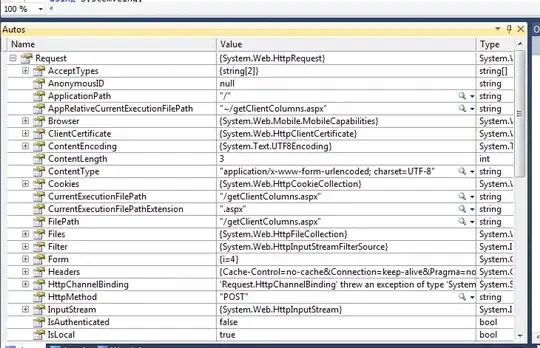

When I run the script, it doesn't show any errors, but when I check for DAGs in the web UI, the DAG doesn't appear under Menu -> DAGs.

However, I can see the scheduled job under Menu -> Browse -> Jobs.

I also cannot see anything in `$AIRFLOW_HOME/dags`. Is this expected? Can someone explain why?
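For context on where Airflow looks: running the file with `python` only executes it once (which is why no errors appear) and does not copy it anywhere. The scheduler and webserver parse Python files in the configured `dags_folder`, which by default is `$AIRFLOW_HOME/dags`, with `AIRFLOW_HOME` defaulting to `~/airflow`. The sketch below is a hypothetical standard-library helper, not part of Airflow, that resolves that default folder and lists files passing a rough approximation of Airflow's "safe mode" check (the file must mention both `airflow` and `dag`). It assumes the default configuration; a custom `dags_folder` in `airflow.cfg` would override it.

```python
import os
from pathlib import Path
from typing import List


def resolve_dags_folder() -> Path:
    # Assumes the default config: dags_folder = $AIRFLOW_HOME/dags,
    # with AIRFLOW_HOME falling back to ~/airflow when unset.
    airflow_home = os.environ.get("AIRFLOW_HOME", str(Path.home() / "airflow"))
    return Path(airflow_home).expanduser() / "dags"


def might_contain_dag(path: Path) -> bool:
    # Rough approximation of the safe-mode heuristic: only files that
    # mention both "airflow" and "dag" (case-insensitive) are parsed.
    content = path.read_bytes().lower()
    return b"airflow" in content and b"dag" in content


def candidate_dag_files(folder: Path) -> List[Path]:
    # An empty or missing folder means there is nothing for the UI to show.
    if not folder.is_dir():
        return []
    return sorted(p for p in folder.rglob("*.py") if might_contain_dag(p))
```

Under the default configuration, `print(resolve_dags_folder())` shows the folder where the DAG file would need to live (or be symlinked) for the scheduler and web UI to pick it up.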