It may be because Set is relatively new to JavaScript, but I haven't been able to find an article, on Stack Overflow or anywhere else, that discusses the performance difference between Set and Array in JavaScript. So what is the difference in performance between the two, specifically when it comes to removing, adding and iterating?


You cannot use them interchangeably, so it makes very little sense to compare them. – zerkms Aug 17 '16 at 23:23

Are you talking about a comparison between `Set` and `[]`, or `{}`? – eithed Aug 17 '16 at 23:24

Adding and iterating don't make much difference; removing and, most importantly, lookup do make a difference. – Bergi Aug 17 '16 at 23:37

possible duplicate of [Javascript ES6 computational/time complexity of collections](http://stackoverflow.com/q/31091772/1048572) – Bergi Aug 17 '16 at 23:38

@zerkms Why couldn't you use them interchangeably? I mean, in some cases I guess you couldn't (if you needed your list of values ordered, needed to be able to look up a specific value, or had a list of duplicate values), but if that is not the case you could use either a Set or an Array as a data collection. Unless there is something I don't understand. – snowfrogdev Aug 18 '16 at 00:05

"Why couldn't you use them interchangeably? I mean, in some cases I guess you couldn't": this does not make sense to me either. They are designed for different types of jobs. You can indeed cut a tree branch with a spoon, but would you compare a spoon and a chainsaw? `Set` is not ordered. `Array` is ordered. Not sure how you can swap them in any scenario at all. – zerkms Aug 18 '16 at 00:09

@zerkms Strictly speaking, Arrays aren't ordered either, but their use of an *index* allows them to be treated as if they are. ;-) The values in a Set are kept in insertion order. – RobG Aug 18 '16 at 01:08

@zerkms It's absurd to say comparing them makes "little sense". They are both collections. An array can absolutely be used in place of a set, and was for the roughly 20 years when Set didn't exist in JavaScript. – B T Jun 23 '20 at 17:18

@BT Given both are available, it makes no sense to compare them: you need one or the other. Their time complexity is standardised, so use that. The answers didn't reveal anything that is not in the standard. – zerkms Jun 24 '20 at 00:25

7 Answers

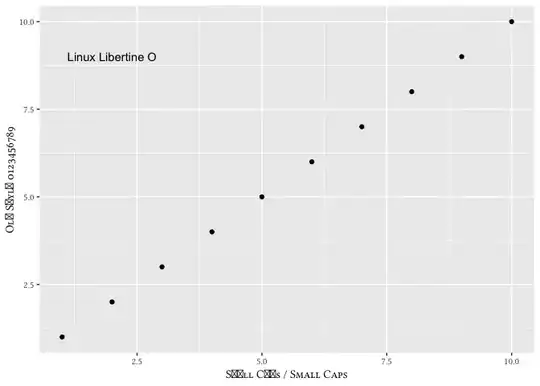

OK, I have tested adding, iterating over and removing elements from both an array and a set. I ran a "small" test with 10,000 elements and a "big" test with 100,000 elements. Here are the results.

Adding elements to a collection

It would seem that the .push array method is about 4 times faster than the .add set method, no matter the number of elements being added.

Iterating over and modifying elements in a collection

For this part of the test I used a for loop to iterate over the array and a for...of loop to iterate over the set. Again, iterating over the array was faster, and the gap seems to grow with the number of elements: the set took twice as long in the "small" test and almost four times as long in the "big" test.

Removing elements from a collection

Now this is where it gets interesting. I used a combination of a for loop and .splice to remove some elements from the array, and for...of with .delete to remove some elements from the set. In the "small" test, removing items from the set was about three times faster (2.6 ms vs 7.1 ms), but things changed drastically in the "big" test: removing items from the array took 1955.1 ms, while removing them from the set took only 83.6 ms, about 23 times faster.

Conclusions

At 10k elements, both tests took comparable times (array: 16.6 ms, set: 20.7 ms), but with 100k elements the set was the clear winner (array: 1974.8 ms, set: 83.6 ms), and only because of the removal step; otherwise the array was faster. I couldn't say exactly why that is.

I played around with some hybrid scenarios where an array was created and populated, then converted into a set from which some elements were removed, and the set was then converted back into an array. Although this performs much better than removing the elements from the array directly, the extra time needed to transfer to and from a set outweighs the gains of populating an array instead of a set. In the end, it is faster to deal only with a set. Still, it is an interesting idea: if you use an array as the data collection for a large dataset without duplicates, and you ever need to remove many elements in one operation, it could pay off to convert the array to a set, perform the removals, and convert the set back to an array.
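A minimal sketch of that hybrid approach, assuming the array holds no duplicate values (a Set would silently collapse them) and using a hypothetical helper name `removeViaSet`. This is not the code that produced the timings above:

```javascript
// Hypothetical helper: remove every element matching `shouldRemove`
// by routing the array through a Set and converting back.
// Assumes the array contains no duplicates; a Set would drop them.
function removeViaSet(arr, shouldRemove) {
  const set = new Set(arr); // O(n) copy into a Set
  for (const item of set) {
    if (shouldRemove(item)) {
      set.delete(item); // deleting the current value during for...of is safe
    }
  }
  return Array.from(set); // O(n) copy back, insertion order preserved
}

const people = [
  { name: "SMITH", sex: "Male" },
  { name: "JONES", sex: "Female" },
  { name: "BROWN", sex: "Male" },
];
const remaining = removeViaSet(people, p => p.sex === "Male");
console.log(remaining.map(p => p.name)); // [ 'JONES' ]
```

Both conversions are linear, which is why the round trip only pays off when the removals themselves would otherwise be expensive.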

Array code:

```javascript
var timer = function(name) {
  var start = new Date();
  return {
    stop: function() {
      var end = new Date();
      var time = end.getTime() - start.getTime();
      console.log('Timer:', name, 'finished in', time, 'ms');
    }
  };
};

var getRandom = function(min, max) {
  return Math.random() * (max - min) + min;
};

var lastNames = ['SMITH', 'JOHNSON', 'WILLIAMS', 'JONES', 'BROWN', 'DAVIS', 'MILLER', 'WILSON', 'MOORE', 'TAYLOR', 'ANDERSON', 'THOMAS'];

var genLastName = function() {
  var index = Math.round(getRandom(0, lastNames.length - 1));
  return lastNames[index];
};

var sex = ["Male", "Female"];

var genSex = function() {
  var index = Math.round(getRandom(0, sex.length - 1));
  return sex[index];
};

var Person = function() {
  this.name = genLastName();
  this.age = Math.round(getRandom(0, 100));
  this.sex = "Male";
};

// Add 100,000 people to the array.
var genPersons = function() {
  for (var i = 0; i < 100000; i++)
    personArray.push(new Person());
};

// Iterate over the array and randomly reassign each person's sex.
var changeSex = function() {
  for (var i = 0; i < personArray.length; i++) {
    personArray[i].sex = genSex();
  }
};

// Remove every "Male" entry in place; each splice shifts the rest of the array left.
var deleteMale = function() {
  for (var i = 0; i < personArray.length; i++) {
    if (personArray[i].sex === "Male") {
      personArray.splice(i, 1);
      i--; // re-check the element that moved into position i
    }
  }
};

var t = timer("Array");
var personArray = [];
genPersons();
changeSex();
deleteMale();
t.stop();

console.log("Done! There are " + personArray.length + " persons.");
```

Set code:

```javascript
var timer = function(name) {
  var start = new Date();
  return {
    stop: function() {
      var end = new Date();
      var time = end.getTime() - start.getTime();
      console.log('Timer:', name, 'finished in', time, 'ms');
    }
  };
};

var getRandom = function(min, max) {
  return Math.random() * (max - min) + min;
};

var lastNames = ['SMITH', 'JOHNSON', 'WILLIAMS', 'JONES', 'BROWN', 'DAVIS', 'MILLER', 'WILSON', 'MOORE', 'TAYLOR', 'ANDERSON', 'THOMAS'];

var genLastName = function() {
  var index = Math.round(getRandom(0, lastNames.length - 1));
  return lastNames[index];
};

var sex = ["Male", "Female"];

var genSex = function() {
  var index = Math.round(getRandom(0, sex.length - 1));
  return sex[index];
};

var Person = function() {
  this.name = genLastName();
  this.age = Math.round(getRandom(0, 100));
  this.sex = "Male";
};

// Add 100,000 people to the set.
var genPersons = function() {
  for (var i = 0; i < 100000; i++)
    personSet.add(new Person());
};

// Iterate over the set and randomly reassign each person's sex.
var changeSex = function() {
  for (var key of personSet) {
    key.sex = genSex();
  }
};

// Remove every "Male" entry; deleting the current value during for...of is safe.
var deleteMale = function() {
  for (var key of personSet) {
    if (key.sex === "Male") {
      personSet.delete(key);
    }
  }
};

var t = timer("Set");
var personSet = new Set();
genPersons();
changeSex();
deleteMale();
t.stop();

console.log("Done! There are " + personSet.size + " persons.");
```


Keep in mind that the values of a set are unique by default. So whereas `[1,1,1,1,1,1]` as an array would have length 6, as a set it would have size 1. It looks like your code could be generating sets of wildly different sizes than 100,000 items on each run because of this trait of Sets. You probably never noticed because you don't show the size of the set until after the entire script has run. – KyleFarris Oct 24 '16 at 20:21

@KyleFarris Unless I am mistaken, this would be true if there were duplicates in the set, like in your example `[1, 1, 1, 1, 1]`, but since each item in the set is actually an object with various properties, including a first name and last name randomly generated from a list of hundreds of possible names, a randomly generated age, a randomly generated sex and other randomly generated attributes, the odds of having two identical objects in the set are slim to none. – snowfrogdev Nov 01 '16 at 15:59

Actually, you're right in this case, because it seems Sets don't compare objects by their contents. So you could even have the same exact object `{foo: 'bar'}` 10,000 times in the set and it would have a size of 10,000. Same goes for arrays. It seems values are only unique for scalar values (strings, numbers, booleans, etc.). – KyleFarris Jan 11 '17 at 21:15

Curious whether you'd be willing to extend this to cover `WeakSet` as well? – Eric Hodonsky Aug 10 '17 at 18:23

Ask a new question and let me know. I'll write up a new answer comparing WeakSet performance with whatever else you want. – snowfrogdev Aug 10 '17 at 20:58

You could have the same exact *content of an object* `{foo: 'bar'}` many times in the Set, but not the *exact same object* (reference). Worth pointing out the subtle difference, IMO. – SimpleVar Sep 09 '17 at 09:32

You forgot to measure the most important reason to use a Set, the O(1) lookup: `has` vs `indexOf`. – Magnus Aug 29 '18 at 06:32

@Magnus I didn't forget. The question asked "Specifically, when it comes to removing, adding and iterating", so I tested for those. But you are absolutely right that one of Sets' advantages over other collections is lookup time. It is so obvious that I don't think we even need to test for it, although it could be fun to find out just how much faster they are. – snowfrogdev Aug 29 '18 at 11:09

I have been searching on the performance of Map vs Object and Set vs Array for a little while today (late 2018), and it seems that everything I'm finding on the topic was written in 2016 and comes to vastly different conclusions than what we know today. The examples in this answer run in 15,000 ms and 50 ms, respectively, for me. I think that Set and Map access speed has changed drastically in the last 2 years. – Eric Blade Nov 12 '18 at 07:32

I think it's pretty clear why the Set would be faster at removals: the array has to copy all the elements in linear time to do that, and the Set doesn't. – Casey Jul 31 '20 at 14:14

If you replace the splice call with a simple array filter, you will see the time drop from about 6,000 ms to around 40 ms: `var deleteMale = () => personArray = personArray.filter(e => e.sex !== 'Male');` – Pablo Werlang Oct 18 '22 at 21:32

As @Casey says, splicing needs to copy the elements, especially since the code deletes elements from the middle of the array. It could be interesting to see whether the same is true for `pop`, which only deletes from the end of the array (and also for `splice` only from the end or the front). There's an optimization that can be done when deleting only from the end of a dynamically sized array (implemented with a fixed-size array internally), where you only copy the array once the number of elements falls below half the size of the fixed array. – louisch Nov 09 '22 at 07:20
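Following up on the comments about `splice` versus `filter`, here is a sketch contrasting the answer's splice-in-a-loop removal with `filter`, plus a third in-place compaction variant that is not from the thread. The helper names and the test data (`make`, alternating sexes) are hypothetical, chosen so that exactly half the entries are removed; timings vary by engine, so no numbers are claimed here:

```javascript
// 1. In-place splice, as in the answer's deleteMale: each splice shifts
//    every later element left, so many removals cost roughly O(n^2).
function deleteWithSplice(arr, sex) {
  for (let i = 0; i < arr.length; i++) {
    if (arr[i].sex === sex) {
      arr.splice(i, 1);
      i--; // re-check the element that slid into position i
    }
  }
  return arr;
}

// 2. filter: one pass that copies the survivors into a new array, O(n).
function deleteWithFilter(arr, sex) {
  return arr.filter(p => p.sex !== sex);
}

// 3. In-place compaction: also O(n), without allocating a new array.
function deleteInPlace(arr, sex) {
  let write = 0;
  for (let read = 0; read < arr.length; read++) {
    if (arr[read].sex !== sex) arr[write++] = arr[read];
  }
  arr.length = write; // truncate the leftover tail
  return arr;
}

// Hypothetical test data: odd ids are "Male", even ids are "Female".
const make = n => Array.from({ length: n }, (_, i) => ({ id: i, sex: i % 2 ? "Male" : "Female" }));

for (const [label, fn] of [["splice", deleteWithSplice], ["filter", deleteWithFilter], ["in-place", deleteInPlace]]) {
  const data = make(20000);
  console.time(label);
  const remaining = fn(data, "Male");
  console.timeEnd(label);
  console.log(label, remaining.length); // 10000 for each variant
}
```

All three keep the same elements in the same order; only the cost of getting there differs.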

OBSERVATIONS:

- Set operations can be understood as snapshots within the execution stream.

- Set is not a definitive substitute for Array.

- The elements of a Set have no accessible indexes.

- The Set class complements the Array class; it is useful in scenarios where we need to store a collection on which to apply basic addition, deletion, checking and iteration operations.

I'm sharing some performance tests. Open your console and paste in the code below.

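Creating an array (125,000 elements):

```javascript
var n = 125000;
// Array.from builds [0, 1, ..., n - 1] without passing n arguments through Array.apply
var arr = Array.from( { length: n }, ( x, i ) => i );
console.info( arr.length ); // 125000
```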

1. Locating an Index

We compared the has method of Set with Array indexOf:

Array/indexOf (0.281ms) | Set/has (0.053ms)

```javascript
// Helpers
var checkArr = ( arr, item ) => arr.indexOf( item ) !== -1;
var checkSet = ( set, item ) => set.has( item );

// Vars
var set, result;

console.time( 'timeTest' );
result = checkArr( arr, 123123 );
console.timeEnd( 'timeTest' );

set = new Set( arr );
console.time( 'timeTest' );
checkSet( set, 123123 );
console.timeEnd( 'timeTest' );
```
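As a comment below points out, `includes` is the closer equivalent of `has`, and a single `console.time` around one call mostly measures noise. A rough sketch that repeats the lookup over many targets instead, assuming a hypothetical `timeLookups` helper and a global `performance.now()` (available in browsers and recent Node.js versions):

```javascript
// Hypothetical helper: time `fn` over many targets instead of one call.
function timeLookups(label, fn, targets) {
  let hits = 0;
  const start = performance.now();
  for (const t of targets) {
    if (fn(t)) hits++;
  }
  const ms = performance.now() - start;
  console.log(`${label}: ${ms.toFixed(2)} ms for ${targets.length} lookups (${hits} hits)`);
  return hits;
}

const n = 125000;
const arr = Array.from({ length: n }, (_, i) => i);
const set = new Set(arr);

// Even positions pick a random value that exists; odd positions pick n + i,
// which is never in the collection.
const targets = Array.from({ length: 2000 }, (_, i) =>
  i % 2 === 0 ? Math.floor(Math.random() * n) : n + i
);

const arrayHits = timeLookups("Array#includes", t => arr.includes(t), targets);
const setHits = timeLookups("Set#has", t => set.has(t), targets);
```

Mixing hits and misses at random positions gives an average case rather than a single hand-picked index.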

2. Adding a new element

We compare the add and push methods of the Set and Array objects respectively:

Array/push (1.612ms) | Set/add (0.006ms)

```javascript
console.time( 'timeTest' );
arr.push( n + 1 );
console.timeEnd( 'timeTest' );

set = new Set( arr );
console.time( 'timeTest' );
set.add( n + 1 );
console.timeEnd( 'timeTest' );

console.info( arr.length ); // 125001
console.info( set.size ); // 125001
```
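Note that in the test above the Set is rebuilt from `arr` after `n + 1` was pushed, so `set.add( n + 1 )` adds a value that is already present and leaves the Set unchanged. The two methods also differ in what they do and return, which a quick check shows:

```javascript
const arr = [1, 2, 3];
const set = new Set(arr);

const newLength = arr.push(3); // push returns the new length
const sameSet = set.add(3);    // add returns the Set itself (chainable)

console.log(newLength);        // 4
console.log(arr);              // [ 1, 2, 3, 3 ]  duplicates are kept
console.log(sameSet === set);  // true
console.log(set.size);         // 3  (3 was already present, so nothing changed)
```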

3. Deleting an element

When deleting elements, we have to keep in mind that Array and Set do not start on equal terms. Array has no native method for deleting an element by value, so an external function is necessary.

Array/deleteFromArr (0.356ms) | Set/delete (0.019ms)

```javascript
var deleteFromArr = ( arr, item ) => {
  var i = arr.indexOf( item );
  i !== -1 && arr.splice( i, 1 );
};

console.time( 'timeTest' );
deleteFromArr( arr, 123123 );
console.timeEnd( 'timeTest' );

set = new Set( arr );
console.time( 'timeTest' );
set.delete( 123123 );
console.timeEnd( 'timeTest' );
```
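Similarly, `set = new Set( arr )` runs after 123123 has already been removed from `arr`, so `set.delete( 123123 )` finds nothing to delete. A sketch that builds the Set first, so both collections actually remove the value. The `deleteFromArr` here is a variant of the helper above that returns a boolean, the way `Set.prototype.delete` does:

```javascript
// Variant of deleteFromArr that reports whether anything was removed.
function deleteFromArr(arr, item) {
  const i = arr.indexOf(item);
  if (i === -1) return false;
  arr.splice(i, 1);
  return true;
}

const n = 125000;
const arr = Array.from({ length: n }, (_, i) => i);
const set = new Set(arr); // built before any deletion, so both contain 123123

const removedFromArr = deleteFromArr(arr, 123123);
const removedFromSet = set.delete(123123);
const removedAgain = set.delete(123123); // already gone

console.log(removedFromArr, removedFromSet, removedAgain); // true true false
console.log(arr.length, set.size);                          // 124999 124999
```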



`Array.indexOf` should be `Array.includes` for them to be equivalent. I'm getting very different numbers in Firefox. – kagronick Jul 10 '19 at 19:16

I would be interested in the Array.includes vs. Set.has comparison... – Leopold Kristjansson Jan 16 '20 at 09:17

@LeopoldKristjansson I didn't write a comparison test, but we did timings on a production site with arrays of 24k items, and switching from Array.includes to Set.has was a tremendous performance boost! – sedot Oct 27 '20 at 16:51

If you want to recommend your own product or website, there are some [guidelines in place](/help/promotion) for doing so. Following them will help you avoid giving the impression that you're spamming. Please edit your post to explicitly state your affiliation. (If you're not actually affiliated, it may be worth mentioning that as well.) – TheMaster Oct 23 '22 at 16:30

These benchmarks are not very representative. For searching and deleting, they test the worst-case scenario for the array (element near the end) rather than the average case. For Set addition/deletion, you are only testing when the value is already present/not present respectively, so the Set isn't getting modified at all. The benchmarks make Set look much better than Array, when in a real scenario the difference will not be this dramatic. – Azmisov Feb 20 '23 at 21:36

Just the Property Lookup, little or zero writes

If property lookup is your main concern, here are some numbers.

JSBench tests https://jsbench.me/3pkjlwzhbr/1

```javascript
// https://jsbench.me/3pkjlwzhbr/1
// https://docs.google.com/spreadsheets/d/1WucECh5uHlKGCCGYvEKn6ORrQ_9RS6BubO208nXkozk/edit?usp=sharing
// JSBench forked from https://jsbench.me/irkhdxnoqa/2

var theArr = Array.from({ length: 10000 }, (_, el) => el)
var theSet = new Set(theArr)
var theObject = Object.assign({}, ...theArr.map(num => ({ [num]: true })))
var theMap = new Map(theArr.map(num => [num, true]))
var theTarget = 9000

// Array
function isTargetThereFor(arr, target) {
  const len = arr.length
  for (let i = 0; i < len; i++) {
    if (arr[i] === target) {
      return true
    }
  }
  return false
}

function isTargetThereForReverse(arr, target) {
  const len = arr.length
  // Start at the last valid index (len - 1) and include index 0
  for (let i = len - 1; i >= 0; i--) {
    if (arr[i] === target) {
      return true
    }
  }
  return false
}

function isTargetThereIncludes(arr, target) {
  return arr.includes(target)
}

// Set
function isTargetThereSet(numberSet, target) {
  return numberSet.has(target)
}

// Object
function isTargetThereHasOwnProperty(obj, target) {
  return obj.hasOwnProperty(target)
}

function isTargetThereIn(obj, target) {
  return target in obj
}

function isTargetThereSelectKey(obj, target) {
  // Returns the stored value (true here), not a boolean membership test
  return obj[target]
}

// Map
function isTargetThereMap(numberMap, target) {
  return numberMap.has(target)
}
```

Results:

- for loop
- for loop (reversed)
- array.includes(target)
- set.has(target)
- obj.hasOwnProperty(target)
- target in obj <- 1.29% slower
- obj[target] <- fastest
- map.has(target) <- 2.94% slower

Results from other browsers are most welcome; please update this answer.

You can use this spreadsheet to make a nice screenshot.

JSBench test forked from Zargold's answer.
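Keep in mind that the object variants above are not drop-in equivalents of `Set.has`. A small illustration of the differences: plain-object keys are converted to strings, `in` also sees inherited properties, and `obj[target]` returns the stored value rather than a membership flag:

```javascript
const obj = { 1: true, 2: false };
const set = new Set([1, 2]);
const map = new Map([[1, true], [2, false]]);

// Plain-object keys are strings, so "1" and 1 collide; Set and Map keep them distinct.
console.log("1" in obj, set.has("1")); // true false

// `in` walks the prototype chain; hasOwnProperty does not.
console.log("toString" in obj, obj.hasOwnProperty("toString")); // true false

// obj[target] returns the stored value, so a falsy value reads as "missing".
console.log(Boolean(obj[2]), 2 in obj, map.has(2)); // false true true
```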


@EdmundoDelGusto Yes, the higher the average, the better. Also, "Perf" stands for performance; the best one is rated at 100%. You can also run the tests (the jsbench link above) and see for yourself. – Qwerty Feb 22 '22 at 14:50

For the lookup side of your question (which, for an array, means iterating over it), I recently ran this test and found that Set far outperformed an Array of 10,000 items (around 10x as many operations could happen in the same timeframe), and, depending on the browser, either beat or lost to Object.hasOwnProperty in a like-for-like test. Another interesting point is that object key order is not simply insertion order (integer-like keys come first, in ascending order), whereas a Set in JavaScript always iterates its values in insertion order.

Both Set.has and object key lookup perform in what seems to be amortized O(1), but depending on the browser's implementation a single operation can take more or less time. It seems that most browsers implement `key in Object` faster than Set.has(). Even Object.hasOwnProperty, which includes an additional check on the key, is about 5% faster than Set.has(), at least for me on Chrome v86.

https://jsperf.com/set-has-vs-object-hasownproperty-vs-array-includes/1
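To illustrate the ordering point: plain objects list integer-like keys first in ascending numeric order, followed by string keys in insertion order, while a Set iterates strictly in insertion order. A quick check:

```javascript
const obj = {};
const set = new Set();

for (const key of ["b", "2", "a", "1"]) {
  obj[key] = true;
  set.add(key);
}

console.log(Object.keys(obj)); // [ '1', '2', 'b', 'a' ]  integer-like keys first
console.log([...set]);         // [ 'b', '2', 'a', '1' ]  pure insertion order
```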

Update: 11/11/2020: https://jsbench.me/irkhdxnoqa/2

In case you want to run your own tests in different browsers/environments: at the time this test ran, Chrome's V8 clearly optimized only for Objects. The following is a snapshot for Chrome v86 in November 2020.

- For loop: 104167.14 ops/s ± 0.22% (slowest)

- Array.includes: 111524.8 ops/s ± 0.24%, 1.07x more ops/s than the for loop (9k iterations for both)

- For loop reversed: 218074.48 ops/s ± 0.59%, 1.96x more ops/s than the non-reversed Array.includes (9k iterations)

- Set.has: 154744804.61 ops/s ± 1.88%, 709.6x more ops/s than the reversed for loop (which needs only 1k iterations, since the target is near the end)

- hasOwnProperty: 161399953.02 ops/s ± 1.81%, 1.043x more ops/s than Set.has

- key in myObject: 883055194.54 ops/s ± 2.08%, about 5x more ops/s than myObject.hasOwnProperty

Update 11/10/2022: Today I re-ran the same tests (two years after my original image) on Safari and Chrome and got some interesting results. TL;DR: Set is as fast as, if not faster than, `key in Object`, and much faster than Object.hasOwnProperty, in both browsers. Chrome has also somehow dramatically optimized Array.includes, to the point that it is in the same realm of speed as Object/Set lookup (whereas for loops take 1000+x longer to complete).

In Safari, Set is significantly faster than `key in Object`, and Object.hasOwnProperty is barely in the same realm of speed. All array variants (for loops/includes) are, as expected, dramatically slower than Set/Object lookups.

Snapshot 11/10/2022, tested on Safari v16.1, operations per second (higher = faster):

- mySet.has(key): 1,550,924,292.31

- key in myObject: 942,192,599.63 (39.25% slower; with Set you can perform around 1.6x more operations per second)

- myObject.hasOwnProperty(key): 21,363,224.51 (98.62% slower; you can perform about 72.6x more Set.has operations than hasOwnProperty checks in 1 second)

- Reverse for loop: 619,876.17 ops/s (the target is 9,000 out of 10,000, so the reverse for loop iterates only 1,000 times vs 9,000), meaning you can do 2502x more Set lookups than for loop checks, even when the item's position favors the loop.

- for loop: 137,434 ops/s. As expected it is even slower, but surprisingly not by much: the reverse for loop, which needs 1/9th the loop iterations, is only about 4.5x faster.

- Array.includes(target): 111,076 ops/s, a bit slower still than the for loop manually checking for the target; you can perform 1.23 manual checks for each includes check.

On Chrome v107.0.5304.87, 11/10/2022: It is no longer true that Set significantly underperforms Object; they now nearly tie. (One might expect Set to outperform Object, given the smaller set of possible operations on a Set compared with an object, and that is indeed the behavior in Safari.) Notably, Array.includes has apparently been significantly optimized in Chrome (V8), at least for this type of test:

- Object in: 792894327.81 ops/s ± 2.51% (fastest)

- Set.prototype.has: 790523864.11 ops/s ± 2.22% (fastest)

- Array.prototype.includes: 679373215.29 ops/s ± 1.82% (14.32% slower)

- Object.hasOwnProperty: 154217006.71 ops/s ± 1.31% (80.55% slower)

- for loop: 103015.26 ops/s ± 0.98% (99.99% slower)


Please don't use links in your answers (unless linking to official libraries), since these links can break, as happened in your case. Your link is a 404. – Gil Epshtain Feb 17 '19 at 09:48

I used a link but also copied the output when it was available. It's unfortunate that they changed their linking strategy so quickly. – Zargold Feb 19 '19 at 04:59

Updated the post now with a screenshot and a new JS performance website: https://jsbench.me – Zargold Nov 11 '20 at 22:21

I wrote about why Set.has() is slower here: https://stackoverflow.com/a/69338420/1474113 TL;DR: because V8 does not optimize Set.has() much. – ypresto Sep 26 '21 at 20:03

My observation is that a Set is always better, with two pitfalls to keep in mind for large arrays:

a) Creating a Set from an Array should be done in a for loop with a precached length.

slow (e.g. 18ms): `new Set(largeArray)`

fast (e.g. 6ms):

```javascript
const SET = new Set();
const L = largeArray.length;
for (var i = 0; i < L; i++) { SET.add(largeArray[i]) }
```

b) Iterating over the array can be done the same way (an indexed for loop with a precached length), because that is also faster than a for...of loop.

See https://jsfiddle.net/0j2gkae7/5/ for a real-life comparison of difference(), intersection(), union() and uniq() (plus their iteratee companions, etc.) with 40,000 elements.
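A minimal sketch of Set-based versions of those operations, using the indexed-loop construction from point a). The function names mirror the ones mentioned above but are hypothetical stand-ins, not the implementations from the fiddle; `difference` and `intersection` keep any duplicates present in `a`:

```javascript
// Build a Set with an indexed loop and a precached length (point a).
function toSet(arr) {
  const set = new Set();
  const len = arr.length;
  for (let i = 0; i < len; i++) set.add(arr[i]);
  return set;
}

// Elements of a that are not in b; each membership check is a Set lookup.
function difference(a, b) {
  const bSet = toSet(b);
  return a.filter(x => !bSet.has(x));
}

// Elements of a that are also in b.
function intersection(a, b) {
  const bSet = toSet(b);
  return a.filter(x => bSet.has(x));
}

// Unique elements from both arrays, in first-seen order.
function union(a, b) {
  return [...toSet(a.concat(b))];
}

// Unique elements of one array, in first-seen order.
function uniq(a) {
  return [...toSet(a)];
}

console.log(difference([1, 2, 3, 4], [2, 4]));      // [ 1, 3 ]
console.log(intersection([1, 2, 3, 4], [2, 4, 6])); // [ 2, 4 ]
console.log(union([1, 2, 2], [2, 3]));              // [ 1, 2, 3 ]
console.log(uniq([3, 1, 3, 2, 1]));                 // [ 3, 1, 2 ]
```

Turning `b` into a Set once makes each operation roughly O(len(a) + len(b)) instead of the O(len(a) × len(b)) you get from calling `includes` inside `filter`.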


Let's consider the case where you want to maintain a set of unique values. You can emulate nearly all the Set operations using an array: add, delete, has, clear, and size.

We can implement iteration as well, but note that it will not behave quite the same as a Set: you can safely modify a Set during iteration without skipping elements, but the same is not true of an array. So the comparison there is not quite fair for all use cases.
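A small demonstration of that difference, deleting even numbers while iterating. Deleting the current value from a Set during `for...of` still visits every remaining value, whereas splicing an array during iteration skips the element that slides into the removed slot:

```javascript
// Set: deleting the current value mid-iteration does not skip anything.
const set = new Set([2, 4, 5, 6]);
for (const v of set) {
  if (v % 2 === 0) set.delete(v);
}
console.log([...set]); // [ 5 ]

// Array: splice shifts later elements left, so the iterator's next index
// jumps over the element that moved into the removed slot (4 is never visited).
const arr = [2, 4, 5, 6];
for (const [i, v] of arr.entries()) {
  if (v % 2 === 0) arr.splice(i, 1);
}
console.log(arr); // [ 4, 5 ]
```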

```javascript
/** Set implemented using an array internally */
class ArraySet {
  constructor() {
    this._arr = [];
  }

  // Note: indexOf uses ===, so unlike Set this treats NaN as never present
  add(value) {
    if (this._arr.indexOf(value) === -1)
      this._arr.push(value);
    return this;
  }

  delete(value) {
    const idx = this._arr.indexOf(value);
    if (idx !== -1) {
      this._arr.splice(idx, 1);
      return true;
    }
    return false;
  }

  has(value) {
    return this._arr.indexOf(value) !== -1;
  }

  clear() {
    this._arr.length = 0;
  }

  get size() {
    return this._arr.length;
  }

  // Note: iterating is not safe from modifications
  values() {
    return this._arr.values();
  }

  [Symbol.iterator]() {
    return this._arr[Symbol.iterator]();
  }
}
```

While Set has better algorithmic complexity (O(1) vs ArraySet's O(n) operations), it likely has a bit more overhead in maintaining its internal tree/hash. At what size does the overhead of the Set become worth it? Here is the data I gathered from benchmarking NodeJS v18.12 for an average use case (see benchmarking code):

As expected, we see Set's algorithmic advantage (O(1) vs O(n)) for many elements. As for overhead:

- size: equal speed
- add: An interesting pattern emerges, where a Set's performance oscillates depending on its size. In general, an array will be faster for < 33 elements, and a Set is always faster for > 52 elements.
- delete: Array is faster for < 10 elements
- has: Array is faster for < 20 elements
- values: Set is always faster
- iterator (e.g. for-of loop): Set is always faster

In practice, you likely are not going to create your own ArraySet class, and instead will just inline the particular operation you're interested in. Assuming the array operations are inlined, how does the performance change?

The results are nearly identical, except now the iterating performance has improved for Array. An inlined for-of loop (not going through the ArraySet wrapper class) is now always faster than a Set. The values iterator is roughly equal speed.
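The original benchmarking code is not reproduced here, so the following is only a rough sketch for checking the crossover on your own machine with inlined `includes` vs `has`. It assumes a global `performance.now()`, does no warm-up, and runs each case once, so expect noisier results than a proper benchmark harness; the sizes and the `bench` helper are illustrative choices:

```javascript
// Hypothetical helper: run fn(i) for i = 0..iterations-1 and time it.
function bench(fn, iterations) {
  let hits = 0;
  const start = performance.now();
  for (let i = 0; i < iterations; i++) {
    if (fn(i)) hits++;
  }
  return { ms: performance.now() - start, hits };
}

const iterations = 200000;
const results = [];

for (const size of [5, 10, 20, 50, 100, 1000]) {
  const arr = Array.from({ length: size }, (_, i) => i);
  const set = new Set(arr);
  // Targets cycle through 0..2*size-1, so exactly half of the lookups hit.
  const a = bench(i => arr.includes(i % (2 * size)), iterations);
  const s = bench(i => set.has(i % (2 * size)), iterations);
  results.push({ size, arrayMs: a.ms, setMs: s.ms, arrayHits: a.hits, setHits: s.hits });
  console.log(`size ${size}: array ${a.ms.toFixed(2)} ms, set ${s.ms.toFixed(2)} ms`);
}
```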


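```javascript
console.time("set")
var s = new Set()
for (var i = 0; i < 10000; i++)
  s.add(Math.random())
s.forEach(function(e) {
  s.delete(e)
})
console.timeEnd("set")

console.time("array")
var s = new Array()
for (var i = 0; i < 10000; i++)
  s.push(Math.random())
s.forEach(function(e, i) {
  // Note: splice(i) with no count removes everything from index i to the end,
  // so the first call (i = 0) empties the array and later callbacks never run.
  s.splice(i)
})
console.timeEnd("array")
```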

Those three operations on 10K items gave me:

set: 7.787ms

array: 2.388ms


@zerkms: Define "work" :-) Yes, the array will be empty after the `forEach`, but probably not in the way you expected. If one wants comparable behaviour, it should be `s.forEach(function(e) { s.clear(); })` as well. – Bergi Aug 17 '16 at 23:43

Well, it does something, just not what is intended: it deletes all elements between index *i* and the end. That does not compare to what `delete` does on the Set. – trincot Aug 17 '16 at 23:43

…and then it actually will keep iterating till 10K, checking whether the array has any more indices. Unless that is optimised away. – Bergi Aug 17 '16 at 23:47
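As the comments point out, `s.splice(i)` truncates the array on the first callback, so the array half of the test above does almost no work. A sketch of a like-for-like version that removes one element at a time from each collection (the function names are hypothetical). Removing from the front is the array's worst case, since every `splice(0, 1)` shifts the remaining elements; popping from the end would be much cheaper:

```javascript
// Set: add n random values, then delete them one at a time.
function setRemoveEach(n) {
  const s = new Set();
  for (let i = 0; i < n; i++) s.add(Math.random());
  for (const e of s) s.delete(e); // deleting the current value mid-iteration is safe
  return s.size;
}

// Array: add n random values, then remove them one at a time from the front.
// splice(0, 1) removes exactly one element, unlike splice(i), which truncates.
function arrayRemoveEach(n) {
  const a = [];
  for (let i = 0; i < n; i++) a.push(Math.random());
  while (a.length > 0) a.splice(0, 1);
  return a.length;
}

console.time("set");
const setLeft = setRemoveEach(10000);
console.timeEnd("set");

console.time("array");
const arrayLeft = arrayRemoveEach(10000);
console.timeEnd("array");

console.log(setLeft, arrayLeft); // 0 0
```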