I have an Android game with 40,000 users online, and each user sends a request to the server every 5 seconds.
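For reference, here is a rough back-of-the-envelope estimate of the load those numbers imply. It assumes the requests are spread evenly over time, which is my own simplification (real traffic is likely burstier), and the variable names are only for illustration.

```javascript
// Rough load estimate, assuming requests are evenly distributed over time
// (an assumption; real traffic will probably come in bursts).
const onlineUsers = 40000;      // users online at the same time
const intervalSeconds = 5;      // each user sends one request per interval

// Average number of requests the server has to answer every second.
const requestsPerSecond = onlineUsers / intervalSeconds;

console.log(requestsPerSecond); // 8000
```

So under that assumption the server would need to sustain roughly 8,000 requests per second on average.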

I wrote this code to test the requests:

    const express = require('express')
    const app = express()
    const pg = require('pg')

    const conString = 'postgres://postgres:123456@localhost/dbtest'

    app.get('/', function (req, res, next) {
      // Check out a client from the connection pool
      pg.connect(conString, function (err, client, done) {
        if (err) {
          return next(err)
        }
        client.query('SELECT name, age FROM users limit 1;', [], function (err, result) {
          // Release the client back to the pool
          done()
          if (err) {
            return next(err)
          }
          res.json(result.rows)
        })
      })
    })

    app.listen(3000)

To test this code with 40,000 requests, I wrote this AJAX code:

    for (var i = 0; i < 40000; i++) {
      var j = 1;
      $.ajax({
        url: "http://85.185.161.139:3001/",
        success: function(reponse) {
          var d = new Date();
          console.log(j++, d.getHours() + ":" + d.getMinutes() + ":" + d.getSeconds());
        }
      });
    }

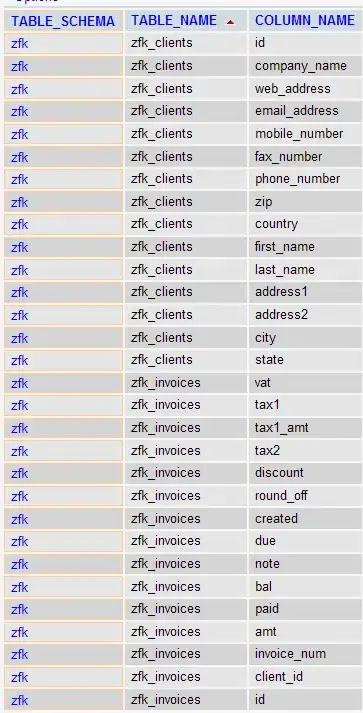

Server details (I know the specs are poor)

Questions:

This Node.js code only responds to 200 requests per second!

How can I improve my code to increase the number of responses per second?

Is using AJAX like this a correct way to simulate 40,000 online users?

Would it be better to use sockets?