The two main areas to look at are object retention and native leaks. This holds true for most garbage-collected languages running on a VM.

Most likely, something in your app or its modules is retaining references to objects and filling up the object space.
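As a rough illustration of what retention can look like, here is a minimal sketch. The names `cache`, `handleRequest`, `handleRequestBounded` and the `MAX_ENTRIES` limit are invented for this example and are not taken from any particular app.

```javascript
// Hypothetical example of accidental retention: a module-level Map that
// gains an entry per request and never removes any.
const cache = new Map();

function handleRequest(id) {
  // Every stored object stays reachable through `cache`, so the GC can
  // never reclaim it, and the heap grows with each new id.
  cache.set(id, { id, payload: new Array(100).fill(id) });
  return cache.size;
}

// One possible fix (an assumption, tune it for your workload): cap the
// cache and evict the oldest entry once the cap is exceeded.
const MAX_ENTRIES = 1000;
const boundedCache = new Map();

function handleRequestBounded(id) {
  boundedCache.set(id, { id, payload: new Array(100).fill(id) });
  if (boundedCache.size > MAX_ENTRIES) {
    // A Map iterates in insertion order, so the first key is the oldest.
    boundedCache.delete(boundedCache.keys().next().value);
  }
  return boundedCache.size;
}
```

In a heap snapshot, the first version would show up as an ever-growing number of objects retained by a single Map.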

Next are modules that use native code and leak native memory, which won't show up in the GC object space.

Finally, Node.js itself may have a native leak. This is unlikely given the large number of users, but it's always a possibility, especially with older versions of Node.js.

Application Memory and Garbage Collection

Run your application with Node's garbage collection logging turned on:

node --trace_gc --trace_gc_verbose app.js

This will print a block of information for each GC event. The most useful part is the first line, which tells you how much memory Node.js was using before and after the GC (the values on either side of the -> arrow).

[97577:0x101804a00] 38841 ms: Scavenge 369.0 (412.2) -> 356.0 (414.2) MB, 5.4 / 0 ms [allocation failure].
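If you want to graph these values, a small parser helps. The following is a sketch that assumes the line layout shown in the sample above; the exact format varies between Node.js and V8 versions, so the regular expression may need adjusting. The function name `parseGcLine` and the field names are my own, and I am assuming the figures in parentheses are the total memory V8 holds for the heap.

```javascript
// Matches lines such as:
// [97577:0x101804a00] 38841 ms: Scavenge 369.0 (412.2) -> 356.0 (414.2) MB, ...
const GC_LINE =
  /^\[(\d+):0x[0-9a-f]+\]\s+(\d+) ms: ([A-Za-z-]+) ([\d.]+) \(([\d.]+)\) -> ([\d.]+) \(([\d.]+)\) MB/;

// Returns the parsed fields, or null when the line is not a GC summary line.
function parseGcLine(line) {
  const m = GC_LINE.exec(line);
  if (!m) return null;
  return {
    pid: Number(m[1]),
    uptimeMs: Number(m[2]),
    type: m[3], // e.g. "Scavenge" or "Mark-sweep"
    usedBeforeMb: Number(m[4]),
    totalBeforeMb: Number(m[5]), // assumed meaning, see the note above
    usedAfterMb: Number(m[6]),
    totalAfterMb: Number(m[7]),
  };
}
```

Plotting `usedAfterMb` against `uptimeMs` gives the low points of the sawtooth, which is usually the clearest signal of long-term growth.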

The --trace_gc_verbose flag gives you all the lines after this, with more detail on each memory space.

[97577:0x101804a00] Memory allocator, used: 424180 KB, available: 1074956 KB

[97577:0x101804a00] New space, used: 789 KB, available: 15334 KB, committed: 32248 KB

[97577:0x101804a00] Old space, used: 311482 KB, available: 0 KB, committed: 321328 KB

[97577:0x101804a00] Code space, used: 22697 KB, available: 3117 KB, committed: 26170 KB

[97577:0x101804a00] Map space, used: 15031 KB, available: 3273 KB, committed: 19209 KB

[97577:0x101804a00] Large object space, used: 14497 KB, available: 1073915 KB, committed: 14640 KB

[97577:0x101804a00] All spaces, used: 364498 KB, available: 1095640 KB, committed: 413596 KB

[97577:0x101804a00] External memory reported: 19448 KB

[97577:0x101804a00] Total time spent in GC : 944.0 ms
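The per-space lines can be parsed in a similar way so each space can be tracked separately. This is a sketch based only on the sample output above; `parseSpaceLine` and its field names are hypothetical, and other Node.js versions may print different spaces or layouts.

```javascript
// Matches lines such as:
// [97577:0x101804a00] Old space, used: 311482 KB, available: 0 KB, committed: 321328 KB
// The "committed" part is optional because the "Memory allocator" line omits it.
const SPACE_LINE =
  /^\[\d+:0x[0-9a-f]+\]\s+([A-Za-z ]+?),\s+used:\s+(\d+) KB, available:\s+(\d+) KB(?:, committed:\s+(\d+) KB)?/;

// Returns the parsed fields, or null for lines that are not space summaries.
function parseSpaceLine(line) {
  const m = SPACE_LINE.exec(line);
  if (!m) return null;
  return {
    space: m[1],
    usedKb: Number(m[2]),
    availableKb: Number(m[3]),
    committedKb: m[4] === undefined ? null : Number(m[4]),
  };
}
```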

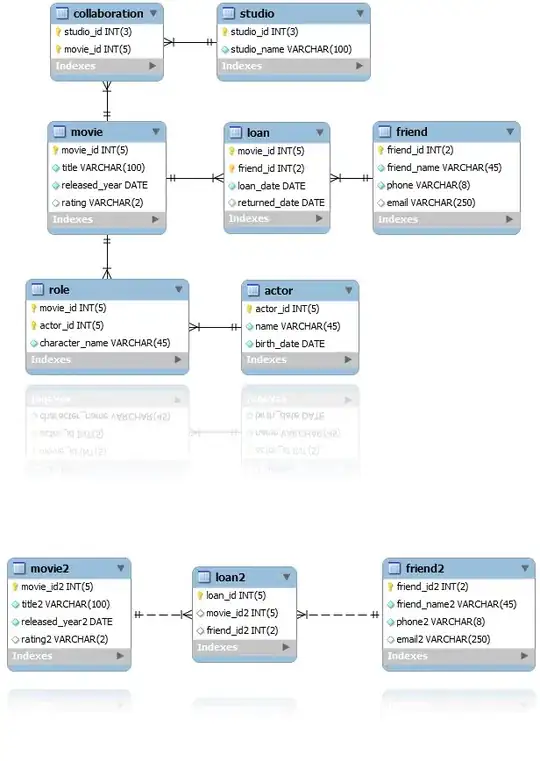

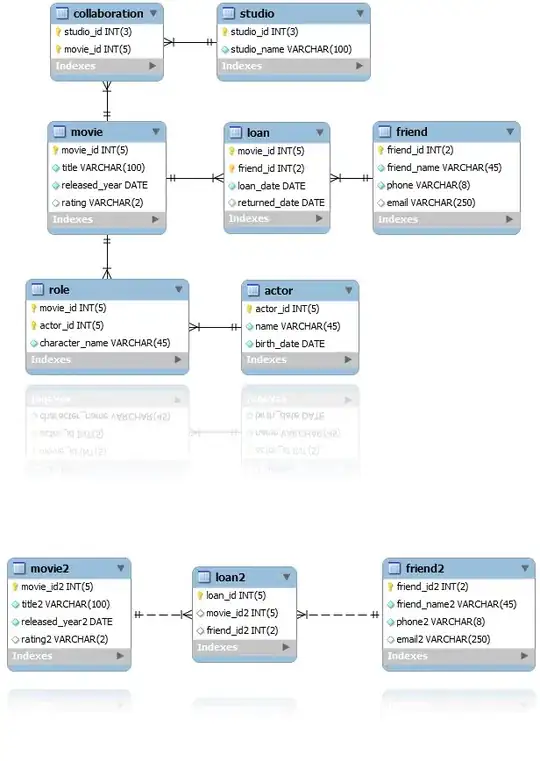

Graphing these values normally produces a sawtooth pattern. This "sawtoothing" happens on multiple levels as different memory spaces fill, hit a limit, then are garbage collected back down. This graph from Dynatrace's About Performance blog post, Understanding Garbage Collection and hunting Memory Leaks in Node.js, shows an app slowly increasing its object space.

Over time you should see which memory areas grow and which don't, which gives you some context for what code is keeping object references. If you don't see any memory growth in the heap while the process as a whole keeps growing, you may have a real memory leak, either in native modules or in Node.js itself.
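One rough way to tell which areas grow is to fit a straight line through the post-GC "used" values of each space and look at the slope. The sketch below assumes you have already collected those values into arrays, one per space; `slopePerSample`, `growingSpaces` and the threshold are illustrative names and choices, not an established method.

```javascript
// Least-squares slope of a series, in "units per sample".
// A sawtooth with a rising baseline gives a clearly positive slope.
function slopePerSample(values) {
  const n = values.length;
  if (n < 2) return 0;
  const meanX = (n - 1) / 2;
  const meanY = values.reduce((sum, v) => sum + v, 0) / n;
  let num = 0;
  let den = 0;
  for (let i = 0; i < n; i++) {
    num += (i - meanX) * (values[i] - meanY);
    den += (i - meanX) ** 2;
  }
  return num / den;
}

// Returns the names of the spaces whose slope exceeds `minSlope`.
// `seriesBySpace` maps a space name to its array of "used" values.
function growingSpaces(seriesBySpace, minSlope = 0) {
  return Object.keys(seriesBySpace).filter(
    (space) => slopePerSample(seriesBySpace[space]) > minSlope
  );
}
```

A short run can look like growth simply because the app is still warming up, so this is best applied to samples gathered over a long period under steady load.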

Process Memory

The memory a Node.js application reports as used by the garbage collector, called the "heap", doesn't always match what the OS reports for the process. The OS can have a larger amount of memory allocated to the process than Node.js is currently using. This can be normal: if the OS isn't under memory pressure and Node.js garbage collects objects, there will be a difference because the OS leaves the extra memory assigned to the process. It can also be abnormal, when something is leaking and the allocated process memory grows continually.
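The difference is visible from inside the process with `process.memoryUsage()`, which reports the resident set size alongside the V8 heap figures. A minimal sketch follows; the helper name `memorySnapshot` and the rounding are my own choices.

```javascript
// Returns the main process.memoryUsage() figures converted to MB.
// rss is what the OS has resident for the whole process; heapTotal and
// heapUsed describe only the V8 heap; external is memory held by C++
// objects that are bound to JavaScript objects.
function memorySnapshot() {
  const { rss, heapTotal, heapUsed, external } = process.memoryUsage();
  const toMb = (bytes) => Math.round((bytes / 1024 / 1024) * 10) / 10;
  return {
    rssMb: toMb(rss),
    heapTotalMb: toMb(heapTotal),
    heapUsedMb: toMb(heapUsed),
    externalMb: toMb(external),
  };
}

console.log(memorySnapshot());
```

Logging this periodically gives an in-process view to set against the GC log, although rss is less precise than the Pss figure discussed in this answer.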

Gather some OS memory information alongside your app's GC output:

node --trace_gc app.js &

pid=$!

while sleep 15; do

pss=$(sudo awk '/^Pss:/{p+=$2}END{print p}' /proc/$pid/smaps)

echo "$(date) pss[$pss]"

done

This will give you a date and a memory value in kilobytes (smaps reports its figures in kB), in line with your GC output, to compare:

[531:0x101804a00] 12539 ms: Scavenge 261.6 (306.4) -> 246.6 (307.4) MB, 5.0 / 0 ms [allocation failure].

Tue Aug 30 12:34:46 UTC 2016 pss[3396192]

The Pss figure in smaps accounts for shared memory duplication, so it is more accurate than the Rss figure used by ps.

Heapdumps

If you do find the garbage collection log memory matching the OS process memory growth, then it's likely that the application, or a module it depends on, is retaining references to old objects, and you will need to start looking at heap dumps to identify what those objects are.

Use Chrome DevTools and run your app in debug mode so you can attach to it and create heap dumps:

node --inspect app.js

You will see a little Node icon at the top left of DevTools when a Node process is detected; click it to open a DevTools window dedicated to the Node app. Take three heap snapshots: one just after the app has started, one somewhere in the middle of the memory growth, and one close to when it's at the maximum (before crashing!).

Compare the three snapshots, looking for large objects or heavily repeated object references in the later two dumps compared to the first.
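When attaching DevTools is awkward (on a remote server, for example), snapshots can also be written from inside the process. This sketch assumes a newer Node.js release than the ones discussed here, since `v8.writeHeapSnapshot()` was, to my knowledge, added in v11.13. The helper names and the SIGUSR2 trigger are my own choices, and the signal handler assumes a POSIX system.

```javascript
const v8 = require('v8');
const path = require('path');

// Builds a file name such as "start-2016-08-30T12-34-46-000Z.heapsnapshot".
function snapshotPath(label, dir = process.cwd(), now = new Date()) {
  const stamp = now.toISOString().replace(/[:.]/g, '-');
  return path.join(dir, `${label}-${stamp}.heapsnapshot`);
}

// Writes a snapshot and returns the file name. This call is synchronous
// and blocks the event loop while the snapshot is generated.
function takeSnapshot(label) {
  return v8.writeHeapSnapshot(snapshotPath(label));
}

// Hypothetical trigger: run `kill -USR2 <pid>` at the start, the middle,
// and near the peak of the memory growth.
process.on('SIGUSR2', () => {
  console.log('heap snapshot written to', takeSnapshot('manual'));
});
```

The resulting .heapsnapshot files can be loaded into the Memory tab of Chrome DevTools and compared there.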

Native leaks

If the GC logs don't show an increase in memory but the OS shows one for the Node process, it might be a native leak.
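That comparison can be roughed out in code from two `process.memoryUsage()` samples taken some time apart. The function name, the return labels, the 50 MB threshold and the "half of the growth" rule below are all arbitrary assumptions made for illustration.

```javascript
// Compares two process.memoryUsage()-style samples ({ rss, heapUsed } in
// bytes) and says where the growth appears to be.
function classifyGrowth(first, last, minGrowthBytes = 50 * 1024 * 1024) {
  const rssGrowth = last.rss - first.rss;
  const heapGrowth = last.heapUsed - first.heapUsed;
  // Ignore small changes, which are normal allocator noise.
  if (rssGrowth < minGrowthBytes) return 'stable';
  // The heap accounts for at least half of the process growth.
  if (heapGrowth >= rssGrowth / 2) return 'heap';
  // The process grew but the heap did not: possibly a native leak.
  return 'non-heap';
}
```

A "non-heap" result is only a hint. Buffers, for example, live outside the V8 heap, so large legitimate Buffer usage produces the same signature as a native leak.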

macOS (OS X) has a useful dev tool called leaks that can find unreferenced memory in a process without full debugging. I believe Valgrind can do the same type of thing with --leak-check=yes.

These tools might not identify exactly what is wrong, but they can confirm that the problem is a native leak.

Modules

To find native modules in your app use the following command:

find node_modules -type f -name "*.node"

For each of these modules, write a small test case that mirrors how your application uses it, then stress test it while checking for leaks.
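A stress test of this kind can be as simple as calling the module in a loop and sampling memory as it goes. The harness below is a sketch: `stress` and its parameters are invented names, it only handles synchronous calls, and forcing a collection relies on running Node with --expose-gc.

```javascript
// Calls `fn` repeatedly and records memory figures every `sampleEvery`
// iterations so growth can be inspected afterwards.
function stress(fn, iterations = 100000, sampleEvery = 10000) {
  const samples = [];
  for (let i = 1; i <= iterations; i++) {
    fn(i);
    if (i % sampleEvery === 0) {
      // global.gc is only defined when Node is started with --expose-gc.
      // Collecting first removes ordinary garbage from the comparison.
      if (global.gc) global.gc();
      const { rss, heapUsed } = process.memoryUsage();
      samples.push({ iteration: i, rss, heapUsed });
    }
  }
  return samples;
}
```

Usage would look something like `stress(() => nativeModule.doWork(input))`, where `nativeModule.doWork` stands in for whatever call your application makes. If rss climbs steadily while heapUsed stays flat, that module is a good suspect.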

You can also try temporarily excluding or disabling any of the native modules in your application to rule them out or highlight them as a cause.

Node.js

If possible, try a different release of Node.js. Move to the latest major version, latest minor/patch, or back a major version to see if anything improves or changes in the memory profiles.

The v4.x and v6.x Node.js releases have vast improvements in general memory management over v0.x. If you can update, the issue might go away via a bug fix or a V8 update.