I would like to create a basic CUDA application that demonstrates overlapping memory transfers with kernel execution, for use with students. However, the timeline in the NVIDIA Visual Profiler (nvvp) shows no concurrent execution. Can you help me find out what is wrong?

The full source (Visual Studio 2015, CUDA 8.0, compiled for sm_35 / compute_35, running on a Titan X card):

    #include "cuda_runtime.h"
    #include "device_launch_parameters.h"
    #include <malloc.h>
    #include <stdio.h>

    #define MEMSIZE 8000000
    #define STREAM_N 8

    __global__ void TestKernel(char *img)
    {
        int pos = blockIdx.x * blockDim.x + threadIdx.x;
        for (int k = 0; k < 100; k++)
            img[pos] = img[pos] / 2 + 128;
    }

    int main()
    {
        // allocate memory and streams
        char *img[STREAM_N];
        char *d_img[STREAM_N];
        cudaStream_t streams[STREAM_N];
        for (int pi = 0; pi < STREAM_N; pi++)
        {
            cudaMalloc((void**)&d_img[pi], MEMSIZE / STREAM_N);
            cudaMallocHost((void**)&img[pi], MEMSIZE / STREAM_N);  // pinned host memory, needed for copies to overlap
            cudaStreamCreate(&streams[pi]);
        }

        // process packages one way: issue all H2D copies, then all kernels, then all D2H copies
        cudaError_t stat;
        for (int pi = 0; pi < STREAM_N; pi++)
            cudaMemcpyAsync(d_img[pi], img[pi], MEMSIZE / STREAM_N, cudaMemcpyHostToDevice, streams[pi]);
        for (int pi = 0; pi < STREAM_N; pi++)
            TestKernel<<<MEMSIZE / STREAM_N / 400, 400, 0, streams[pi]>>>(d_img[pi]);
        for (int pi = 0; pi < STREAM_N; pi++)
            cudaMemcpyAsync(img[pi], d_img[pi], MEMSIZE / STREAM_N, cudaMemcpyDeviceToHost, streams[pi]);

        // process packages another way: issue copy, kernel, copy back for each stream in turn
        for (int pi = 0; pi < STREAM_N; pi++)
        {
            cudaMemcpyAsync(d_img[pi], img[pi], MEMSIZE / STREAM_N, cudaMemcpyHostToDevice, streams[pi]);
            TestKernel<<<MEMSIZE / STREAM_N / 400, 400, 0, streams[pi]>>>(d_img[pi]);
            cudaMemcpyAsync(img[pi], d_img[pi], MEMSIZE / STREAM_N, cudaMemcpyDeviceToHost, streams[pi]);
        }
        cudaDeviceSynchronize();

        // destroy streams and free memory
        for (int pi = 0; pi < STREAM_N; pi++)
        {
            cudaStreamDestroy(streams[pi]);
            cudaFreeHost(img[pi]);
            cudaFree(d_img[pi]);
        }
    }

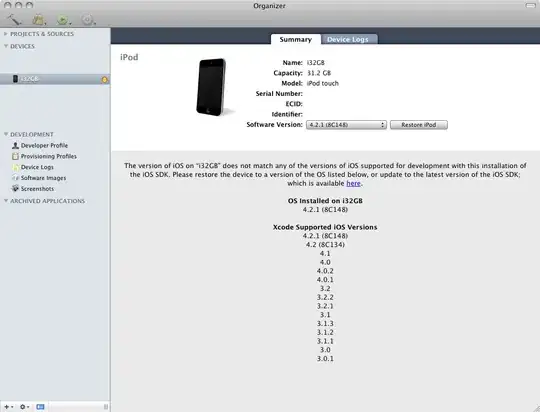

And the visual profiler output: