This probably lets you compare against epsilon using a relative error rather than an absolute error.

Compare these two cases:

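```php
<?php
function areEqual(float $a, float $b): bool {
    // Relative error: the difference divided by $b.
    // fdiv() (PHP 8+) returns INF instead of throwing DivisionByZeroError when $b is 0.
    return abs(fdiv($a - $b, $b)) < 0.00001;
}

areEqual(10000, 10000.01); // true: relative error is about 1e-6
areEqual(0.0000001, 0);    // false: relative error is infinite
```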

A note on the example values above: the epsilon of 0.00001 is chosen for convenience. The smallest possible epsilon is much smaller than these values anyway, so let's ignore that. The algorithm assumes that $a and $b are close to each other, so it does not matter whether we divide by $a or $b. Realistically, the large value would have to be much larger than 10000 (with a very large exponent), and the small value could be much smaller than 0.0000001, before problems appear. For convenience, let's assume these are values that can cause problems.
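For reference, PHP exposes the machine epsilon of its floats as the constant `PHP_FLOAT_EPSILON` (available since PHP 7.2). This is presumably the "smallest possible epsilon" meant here: it is the gap between 1.0 and the next larger float. A quick check, assuming floats are 64-bit IEEE 754 doubles as on common platforms:

```php
<?php
// On 64-bit IEEE 754 doubles, PHP_FLOAT_EPSILON is 2^-52 (about 2.22e-16),
// far below the 0.00001 used in the example.
var_dump(PHP_FLOAT_EPSILON == 2 ** -52);      // bool(true)

// Adding it to 1.0 yields the next float up.
var_dump(1.0 + PHP_FLOAT_EPSILON > 1.0);      // bool(true)

// Adding only half of it is a tie that rounds back to 1.0 (round half to even).
var_dump(1.0 + PHP_FLOAT_EPSILON / 2 == 1.0); // bool(true)
```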

Now you can already see the difference.

For the large numbers: if the compared floats are extremely large, the epsilon may be too small. Internally, a float can store only a fixed number of significant digits (about 15 to 17 decimal digits for a 64-bit double), while its exponent can be far larger than that. As a result, the source of floating-point error, i.e. the last stored digits of the float, can sit above the units digit. In other words, for extremely large floats the absolute error can exceed 1, which already dwarfs our epsilon of 0.00001.
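A minimal sketch of the large-number case, assuming 64-bit IEEE 754 doubles. The value 1e17 and the `$tolerance` variable are chosen for illustration only. At this magnitude two distinct floats can never differ by less than 16, so an absolute check against 0.00001 fails, while the relative check still passes:

```php
<?php
$tolerance = 0.00001;

// 1e17 lies between 2^56 and 2^57, and a double has a 53-bit significand,
// so adjacent floats at this magnitude are 2^(56 - 52) = 16 apart.
$a = 1e17;
var_dump($a + 1.0 == $a);                   // bool(true): the +1 is rounded away

$b = $a + 16.0;                             // the next float above 1e17
var_dump($b - $a);                          // float(16)

// The absolute error of 16 can never get below 0.00001.
var_dump(abs($a - $b) < $tolerance);        // bool(false)

// The relative error, 16 / 1e17 (about 1.6e-16), easily does.
var_dump(abs(($a - $b) / $b) < $tolerance); // bool(true)
```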

For the small numbers: this is even more obvious. Both numbers are already smaller than the epsilon, so even when you compare 0.0000001 with 0, where the relative error is infinitely large, an absolute check still reports them as equal. In this case you either scale both operands up or decrease the epsilon. The two are equivalent, but in terms of implementation it is more convenient to divide the difference by one of the operands. This scales the difference up for small numbers (/ 0.0001 is equivalent to * 10000) and down for large numbers (/ 10000, while the difference is hopefully much smaller than 10000).
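A small sketch of the same effect with two tiny non-zero values, one twice the other. The values and the `$tolerance` variable are illustrative assumptions. The absolute check calls them equal, while dividing by `$b` scales the difference up enough for the relative check to tell them apart:

```php
<?php
$tolerance = 0.00001;

// Two tiny values; $b is twice $a.
$a = 0.0000001;
$b = 0.0000002;

// Absolute error is 1e-7, already below the tolerance, so "equal".
var_dump(abs($a - $b) < $tolerance);        // bool(true)

// Dividing by $b (i.e. multiplying by 5,000,000) turns the
// difference into a relative error of 0.5, so "not equal".
var_dump(abs(($a - $b) / $b) < $tolerance); // bool(false)
```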

There is another name for this check. While abs($a - $b) is called the absolute error, what we usually want is the relative error, which is the absolute error ÷ the approximate value. Since the values can also be negative, we take the absolute value of the whole quotient, ($a - $b) / $b, instead. Our "epsilon" of 0.00001 therefore means that the tolerated relative error is 0.00001, i.e. 0.001%.
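Plugging the first example call, with $a = 10000 and $b = 10000.01, into this definition shows why it passes even though its absolute error (0.01) is a thousand times the epsilon:

$$
\left|\frac{a - b}{b}\right| = \frac{|10000 - 10000.01|}{10000.01} = \frac{0.01}{10000.01} \approx 1.0 \times 10^{-6} < 10^{-5}
$$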

Keep in mind that this is still not absolutely safe. After numerous transformations in your program you may, for example, add your numbers to (or multiply them by) some big numbers and then subtract the big numbers away again. This leaves behind an error that is negligible to a human but significant relative to your epsilon. Therefore, always think twice before choosing an epsilon value or a float comparison algorithm.
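A minimal sketch of such a round trip, assuming 64-bit IEEE 754 doubles. The offset 1e15 is an arbitrary illustrative choice. The small value comes back noticeably changed, and even the relative check rejects it:

```php
<?php
$tolerance = 0.00001;
$x = 0.1;

// Near 1e15 adjacent floats are 0.125 apart (2^49 <= 1e15 < 2^50),
// so 1e15 + 0.1 rounds to 1e15 + 0.125 before the subtraction.
$y = ($x + 1e15) - 1e15;
var_dump($y);                               // float(0.125)

// The rounding is tiny next to 1e15, but relative to 0.1
// it is a 25% error, far beyond the tolerance.
var_dump(abs(($y - $x) / $x) < $tolerance); // bool(false)
```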

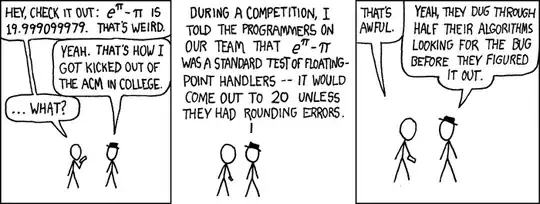

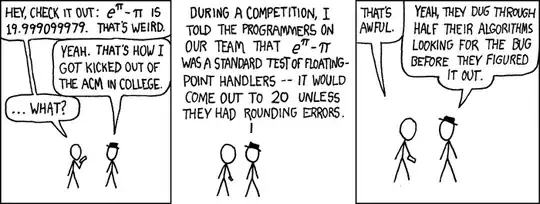

As a best practice, avoid adding, subtracting or multiplying big numbers with small numbers, since this increases the chance of errors. When developing (and especially when simplifying) your algorithms, always take into account that there might be an error in your floats. This may increase your workload to a silly extent, but as long as you stay aware of it, this kind of worry can sometimes save you from getting kicked out of a team.
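As a rough illustration of the adding case (a sketch assuming 64-bit IEEE 754 doubles, not a general-purpose summation routine), adding small values to a big one one at a time can lose them entirely, while summing the small values first and adding the big one last keeps them:

```php
<?php
// At 1e16 (between 2^53 and 2^54) adjacent floats are 2 apart, and
// 1e16 + 1.0 is a tie that rounds back to 1e16 (round half to even).
$naive = 1e16;
for ($i = 0; $i < 10; $i++) {
    $naive += 1.0; // each small addition is rounded away
}

// Sum the small numbers first, then add the big one once.
$small = 0.0;
for ($i = 0; $i < 10; $i++) {
    $small += 1.0;
}
$ordered = 1e16 + $small;

var_dump($naive == 1e16);    // bool(true): all ten additions were lost
var_dump($ordered - $naive); // float(10)
```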