I'm trying to demonstrate that it's a very bad idea not to use std::atomic<>s, but I can't manage to create an example that reproduces the failure. I have two threads, and one of them does:

    {
        foobar = false;
    }

and the other:

    {
        if (foobar) {
            // ...
        }
    }
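A minimal, self-contained sketch of this kind of two-thread test might look like the following. It is only an illustration of the setup: the function name `run_once`, the `spins` counter, and the use of a spin loop in place of the single `if (foobar)` check are assumptions, and it uses the std::atomic<bool> variant so that the program is well defined.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// Shared flag, initialized to true as in the description above.
std::atomic<bool> foobar{true};

// Hypothetical harness (illustrative name): starts one reader and one writer
// and returns how many times the reader saw foobar == true.
long run_once() {
    foobar.store(true);
    long spins = 0;

    // Reader: repeats the "if (foobar)" check until it observes false.
    std::thread reader([&spins] {
        while (foobar.load()) {
            ++spins;
        }
    });

    // Writer: performs the single store from the first snippet.
    std::thread writer([] { foobar.store(false); });

    writer.join();
    reader.join();  // join() makes the reader's final spins value visible here

    assert(!foobar.load());
    return spins;
}
```

With a plain bool in place of std::atomic<bool>, the same loop would be a data race, so the compiler would be allowed to read the flag once and never reload it; whether it actually does so depends on the compiler and optimization level.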

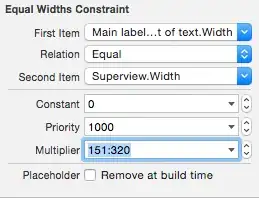

The type of foobar is either bool or std::atomic_bool, and it's initialized to true. I'm using OS X Yosemite and even tried to use this trick to hint via CPU affinity that I want the threads to run on different cores. I run these operations in loops and so on, and in every case there's no observable difference in execution. I ended up inspecting the assembly generated by clang (clang -std=c++11 -lstdc++ -O3 -S test.cpp), and I see that the asm differences on the read side are minor (without atomic on the left, with atomic on the right):

There's no mfence or anything that "dramatic". On the write side, something more "dramatic" happens:

As you can see, the atomic<> version uses xchgb, which carries an implicit lock prefix. When I compile with a relatively old version of gcc (v4.5.2), I can see all sorts of mfences being added, which also suggests there's a serious concern.

I kind of understand that "X86 implements a very strong memory model" (ref) and that mfences might not be necessary, but does that mean that, unless I want to write cross-platform code that e.g. supports ARM, I don't really need any atomic<>s unless I care about consistency at the ns level?
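One pattern commonly used to probe this question is the store-buffering litmus test: even on x86, a store followed by a load from a different location may appear reordered, because the store can sit in the core's store buffer while the load completes. The sketch below is only an illustration under stated assumptions: the names `count_both_zero`, `x`, `y`, `r1`, `r2`, and `ready` are hypothetical, and the template is meant to be instantiated only with std::memory_order_relaxed or std::memory_order_seq_cst (other orders are not valid for both loads and stores).

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// Hypothetical litmus harness (illustrative names throughout).
// Each iteration runs:   thread a: x = 1; r1 = y;   thread b: y = 1; r2 = x;
// and counts how often both threads read 0.
template <std::memory_order Order>
int count_both_zero(int iterations) {
    int hits = 0;
    for (int i = 0; i < iterations; ++i) {
        std::atomic<int> x{0}, y{0};
        std::atomic<int> ready{0};  // crude start barrier so the threads overlap
        int r1 = -1, r2 = -1;

        std::thread a([&] {
            ready.fetch_add(1);
            while (ready.load() != 2) {}  // wait until both threads are running
            x.store(1, Order);
            r1 = y.load(Order);
        });
        std::thread b([&] {
            ready.fetch_add(1);
            while (ready.load() != 2) {}
            y.store(1, Order);
            r2 = x.load(Order);
        });

        a.join();
        b.join();

        // Under sequential consistency at least one thread must see the
        // other's store, so r1 == 0 && r2 == 0 is forbidden.
        if (r1 == 0 && r2 == 0) {
            ++hits;
        }
    }
    return hits;
}
```

With `count_both_zero<std::memory_order_seq_cst>(n)` the C++ memory model guarantees a result of 0. With `count_both_zero<std::memory_order_relaxed>(n)` a non-zero result is permitted and may show up on a multi-core x86 machine, though how often (if at all) depends on the hardware, the scheduler, and the iteration count.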

I've watched Herb Sutter's "atomic<> Weapons" talk, but I'm still struck by how difficult it is to create a simple example that reproduces those problems.