I am working on a GraphDB experiment to test if we can shift our relational data from T-SQL to GraphDB (using Neo4j). We are looking to work with large amounts of data which can benefit if we query a graph structure. At the moment we are viewing really low query performance even for some simple where clause and aggregation steps. It would be nice to obtain some advice on how we can achieve better performance since Neo4j claims to work on billions of nodes. Here is what all we have tried.

So, let me describe the data: We have customer data regarding the Countries (Geography) and the Products (SKUs) they have visited/purchased online. Every time a customer comes to the website, his views/purchases are tracked as a part of a unique session id, which changes after 30 minutes. We are trying to accurately calculate the number of visits a person has made to the website by calculating the distinct Session IDs.

We have about 26M rows of data relating to customer visits/purchases made when coming to the website. The data in SQL is in the following format:

----------------------------------------------------------------------------

| Date| SessionId| Geography| SKU| OrderId| Revenue| Units||

|--------|------------|------------|------|----------|-----------|--------||

|20160101| 111| USA| A| null| 0| 0||

|20160101| 111| USA| B| 1| 50| 1||

|20160101| 222| UK| A| 2| 10| 1||

----------------------------------------------------------------------------

Problem: We need to calculate the number of visits a customer makes to the site accurately. Visits are calculated as distinct session ids.

Explanation of Visit calculation logic: In the above model if we view the Visits where a person came to the site looking for SKU named "A", our answer would be 2. The first view in session 111 and second in session 222. Similarly if we want to know the number of visits where a person came to the site looking for SKU "A" or "B" then also the answer would be 2. This is so because in session 111, both the products were viewed but the total visits are 1 only. There are 2 product views in session 111, but there is only 1 visit. So counting the other visit from 222, we have a total of 2 visits still.

Here's model we have built: We have a fact node, one for each row present in the data. We have made distinct Geography and Product nodes, 400 and 4000 respectively. Each of these nodes have relationships to multiple facts. Similarly we have distinct nodes for Dates.

We created distinct nodes for Session ids and order ids too. Both of these point to the facts. So basically we have distinct nodes with following properties:

1) Geography {Locale, Country}

2) SKU {SKU, ProductName}

3) Date {Date}

4) Sessions {SessionIds}

5) Orders {OrderIds}

6) Facts {Locale, Country, SKU, ProductName, Date, SessionIds, OrderIds}

The relationship schema is based on matching property values and looks like :

(:Geography)-[:FactGeo]->(:Facts)

(:SKU)-[:FactSKU]->(:Facts]

(:Date)-[:FactDate]->(:Facts)

(:SessionId)-[:FactSessions]->(:Facts)

(:OrderId)-[:FactOrders]->(:Facts)

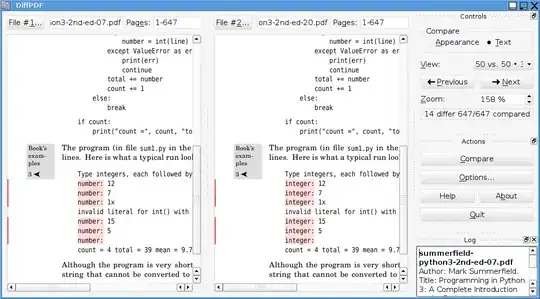

Here is a snapshot of the schema:

As some of you stated that a missing Index might be causing the issue, but there are all the indices which i would need and more. I am assuming that adding the extra indices that i don't query on mostly, would not lower the performance so significantly.

There are a total of 44M nodes, most of them are for Facts and SessionId nodes. There are 131M relationships.

If i try to query for identifying the distinct visits for people belonging to around 20 Countries and for around 20 Products, it takes about 44 seconds to get the answer. It takes SQL about 47 seconds (without indexing) for the same (when i have indices built in Neo4j). This is not the exceptional improvement that i hoped to obtain from using Neo4j, since I think building an index in SQL would give better performance.

The query i wrote was like this:

(geo: Geography)-[:FactGeo]->(fct: Facts)<-(sku: SKU)

WHERE geo.Country IN ["US", "India", "UK"...]

AND sku.SKU IN ["A","B","C".....]

MATCH (ssn: Sessions)-[:FactSessions]->(fct)

RETURN COUNT(DISTINCT ssn.SessionId);

When i use PROFILE, this results in approx 69M db hits:

Q1) Is there a way I can improve this model to have a better performing query? For example i can change the above model by removing the Session nodes and just counting the SessionIds present on Fact nodes as in the query below:

(geo: Geography)-[:FactGeo]->(fct: Facts)<-(sku: SKU)

WHERE geo.Country IN ["US", "India", "UK"...]

AND sku.SKU IN ["A","B","C".....]

RETURN COUNT(DISTINCT fct.SessionId);

Which happens because of the huge number of nodes and relationships between Facts and Sessions. So it seems that i would rather benefit from having SessionIds as a Property of Facts nodes.

When i use PROFILE, this results in approx 50M db hits:

Also, can someone help me understand the tipping point where it becomes difficult to scan nodes on the basis of properties as I increase the number of properties the nodes have?

Q2) Is there something wrong with my Neo4j configurations as it is taking 44 seconds? I have a 114GB ram for the java heap, but no SSD. I have not tweaked around with other configurations and would like to know if those could be the bottleneck over here as I was told that Neo4j could run on billions of nodes?

My Machine's Total RAM: 140GB RAM dedicated to Java heap: 114GB (From what I recollect, there was almost negligible performance increase as i moved from 64GB RAM to 114GB) Page Cache Size: 4GB Approximate GraphDB size: 45GB Neo4j Version i am using: 3.0.4 Enterprise Edition

Q3) Is there any better way to formulate a query which performs better? I tried the following query:

(geo: Geography)-[:FactGeo]->(fct: Facts)

WHERE geo.Country IN ["US", "India", "UK"...]

MATCH (sku: SKU)-[:FactSKU]->(fct)

WHERE sku.SKU IN ["A","B","C".....]

RETURN COUNT(DISTINCT fct.SessionId);

But it gives around the same performance and records the same number of DBhits as the slightly improved query in Q1.

When i use PROFILE, this results in approx 50M db hits, exactly same as the query in Q1:

Q4) If i modify my query from Q3 to as below, instead of seeing an improvement i see a major decrease in performance:

MATCH (geo: Geography)

WHERE geo.Country IN ["US", "India", "UK"...]

WITH geo

MATCH (sku: SKU)

WHERE sku.SKU IN ["A","B","C".....]

WITH geo, sku

MATCH (geo)-[:FactGeo]->(fct: Facts)<-[:FactSKU]-(sku)

RETURN COUNT(DISTINCT fct.SessionId);

This appears to be creating a cross join between the 400 Geography nodes and the 4000 sku nodes, and then testing each relationship to possible exist between one of those 1,600,000 possible relationship combinations. Am i understanding this correctly?

I know these are long questions and a very long post. But I have tried tirelessly for more than a week to work these things out on my own and i have shared some of my findings over here. Hopefully the community will be able to guide me with some of these queries. Thanks in advance for even reading the post!

EDIT-01: Tore, Inverse and Frank, Thanks a lot for trying to help me out guys, I hope we can figure out the root cause here.

A) I have added some more details, regarding my PROFILE results and also my SCHEMA and Machine/Neo4j config stats.

B) As i consider the model that @InverseFalcon suggested, and try to keep in mind the facts about relationships being a better choice and limiting the number of relationships.

I am tweaking Inverse's model a bit because I think we might be able to reduce it a bit. How is this for as a model:

(:Session)-[:ON]->(:Date)

(:Session)-[:IN]->(:Geography)

(:Session)-[:Viewed]->(:SKU)

(:Session)-[:Bought]->(:SKU)

OR

(:Session)-[:ON]->(:Date)

(:Session)-[:IN {SKU: "A", HasViewedOrBought: 1}]->(:Geography)

Now both of the models can have advantages. In the first one I maintain SKUs as distinct nodes and have different relationships between them to determine if it was a purchase or a view.

In the second one, i completely remove the SKU nodes adding them as relationships. I understand that this will lead to many relationships, but the number of relationships will still be small as we are also discarding all the nodes and relationships of SKU nodes which we are removing. We will have to test the Relationship by comparing the SKU strings, and that is an intensive operation and perhaps could be avoided by keeping only Session and Geography nodes and removing the Date nodes and adding Date property to the SKU relationships. As below:

(:Session)-[:ON]->(:Date)

(:Session)-[:IN {Date: {"2016-01-01"}, SKU: "A", HasViewedOrBought: 1}]->(:Geography)

But then I would be testing the relationships between the Geography and SKU nodes on the basis of two properties, both of which are strings. (Arguably date can be converted to integer, but still i see we have another face-off between alternate models)

C) @Tore, thanks for explaining and confirming my understanding of Q4. But if the GraphDB does a calculation like that, in which it joins and compares every relation with that join, isn't it actually working in the same manner an RDBMS should? It is ineffective in utilizing the graph traversals that it should easily be able to do by finding direct paths between the two set of Geography and Product nodes. This seems to be a bad implementation to me?