The Problem Size

Let's first consider the worst case:

You have 10 columns and 5 (full) rows per column. It should be clear that you will be able to get (with the appropriate number population for each place) up to 5^10 ≅ 10^6 different results (solution space).

For example, the following matrix will give you the worst case for 3 columns:

| 1 10 100 |

| 2 20 200 |

| 3 30 300 |

| 4 40 400 |

| 5 50 500 |

resulting in 5^3=125 different results. Each result is in the form {a1 a2 a3} with ai ∈ {1,5}

It's quite easy to show that such a matrix will always exist for any number n of columns.

Now, to get each numerical result, you will need to do n-1 sums, adding up to a problem size of O(n 5^n). So, that's the worst case and I think nothing can be done about it, because to know the possible results you NEED to effectively perform the sums.

More benign incarnations:

The problem complexity may be cut off in two ways:

- Less numbers (i.e. not all columns are full)

- Repeated results (i.e. several partial sums give the same result, and you can join them in one thread). Much more in this later.

Let's see a simplified example of the later with two rows:

| 7 6 100 |

| 3 4 200 |

| 1 2 200 |

at first sight you will need to do 2 3^3 sums. But that's not the real case. As you add up the first column you don't get the expected 9 different results, but only 6 ({13,11,9,7,5,3}).

So you don't have to carry your nine results up to the third column, but only 6.

Of course, that is on the expense of deleting the repeating numbers from the list. The "Removal of Repeated Integer Elements" was posted before in SO and I'll not repeat the discussion here, but just cite that doing a mergesort O(m log m) in the list size (m) will remove the duplicates. If you want something easier, a double loop O(m^2) will do.

Anyway, I'll not try to calculate the size of the (mean) problem in this way for several reasons. One of them is that the "m" in the sort merge is not the size of the problem, but the size of the vector of results after adding up any two columns, and that operation is repeated (n-1) times ... and I really don't want to do the math :(.

The other reason is that as I implemented the algorithm, we will be able to use some experimental results and save us from my surely leaking theoretical considerations.

The Algorithm

With what we said before, it is clear that we should optimize for the benign cases, as the worst case is a lost one.

For doing so, we need to use lists (or variable dim vectors, or whatever can emulate those) for the columns and do a merge after every column add.

The merge may be replaced by several other algorithms (such as an insertion on a BTree) without modifying the results.

So the algorithm (procedural pseudocode) is something like:

Set result_vector to Column 1

For column i in (2 to n-1)

Remove repeated integers in the result_vector

Add every element of result_vector to every element of column i+1

giving a new result vector

Next column

Remove repeated integers in the result_vector

Or as you asked for it, a recursive version may work as follows:

function genResVector(a:list, b:list): returns list

local c:list

{

Set c = CartesianProduct (a x b)

Set c = Sum up each element {a[i],b[j]} of c </code>

Drop repeated elements of c

Return(c)

}

function ResursiveAdd(a:matrix, i integer): returns list

{

genResVector[Column i from a, RecursiveAdd[a, i-1]];

}

function ResursiveAdd(a:matrix, i==0 integer): returns list={0}

Algorithm Implementation (Recursive)

I choose a functional language, I guess it's no big deal to translate to any procedural one.

Our program has two functions:

- genResVector, which sums two lists giving all possible results with repeated elements removed, and

- recursiveAdd, which recurses on the matrix columns adding up all of them.

recursiveAdd, which recurses on the matrix columns adding up all of them.

The code is:

genResVector[x__, y__] := (* Header: A function that takes two lists as input *)

Union[ (* remove duplicates from resulting list *)

Apply (* distribute the following function on the lists *)

[Plus, (* "Add" is the function to be distributed *)

Tuples[{x, y}],2] (*generate all combinations of the two lists *)];

recursiveAdd[t_, i_] := genResVector[t[[i]], recursiveAdd[t, i - 1]];

(* Recursive add function *)

recursiveAdd[t_, 0] := {0}; (* With its stop pit *)

Test

If we take your example list

| 1 1 7 9 1 1 |

| 2 2 5 2 2 |

| 3 3 |

| 4 |

| 5 |

And run the program the result is:

{11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27}

The maximum and minimum are very easy to verify since they correspond to taking the Min or Max from each column.

Some interesting results

Let's consider what happens when the numbers on each position of the matrix is bounded. For that we will take a full (10 x 5 ) matrix and populate it with Random Integers.

In the extreme case where the integers are only zeros or ones, we may expect two things:

- A very small result set

- Fast execution, since there will be a lot of duplicate intermediate results

If we increase the Range of our Random Integers we may expect increasing result sets and execution times.

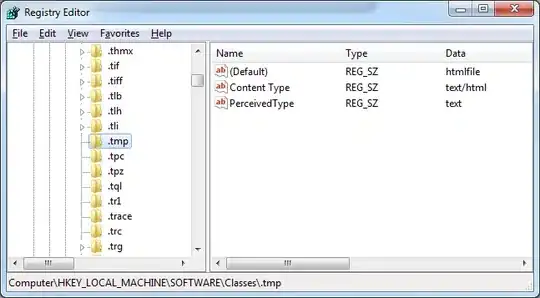

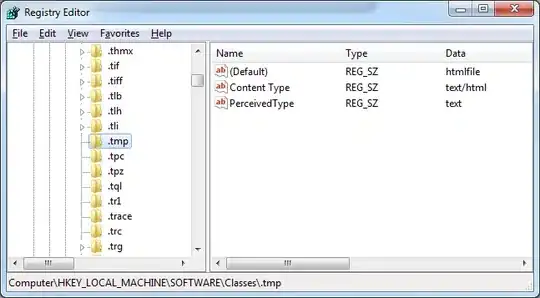

Experiment 1: 5x10 matrix populated with varying range random integers

It's clear enough that for a result set near the maximum result set size (5^10 ≅ 10^6 ) the Calculation time and the "Number of != results" have an asymptote. The fact that we see increasing functions just denote that we are still far from that point.

Morale: The smaller your elements are, the better chances you have to get it fast. This is because you are likely to have a lot of repetitions!

Note that our MAX calculation time is near 20 secs for the worst case tested

Experiment 2: Optimizations that aren't

Having a lot of memory available, we can calculate by brute force, not removing the repeated results.

The result is interesting ... 10.6 secs! ... Wait! What happened ? Our little "remove repeated integers" trick is eating up a lot of time, and when there are not a lot of results to remove there is no gain, but looses in trying to get rid of the repetitions.

But we may get a lot of benefits from the optimization when the Max numbers in the matrix are well under 5 10^5. Remember that I'm doing these tests with the 5x10 matrix fully loaded.

The Morale of this experiment is: The repeated integer removal algorithm is critical.

HTH!

PS: I have a few more experiments to post, if I get the time to edit them.